APIS data transfer mechanism; Data Push

A new mechanism for transferring data internally in APIS has been implemented. It is hereafter referred to as Data Push.

Background

In brief, when connecting to an OPC UA server, one can use different rates for data sampling and publishing. For example, you can sample items every second but choose to send (publish) data to the client only every 30 seconds. The client will then receive up to 30 samples per item every 30 seconds, i.e. a small queue/series of samples, instead of sample by sample, which is the old APIS way and also the way of classic OPC DA. To complicate matters further, a UA client may have a mix of sampling rates within one subscription (publishing).
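The relationship between sampling and publishing can be illustrated with a minimal Python sketch. It is not APIS or OPC UA code: the function `publish_packages`, the item name `Item1` and the tuple layout are hypothetical, and it assumes the server queue is large enough to hold every sample between two publishes.

```python
from collections import defaultdict

def publish_packages(samples, publishing_interval_s):
    """Group (timestamp_s, item, value) samples into one package per publish.

    Hypothetical helper: a sample taken at time t is assumed to be delivered
    with the first publish at or after t.
    """
    packages = defaultdict(lambda: defaultdict(list))
    for timestamp_s, item, value in samples:
        # Ceiling division on integers: index of the publish that carries this sample.
        publish_index = -(-timestamp_s // publishing_interval_s)
        publish_time = publish_index * publishing_interval_s
        packages[publish_time][item].append((timestamp_s, value))
    return {t: dict(items) for t, items in sorted(packages.items())}

# One item sampled every second for 60 seconds (timestamps 1..60).
samples = [(t, "Item1", float(t)) for t in range(1, 61)]
packages = publish_packages(samples, publishing_interval_s=30)

for publish_time, items in packages.items():
    # Prints the publish time and the queue length per item.
    print(publish_time, {item: len(queue) for item, queue in items.items()})
```

With a 1 second sampling interval and a 30 second publishing interval, each package in this sketch carries a queue of 30 samples for the item.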

Traditionally, APIS Hive has relied on sampling when transferring data between modules and internal services, e.g. the External Item manager and the Logger/AlarmArea modules consuming data. To consume data from an OPC UA client in the old APIS way, the UA client module, APIS OpcUaBee, must apply the queue/series of samples one timestep at a time. After each timestep, an Event broker event, ServerDataChanged, is fired to notify all consumers that they should check for updated data by sampling all of the items they monitor. When a Logger, or an OPC UA server client-sampler in the Hive UA server, receives such an event, it might sample/read hundreds of thousands of items, even if only a few items were actually updated.

For example, when just a few hundred out of a million items are updated with a queued series of 30 samples each, all internal consumers (Logger, External items, UA server client-sampler, AlarmArea, …) will run 30 sample/read loops over a million items, in a closed loop, to check for updated data samples. This is unnecessary and has a negative impact on the APIS Hive dataflow performance.
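A rough back-of-the-envelope comparison of the two approaches can be sketched in Python. The figures are illustrative assumptions only: 300 updated items stands in for "a few hundred", and four consumers stands in for the Logger, External items, UA server client-sampler and AlarmArea. The function names are hypothetical and no real APIS measurements are implied.

```python
def sampling_reads(total_items, queue_length, consumers):
    """Item reads when every consumer scans all items once per timestep."""
    return total_items * queue_length * consumers

def data_push_samples(updated_items, queue_length, consumers):
    """Samples handled when every consumer receives only the updated samples."""
    return updated_items * queue_length * consumers

total_items = 1_000_000   # items monitored by each consumer (assumed)
updated_items = 300       # items that actually changed (assumed)
queue_length = 30         # samples per updated item in one publish
consumers = 4             # assumed number of internal consumers

old_way = sampling_reads(total_items, queue_length, consumers)
new_way = data_push_samples(updated_items, queue_length, consumers)

print(f"sampling:  {old_way:,} item reads")
print(f"data push: {new_way:,} samples handled")
```

Under these assumptions the sampling approach performs 120,000,000 item reads, while a package-based transfer only has to handle 36,000 samples.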

To remedy this, a new mechanism, Data Push, has been developed.

The new Data Push data transfer

The new way of transferring data internally sends the updated data samples as a package, attached as a parameter to an APIS Hive Event broker event.

For now, there is one natural source of such data packages: data received by the ApisOpcUaBee UA client from any OPC UA server.

The ApisOpcUaBee has therefore been given a new Event broker event: ServerDataChanged_DataPush.

To consume this new event with its data parameter, we also need new Event broker commands where appropriate.

The following new events have been implemented:

| Event | Description |
| --- | --- |
| In the ApisOpcUaBee client; ServerDataChanged_DataPush | This event is fired when the UA client receives data from the UA server. The items and samples in the data push package are the ones received from the server. |
| In any APIS module having External Items; ExternalItemsHandled_DataPush | This event is fired after the module has executed a HandleExternalItems_DataPush command. The data push package of this ExternalItemsHandled_DataPush event contains all the resulting VQTs (Function items, ordinary external item transfer, etc.) from the HandleExternalItems_DataPush command. On this ExternalItemsHandled_DataPush event, one can hook any \_DataPush command(s) (Log; Scan; UaServerUpdateMonitorItems; HandleExternalItems) to chain a path of execution with data transferred alongside. |

The following new commands have been implemented:

| Command | Description |
| --- | --- |
| In the Hive UA server; UaServerUpdateMonitorItems_DataPush | This command ensures that the samples in the data push package are transferred to any UA clients that subscribe to/monitor the corresponding items. |
| In any APIS module having External Items; HandleExternalItems_DataPush | This command ensures that all samples for all items in the data push package are applied/used in the external item manager, including in services such as Data Validation, Ext Items Transfer Control, etc. |
| In the ApisLoggerBee; Log_DataPush | This command ensures that all items and samples in the data push package are stored to the HoneyStore database where applicable. |
| In the ApisAlarmAreaBee; Scan_DataPush | This command ensures that all items and samples in the data push package are used for alarm evaluation where applicable. |

Finally, it is worth mentioning that the new data push Event broker event and commands can be used together with the old-fashioned way; i.e. the OPC UA ServerDataChanged event is still fired the appropriate number of times, alongside a single ServerDataChanged_DataPush event.
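The idea of chaining \_DataPush commands on \_DataPush events can be illustrated with a toy Python model. This is a sketch only, not the APIS Event broker API: the `EventBroker` class, the command functions and the `Func.` item prefix are hypothetical stand-ins, only the event and command names come from the tables above, and a package is modelled simply as a dictionary of (timestamp, value) queues per item with quality omitted.

```python
from collections import defaultdict

class EventBroker:
    """Toy stand-in for an event broker: maps event names to command callables."""

    def __init__(self):
        self._hooks = defaultdict(list)

    def hook(self, event, command):
        self._hooks[event].append(command)

    def fire(self, event, package):
        # The data push package travels with the event to every hooked command.
        for command in self._hooks[event]:
            command(package)

broker = EventBroker()
handled = []  # records (command name, number of samples received)

def count_samples(package):
    return sum(len(queue) for queue in package.values())

def log_data_push(package):
    # Stand-in for Log_DataPush: would store the samples.
    handled.append(("Log_DataPush", count_samples(package)))

def handle_external_items_data_push(package):
    # Stand-in for HandleExternalItems_DataPush: applies the samples, then
    # fires ExternalItemsHandled_DataPush with the results (here: values doubled).
    handled.append(("HandleExternalItems_DataPush", count_samples(package)))
    result = {f"Func.{item}": [(ts, 2 * value) for ts, value in queue]
              for item, queue in package.items()}
    broker.fire("ExternalItemsHandled_DataPush", result)

def scan_data_push(package):
    # Stand-in for Scan_DataPush: would evaluate alarms on the samples.
    handled.append(("Scan_DataPush", count_samples(package)))

broker.hook("ServerDataChanged_DataPush", log_data_push)
broker.hook("ServerDataChanged_DataPush", handle_external_items_data_push)
broker.hook("ExternalItemsHandled_DataPush", scan_data_push)

# One package with 5 samples for one item, fired once.
package = {"Item1": [(t, float(t)) for t in range(1, 6)]}
broker.fire("ServerDataChanged_DataPush", package)
print(handled)
```

In this model a single event carries all five samples through the whole chain, so no consumer has to scan items that are not in the package.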

Example configuration

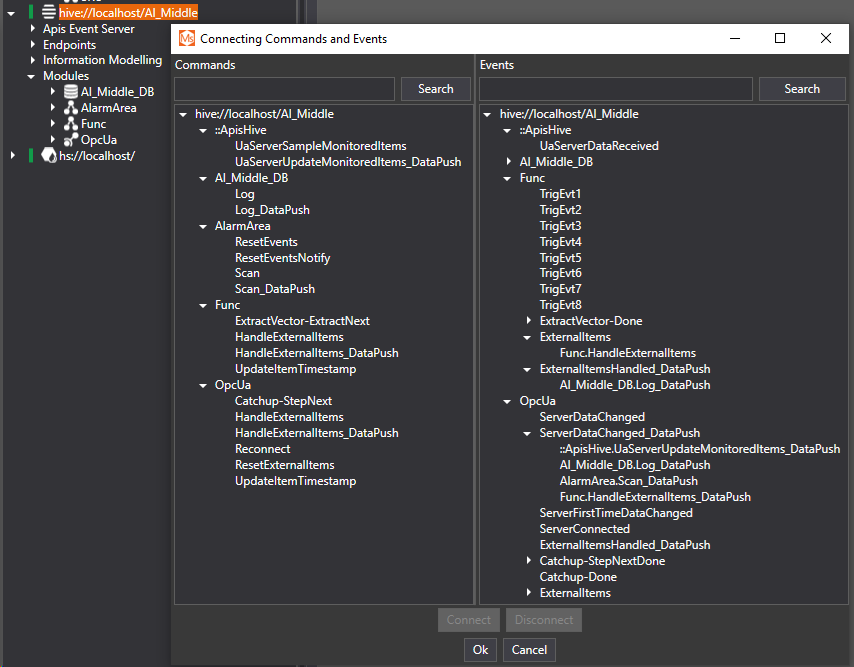

Consider the two Hive instances below; AI_Middle and AI_Top.

AI_Middle

- OpcUa; a UA client connected to any OPC UA server, here using a Sampling interval of 1 second and a Publish interval of 5 seconds to force data push packages of 5 samples per item per package.
- AI_Middle_DB; a logger bee storing data to HoneyStore as eventbased trend types.
- AlarmArea; an alarm module monitoring some of the items from the UA server.
- Func; a Worker bee running Function items on some of the items from the UA server.

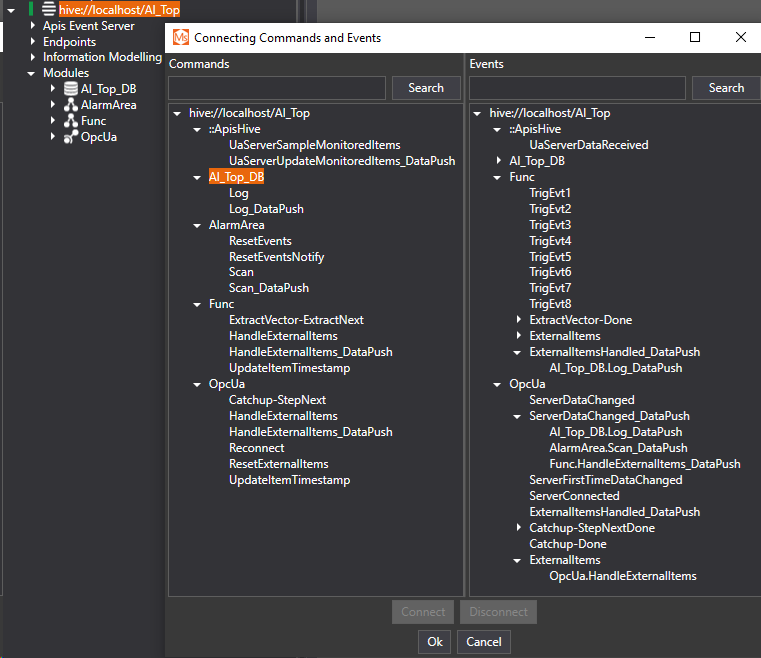

AI_Top

- OpcUa; a UA client connected to the OPC UA server of Hive instance AI_Middle.
- AI_Top_DB; a logger bee storing data to HoneyStore as eventbased trend types.
- AlarmArea; an alarm module monitoring some of the items from the UA server.
- Func; a Worker bee running Function items on some of the items from the UA server.

Below, the Event broker configuration for both instances is shown.

AI_Middle event broker configuration:

AI_Top event broker configuration:

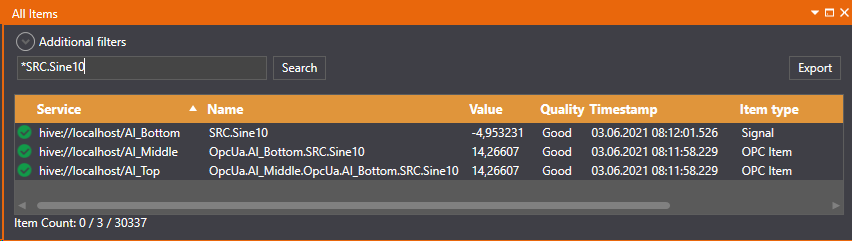

Here, a snapshot of the same items in the source, Middle and Top instances is shown in a real-time list in AMS.

Note that the timestamps upstream will update rapidly at the arrival of every package, and will typically be delayed by up to the same number of seconds as the publishing interval.