Introduction to Apis Foundation

Thank you for using Apis Foundation from Prediktor AS. Apis is Prediktor’s real-time industrial software platform. Apis is component-based, with each component providing a set of functions. It has been used in over 600 installations, mostly in mission-critical applications in the maritime, manufacturing, and oil & gas drilling and production industries.

We hope you find Apis Foundation useful. Any questions and suggestions are welcome via email: support@prediktor.no

What is Apis Foundation?

Apis Foundation is a real-time industrial software platform that incorporates many industry standards such as OPC and OPC UA. Apis Foundation is fully component-based and can therefore be integrated in a myriad of ways with our partners’ software. You can choose which components are required and avoid paying for unneeded functionality.

Components include: tools to collect data from external systems, sensors and equipment; a powerful time-series database that serves as a real-time Historian; and tools to expose data via OPC or OPC UA. Using these components, a number of different applications can be built including OPC UA-based Historians, OPC DA/HDA servers, OPC Hubs, OPC UA wrappers for transferring real-time data over the internet, and much, much more.

Apis Foundation includes the following tools and services:

Apis Services:

- Apis Hive is a multipurpose real-time data communication hub and container for Apis Modules. Hive is an executable that hosts data access, processing, and logging components in one efficient real-time domain.

- Apis HoneyStore is Prediktor's high-performance time-series database.

- Apis Chronical is Prediktor's high-performance event server and historian.

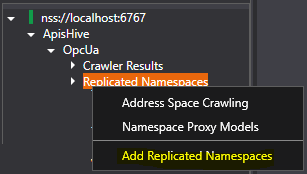

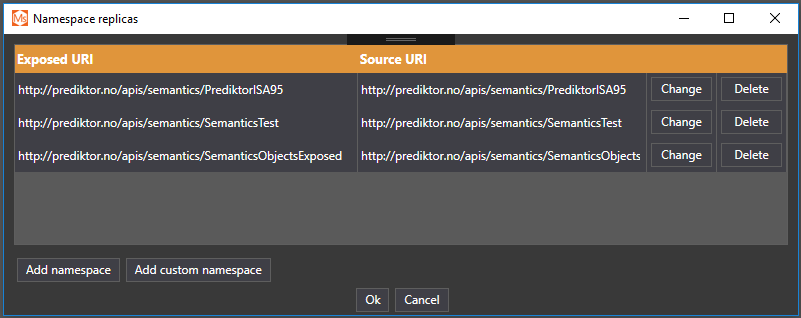

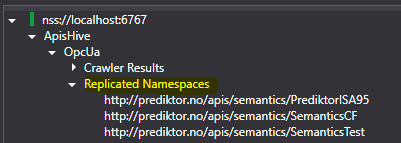

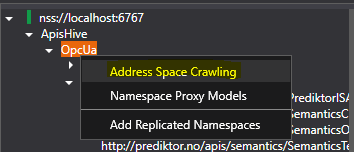

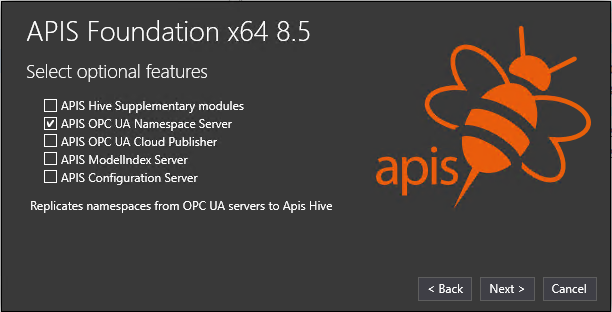

- Apis OpcUa Namespace Replication Service is a service for replicating namespaces from OPC UA servers to Apis Hive.

- Apis Backup Agent is a service responsible for executing backup and restore jobs.

Apis Tools:

- Apis Management Studio is the main engineering interface for configuring Apis services.

- Apis Bare is a tool for manually backing up and restoring configuration and data for your Apis applications.

Where to start?

If you're new to Apis, we suggest you read the How To Guides. They cover the most common tasks and concepts when using the software.

Overview of Apis Foundation

The functionality of Apis can be organized into the following groups:

- Connect: robust, high-frequency, and high-capacity connection to real-time data sources.

- Store: high-frequency, high-capacity, highly distributable, and scalable storage of real-time data.

- Process: real-time, deterministic processing of collected values to create aggregated information.

- Visualize: providing useful information to several groups of stakeholders simultaneously.

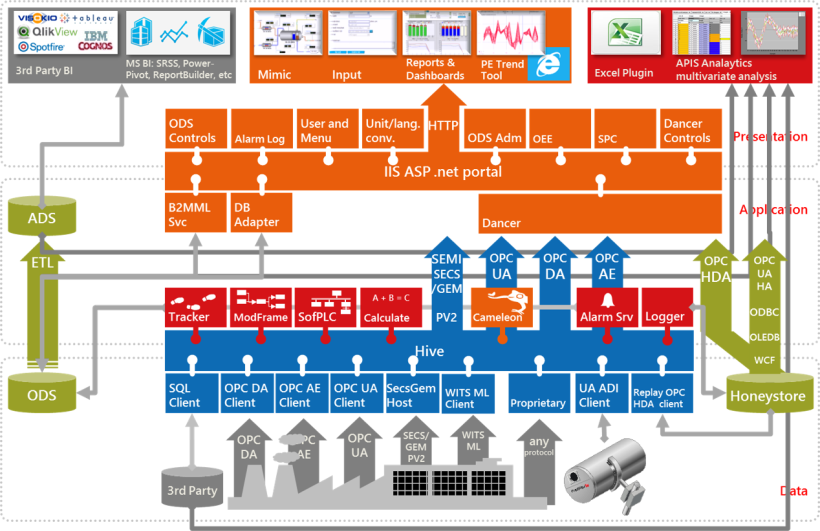

The core component of our connect functionality is Apis Hive. Hive is the executable that hosts data access, processing, and logging components in one efficient, real-time computational domain.

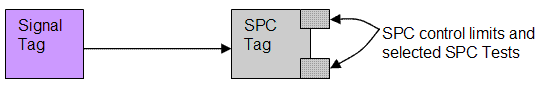

Data access components acquire data through both open standards and proprietary interfaces. Inside Hive, this data is combined into one homogeneous representation. There it can be refined by different processing components, logged to data storage, or exposed to external clients through industry-standard interfaces. The outcome of data processing can also be written back to the access components.

The system is component-based, and most of the components are optional. Customers can therefore choose which components are required and avoid paying for unneeded functionality. The figure below illustrates the design of the Apis platform.

How To Guides

The How To Guides will give you an introduction to the most common tasks and concepts when using Apis.

- The Getting Started section will get you familiar with using Apis Management Studio and the concepts of Apis Hive, Modules and Items.

- The Connect section covers how to acquire data using the most common data protocols.

- The Store section gives an introduction to storing time series data with Apis HoneyStore.

- The Process section gives an introduction to adding alarms, data validation, and email notifications.

- The Contextualization section covers construction and management of information models.

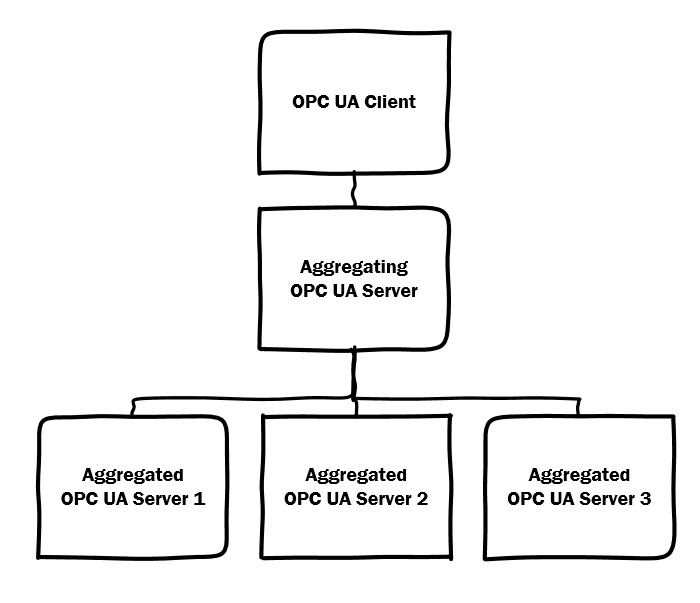

- The Aggregating Server section covers federating and replicating namespaces from remote servers.

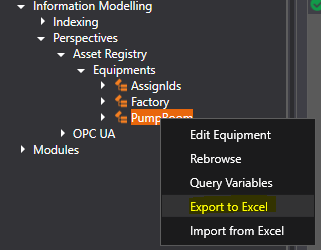

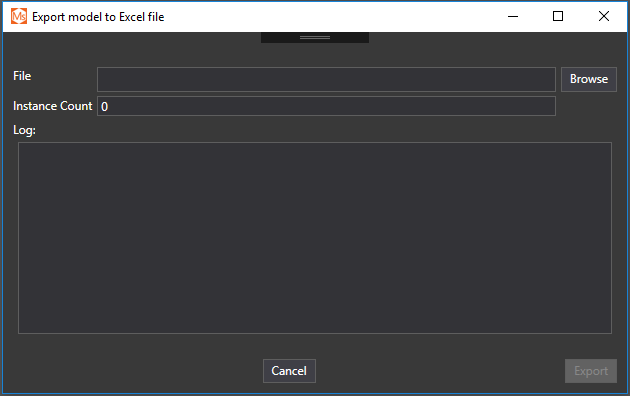

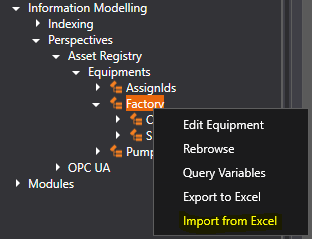

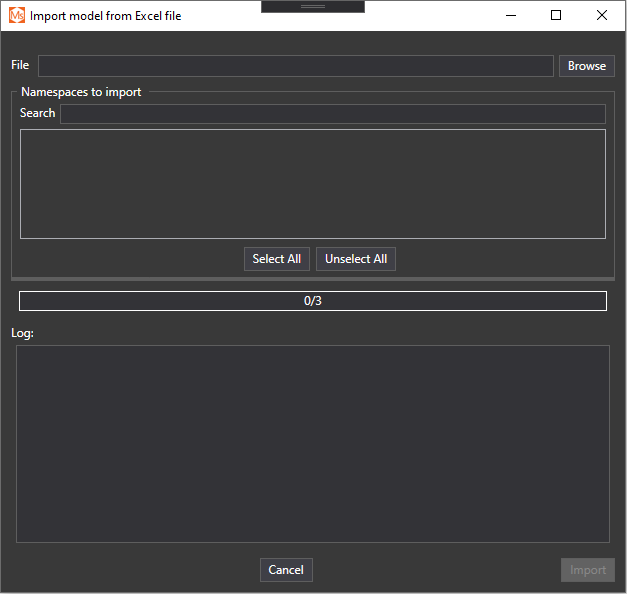

- The Configuration Management section gives an introduction to different methods for importing and exporting configurations.

- The Apis High Availability section covers setup of redundant server clusters.

- The Query variables section covers configuration and use of Apis Model Index Service.

- The Disaster Recovery section covers how to back up and restore configurations and history data.

- The Security section covers how to enable access control for Apis Hive.

- The Troubleshooting section covers various troubleshooting guides, post-configuration tasks, and how-tos.

- The Surveillance section covers various methods to monitor source activity.

Getting Started

This section explains how to install a runtime license and familiarizes you with Apis Management Studio and the concepts of Apis Hive, Modules and Items. Please pick a topic from the menu.

Install a Runtime License

The Apis software will run in demo mode for one hour without a license key. To obtain a valid license, please contact Prediktor at either:

Email: support@prediktor.no

Phone: +47 95 40 80 00

To request a license

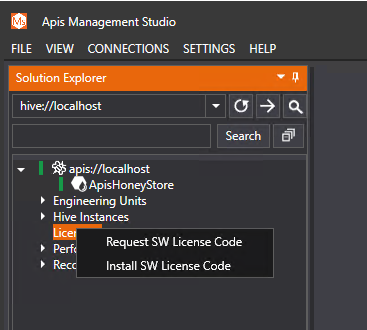

- Open Apis Management Studio and expand "apis://localhost" -> "Licensing" -> "Request SW License Code":

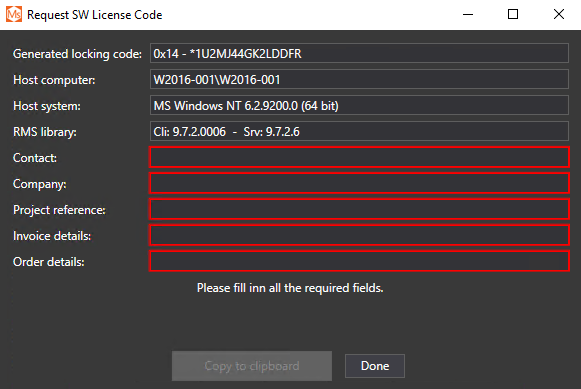

- Fill in ProjectRef and Invoice Details in the popup window.

- Click "Copy to Clipboard", paste the result into an email, and send it to Prediktor:

Email: support@prediktor.no

To install a license key

When you have received the license key (which is locked to the MAC address and the hard disk ID of the computer):

- Open Apis Management Studio.

- Expand "apis://localhost" -> "Licensing" -> "Install SW License Code". A dialog box opens where you can browse to the license file and open it. This completes the license key installation.

Restart Apis Hive and Apis HoneyStore

When the license key is installed, Apis Hive and Apis HoneyStore have to be restarted (or started if they're not running).

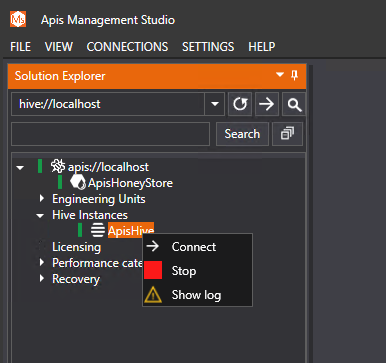

- Click "ApisHive" -> "Stop", and then "ApisHive" -> "Start", to restart:

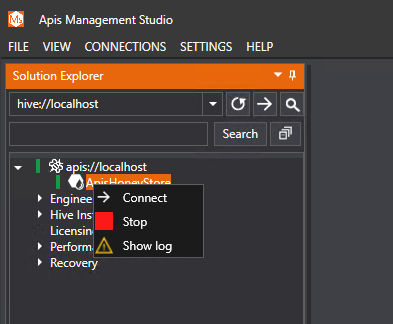

- Click "ApisHoneyStore" -> "Stop", and then "ApisHoneyStore" -> "Start", to restart:

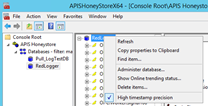

Start Apis Hive

- Open Apis Management Studio from the APIS menu under the Windows Start Menu.

- From the Hive Instances node, go to the desired ApisHive instance, right-click it and select Start.

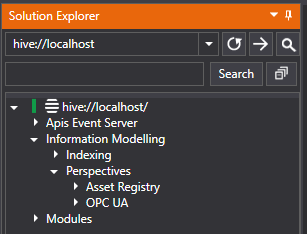

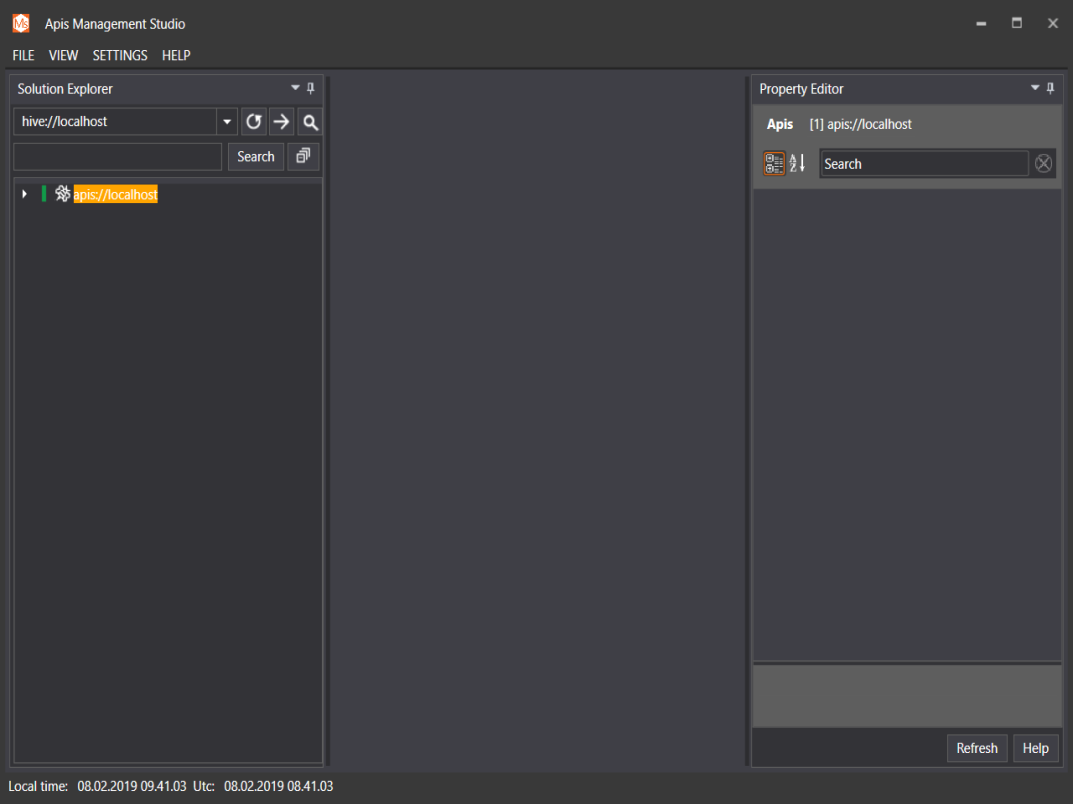

Open Apis Management Studio

- Open Apis Management Studio from the APIS menu under the Windows Start Menu.

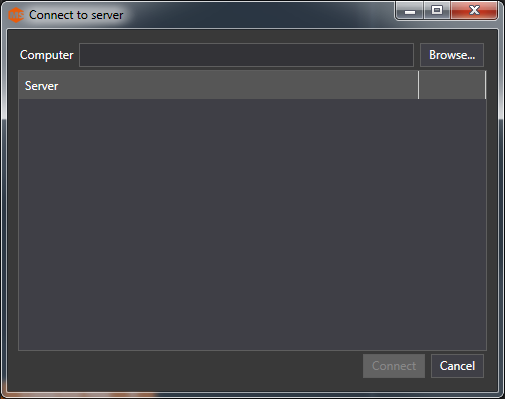

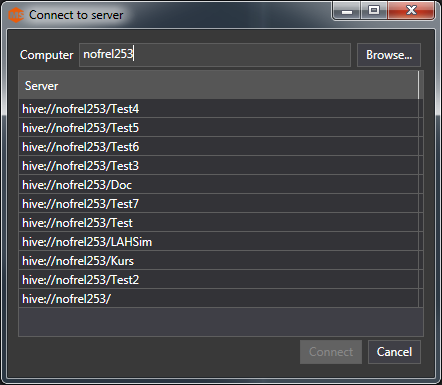

You can then browse and connect to Apis services. Note that Apis Management Studio can only connect to running Apis services, not stopped ones.

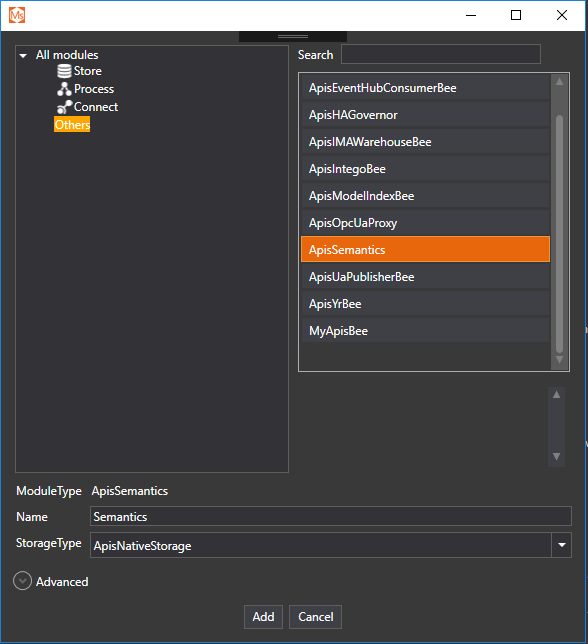

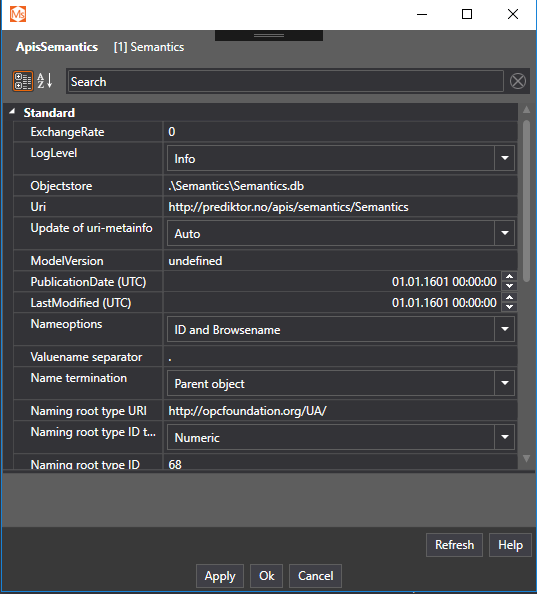

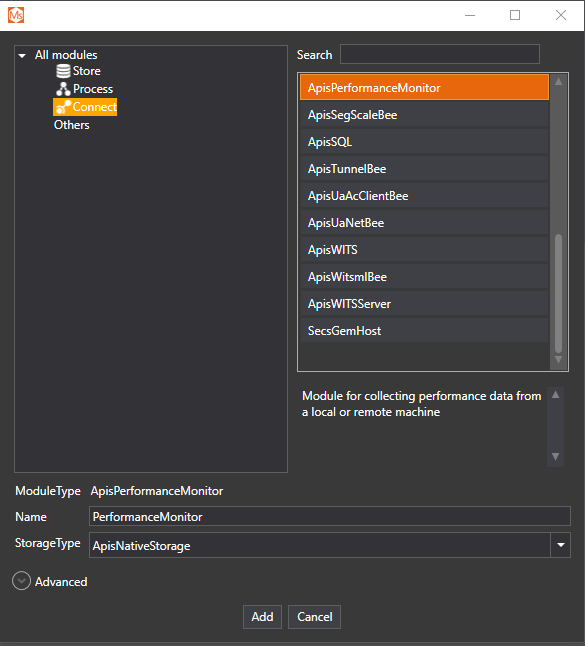

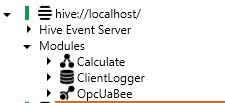

Adding a Module

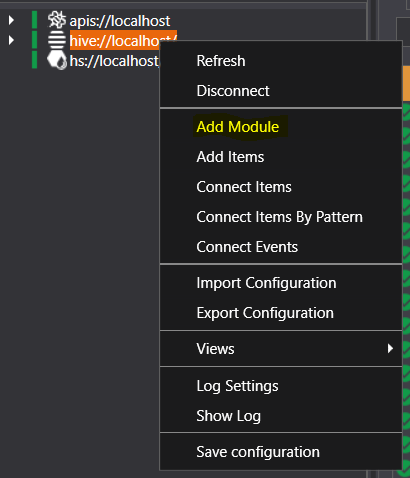

In this example, we'll use Apis Management Studio to add a module of type ApisWorkerBee to the Apis Hive environment. The procedure is similar for any type of module you want to add.

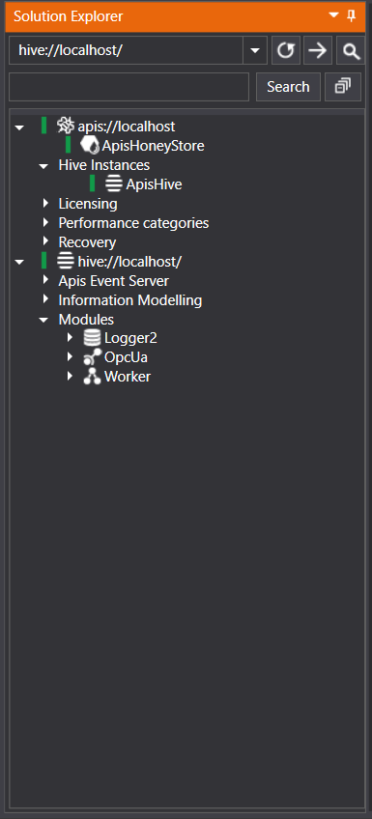

Open Apis Management Studio and right-click on the "hive" instance in Solution Explorer, then select "Add Module" from the menu.

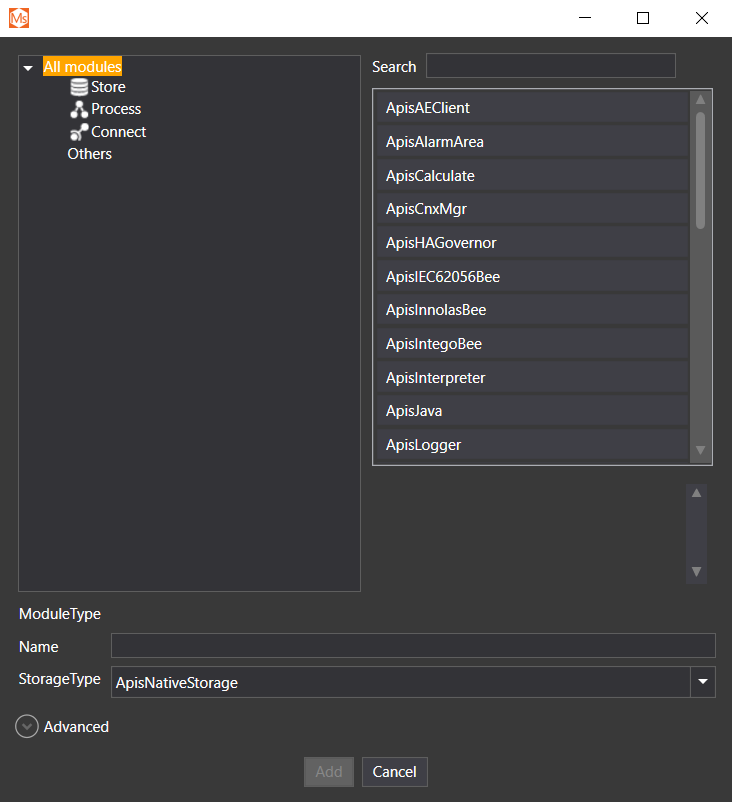

The following window appears:

By selecting a category on the left side, modules of that category will appear on the right side.

Click the Process category and select the Worker module type.

Give the module a name and click Add. A window with the properties of the module appears. Change the desired properties and click OK.

Advanced

Clicking the Advanced button displays three more rows, where you can set the number of modules to add and the start index used when naming them.

Additionally, you can select a Properties template file, so that the module's properties are loaded from a previously saved template (this is optional). This is handy if modules need to have similar properties. You can save templates in the Solution Explorer by selecting Export properties in the context menu of the module and giving the template a name. The new module's properties will then be set to these saved properties.

For example, you could write RTM {0} Worker in the Name field and 4 in the Count field. This will produce 4 modules named: RTM 1 Worker, RTM 2 Worker, RTM 3 Worker, and RTM 4 Worker.

Deleting and Renaming Modules

Deleting modules

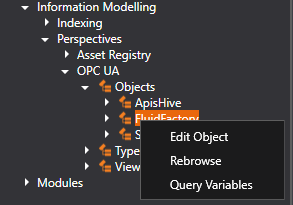

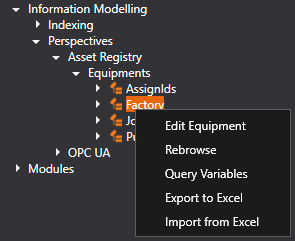

If you want to delete a module from your configuration, select the Module Node in the Solution Explorer. Open the context menu and select Remove.

If any items of this module have been enabled for logging into an Apis HoneyStore database, the corresponding items may be deleted from the database as well. This depends on the ItemDeletion property of the corresponding ApisLoggerBee module.

If you are deleting an ApisLoggerBee module, the database managed by this module may also be deleted, depending on the AutoDeleteDB property of the ApisLoggerBee module you are deleting. If you want to delete the database, make sure this property is set to true; set it to false if you want to keep the database for future use.

Renaming modules

If you want to rename a module in your configuration, select the Module Node in the Solution Explorer. Open the context menu and select "Rename module...", then enter a new, unique name for the module and click OK.

When a module is renamed, the following configuration changes are applied / maintained automatically:

- External item connections.

- Global attributes: the Global attribute is re-registered and renamed according to the new module name, when applicable.

- Event broker connections.

- The Logger Bee automatically renames items currently stored in its HoneyStore database. (Note that the database itself is not renamed, since it can be shared among several modules and there is no one-to-one relationship.)

- The alarm Area name of the module in the Apis Event Server / Chronical.

- If Security / Config Audit trails are enabled, a ModuleRenamed entry will be logged.

We strongly recommend taking a backup before renaming modules, in case the rename operation has unwanted effects. If possible, restart the Hive instance after a module has been renamed and inspect the trace logs for any related issues. Even if you cannot restart the Hive instance, inspecting the trace logs is a good idea.

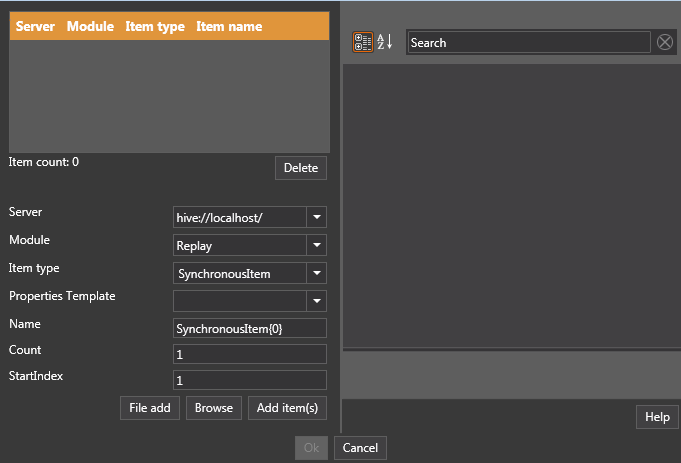

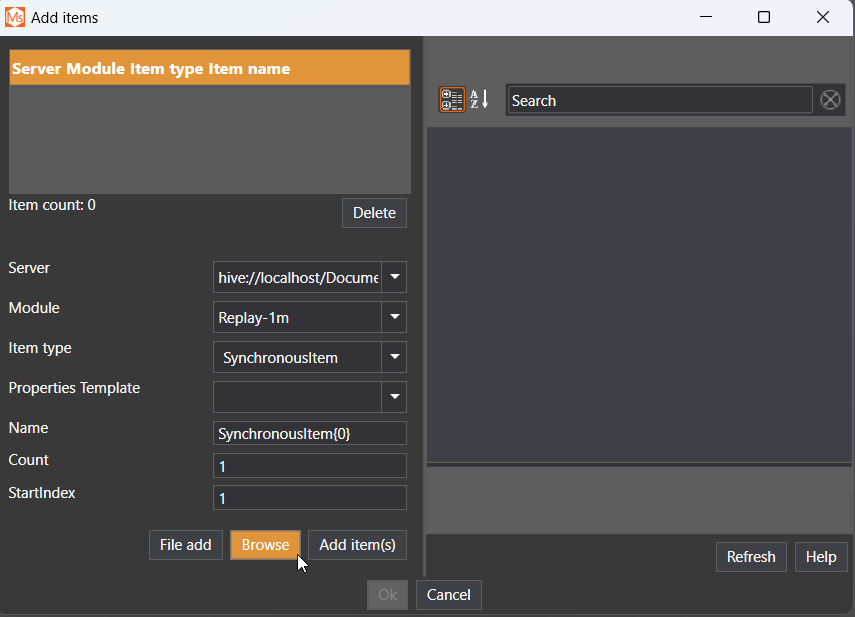

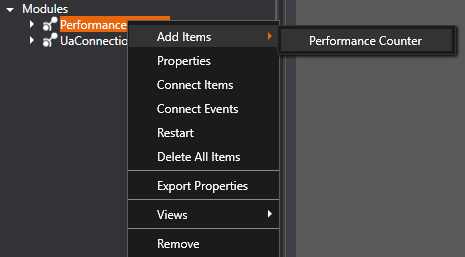

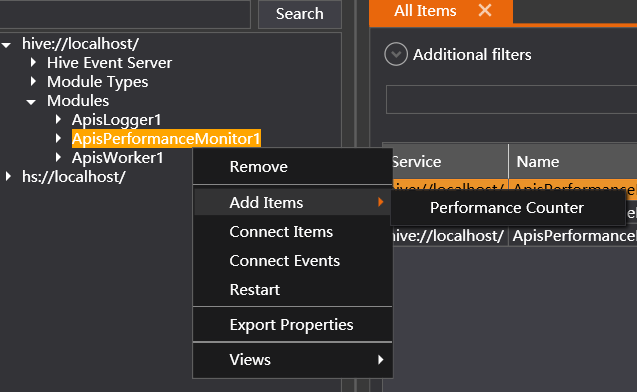

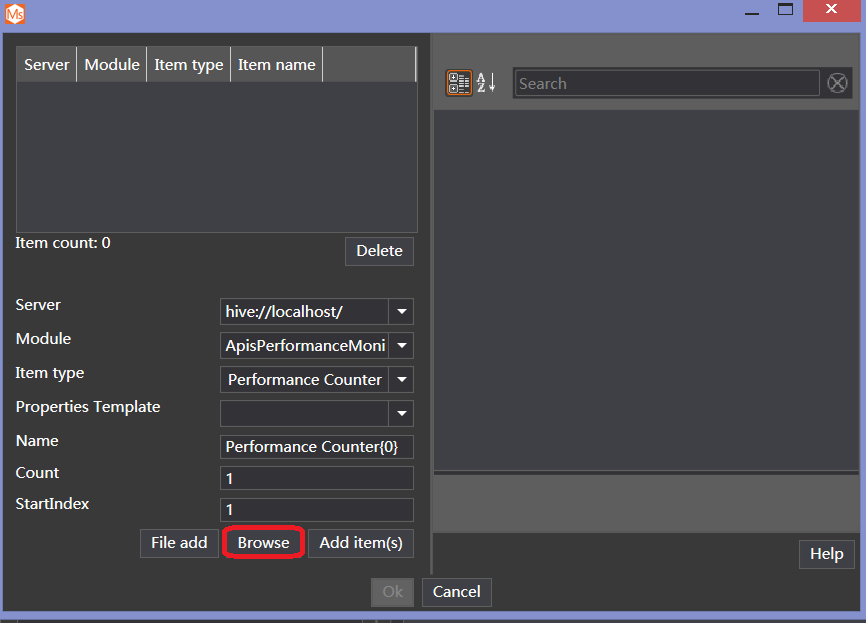

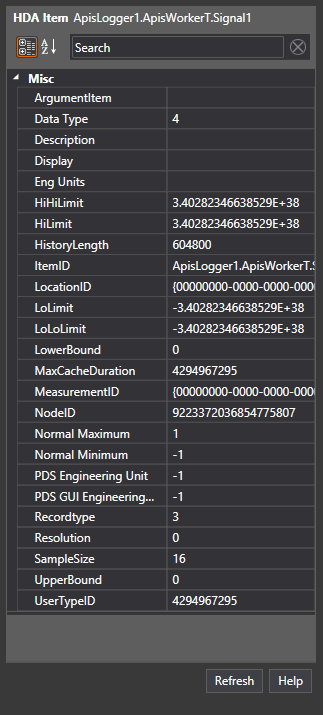

Adding Items

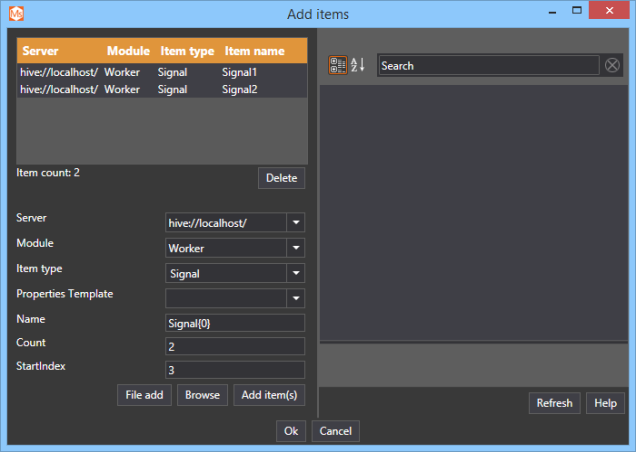

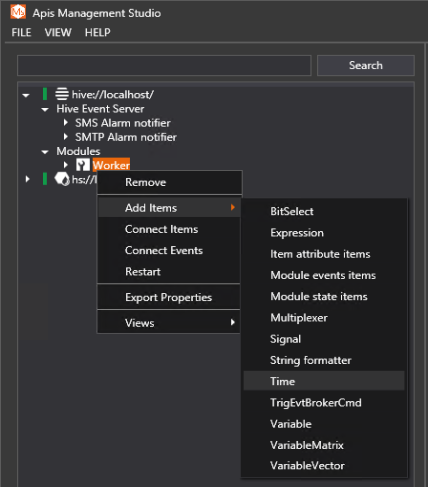

In this example, we'll use Apis Management Studio to add items of type Signal to an ApisWorkerBee module. The procedure is similar for any type of item you want to add.

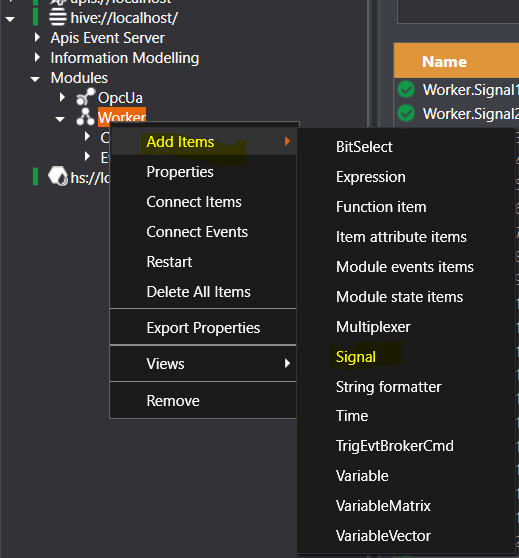

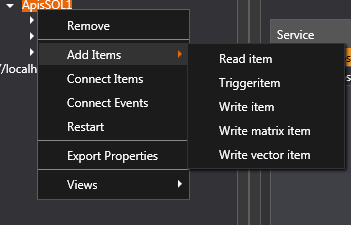

Right-click the "Worker" module instance in Solution Explorer and select "Add Items" -> "Signal" from the menu.

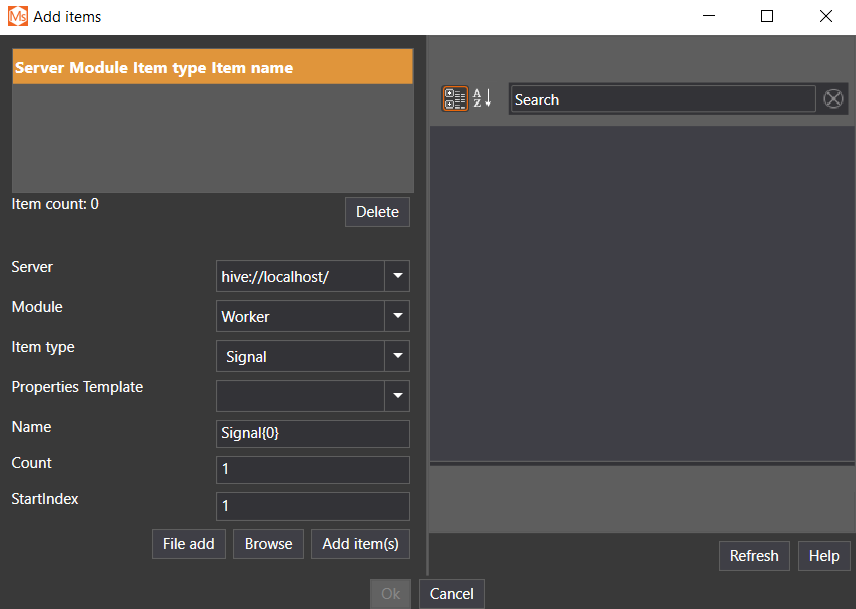

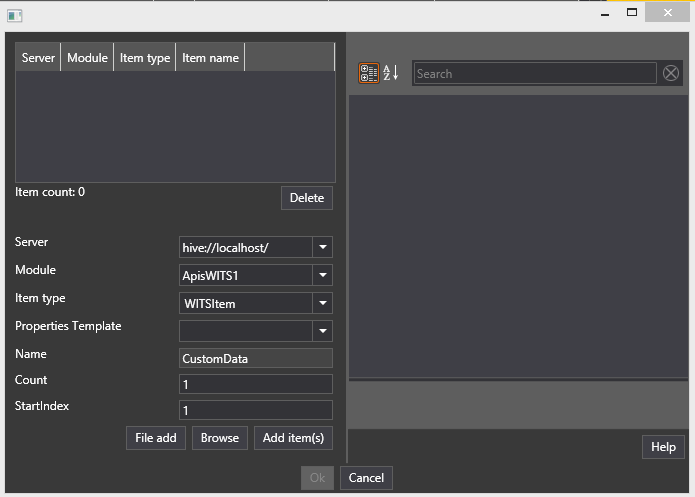

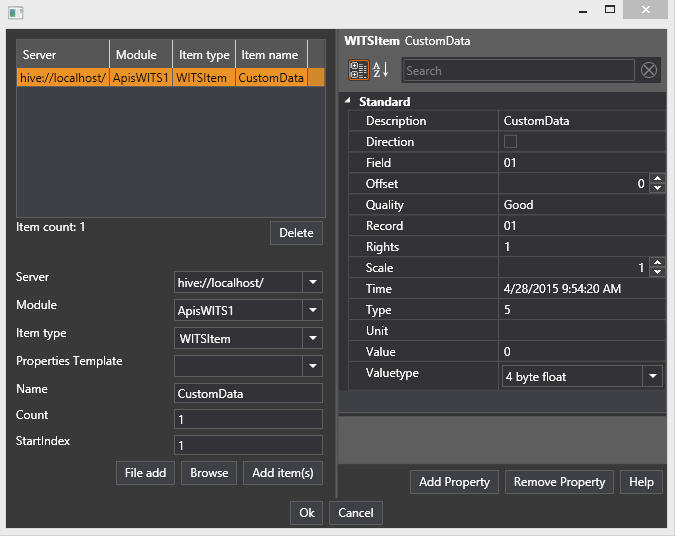

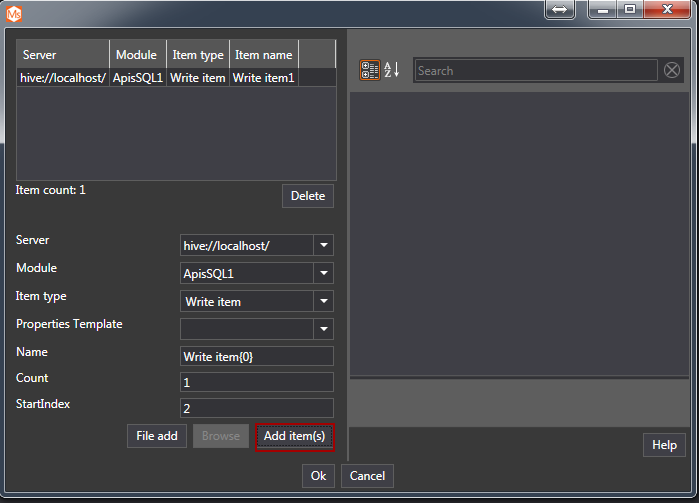

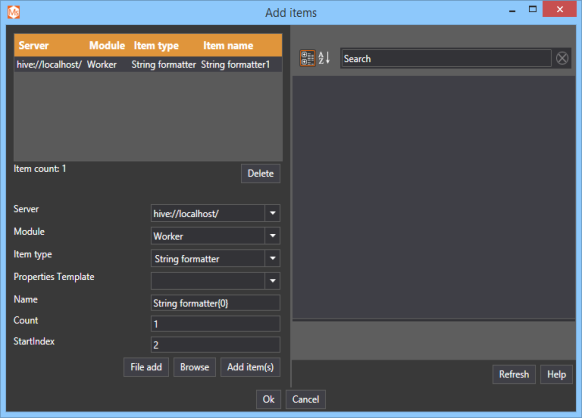

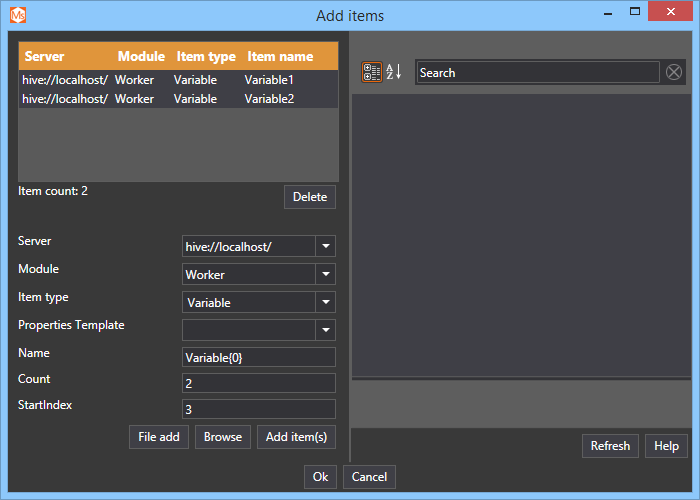

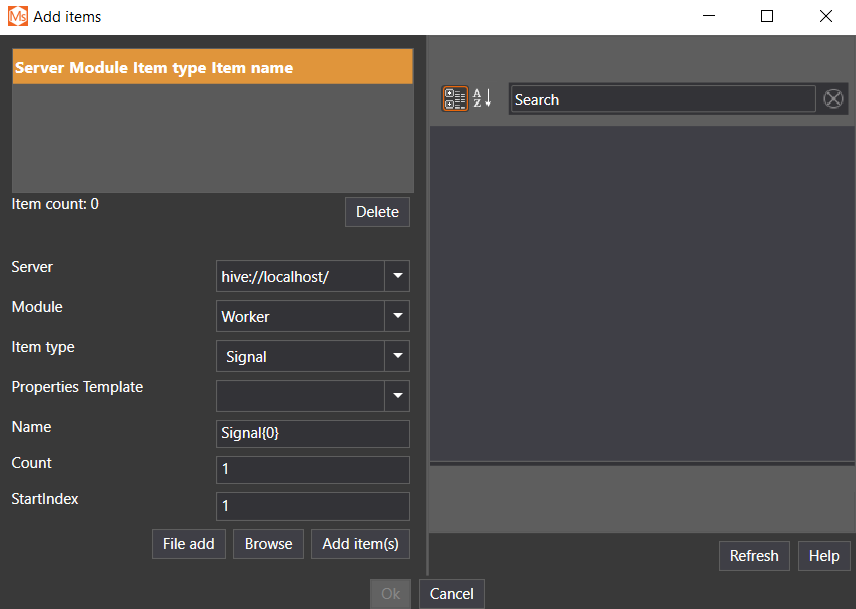

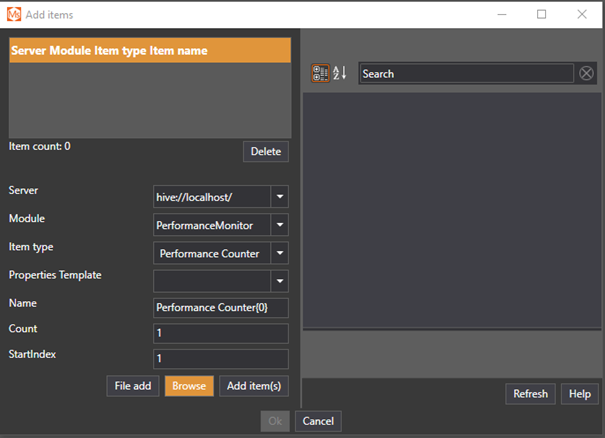

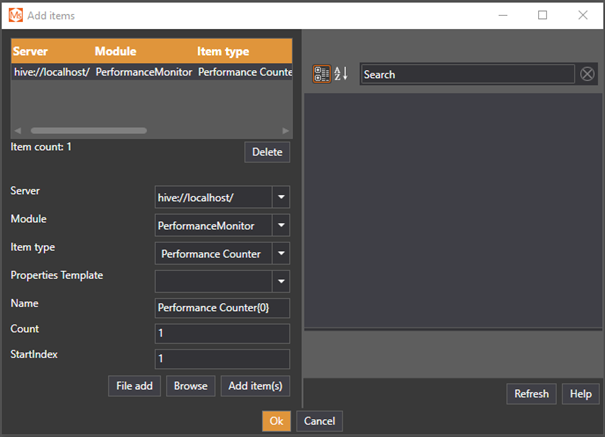

The following window shows up:

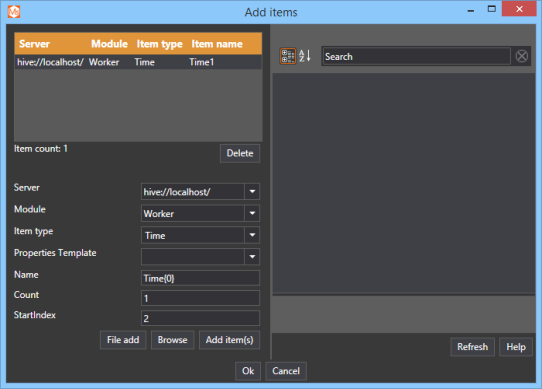

Give the item a name and click Add item(s). You can repeat this to create multiple items. Click OK when finished, and the items will be created in the module.

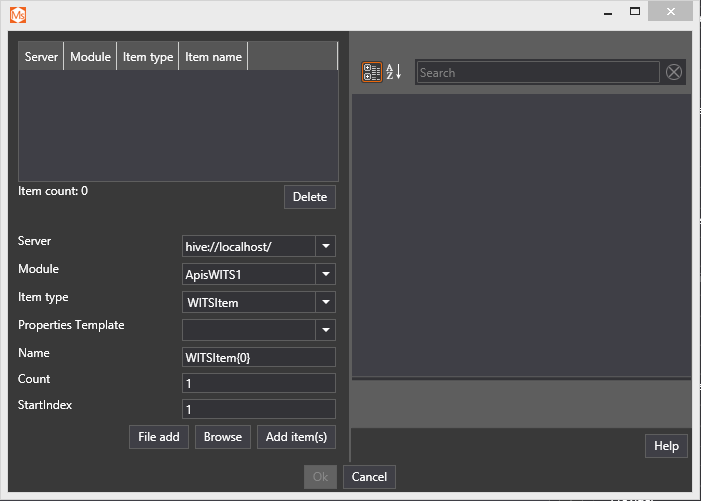

Explanations of the fields in the form

The following fields are used to create items:

1. Server - The hive server to add items to.

2. Module - The module to add items to.

3. Item type - The item type of the item to create. The item types available depend on the module selected.

4. Properties Template - The properties of the item can be loaded from a previously saved template (this is optional). This is handy if items need to have similar properties. You can save templates in the Solution Explorer by selecting Export properties in the context menu of the item and giving the template a name. The new item's properties will then be set to these saved properties.

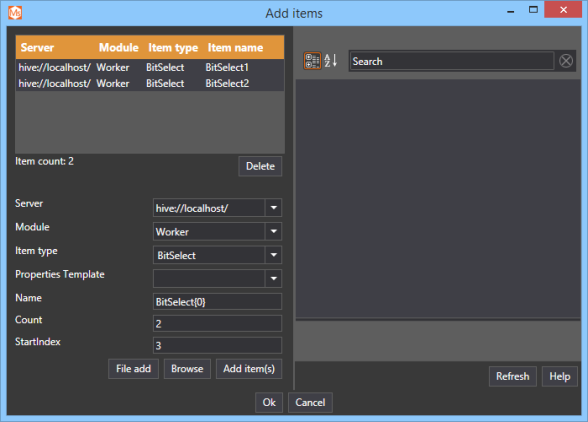

5. Name - Name of the item. If {0} is part of the name, it's used as a placeholder for the index.

6. Count - It's possible to add several items at once by setting the count to the desired number of items. If the count is larger than 1, the names of the items will be the name plus a number that is incremented for each item. {0} can be used as a placeholder for this number.

7. StartIndex - The starting index of the name if the count is > 1.

For example, you could write Well {0} Pressure in the Name field and 4 in the Count field. This will produce 4 items named: Well 1 Pressure, Well 2 Pressure, Well 3 Pressure, and Well 4 Pressure.
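The Name, Count and StartIndex rules can be illustrated with a small T-SQL sketch. It models only the {0}-placeholder case described above. How Apis Management Studio builds names when {0} is absent is not reproduced here. The variable and table names are hypothetical and are not part of Apis.

```sql
-- Hypothetical sketch of the Name/Count/StartIndex naming rule ({0} placeholder case only).
DECLARE @Name       nvarchar(100) = N'Well {0} Pressure';  -- Name field
DECLARE @Count      int           = 4;                      -- Count field
DECLARE @StartIndex int           = 1;                      -- StartIndex field
DECLARE @ItemNames  TABLE (ItemIndex int NOT NULL, ItemName nvarchar(200) NOT NULL);

-- Generate the indexes StartIndex, StartIndex + 1, ..., StartIndex + Count - 1.
WITH Indexes AS (
    SELECT @StartIndex AS n
    UNION ALL
    SELECT n + 1 FROM Indexes WHERE n + 1 < @StartIndex + @Count
)
-- Substitute each index for the {0} placeholder.
INSERT INTO @ItemNames (ItemIndex, ItemName)
SELECT n, REPLACE(@Name, N'{0}', CAST(n AS nvarchar(10)))
FROM Indexes
OPTION (MAXRECURSION 0);

SELECT ItemName FROM @ItemNames ORDER BY ItemIndex;
-- Well 1 Pressure, Well 2 Pressure, Well 3 Pressure, Well 4 Pressure
```

Setting @Name to N'RTM {0} Worker' reproduces the module example in the Adding a Module section. Changing @StartIndex shifts all the numbers by the same amount.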

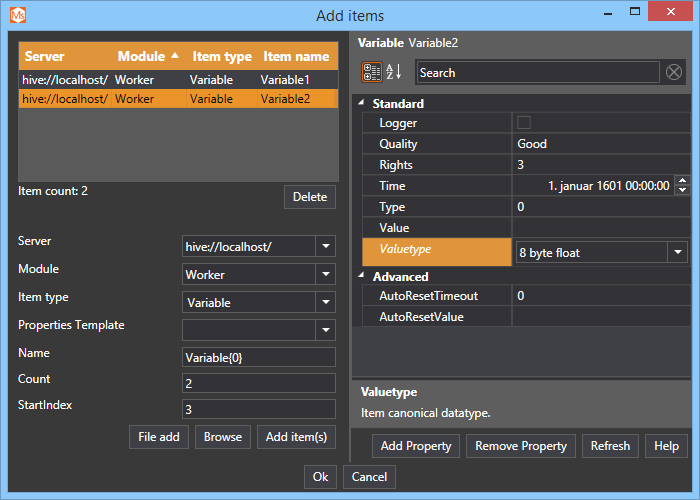

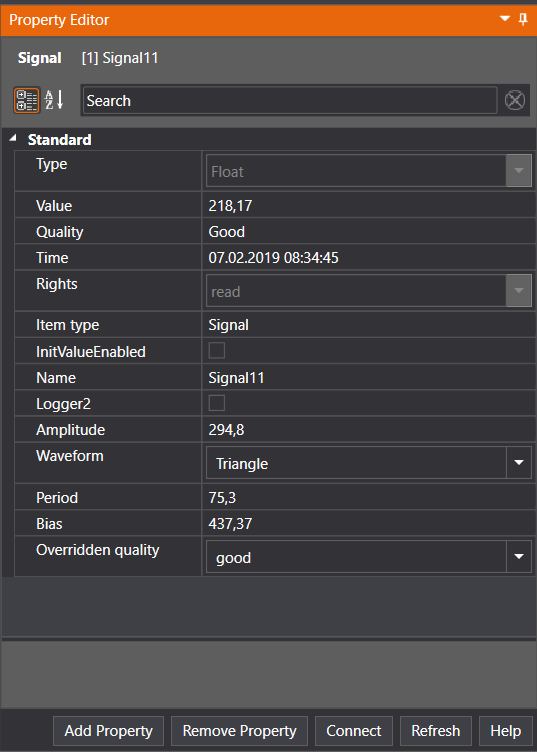

When you click "Add item(s)", the items are created as templates. This means that the items have not actually been added to the module(s) yet; they are created when you click the "Submit" button. Creating them as templates first lets you change their properties before they are actually created: select the items in the list in the upper left corner, and use the property editor on the right-hand side to change their properties.

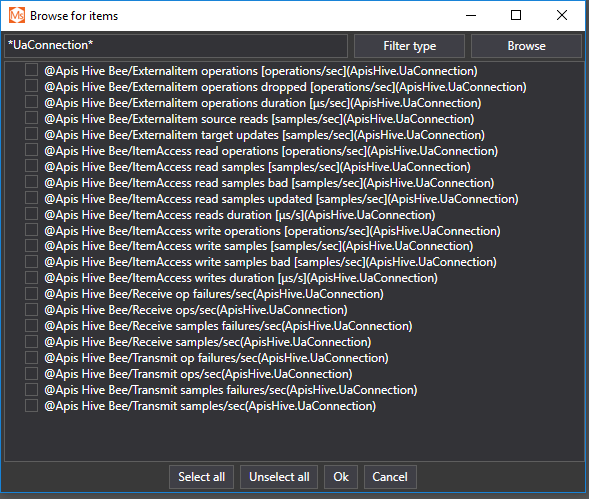

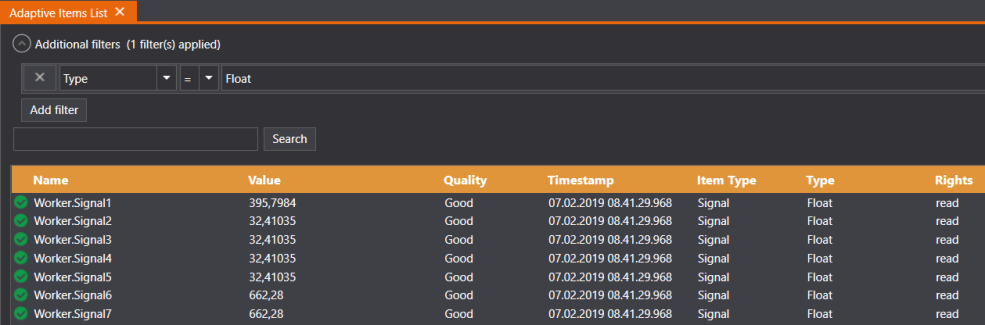

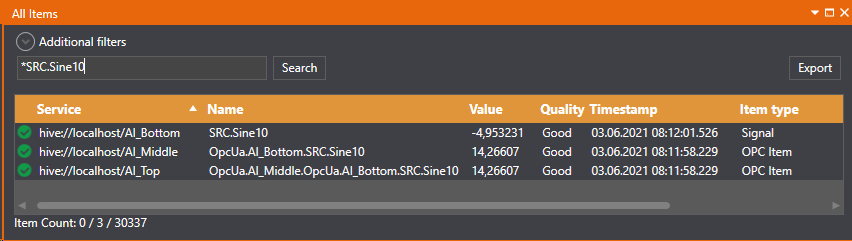

Browsing the namespace

By clicking the browse button after selecting the item type, it is possible to browse for the items the module offers. Not all modules support browsing.

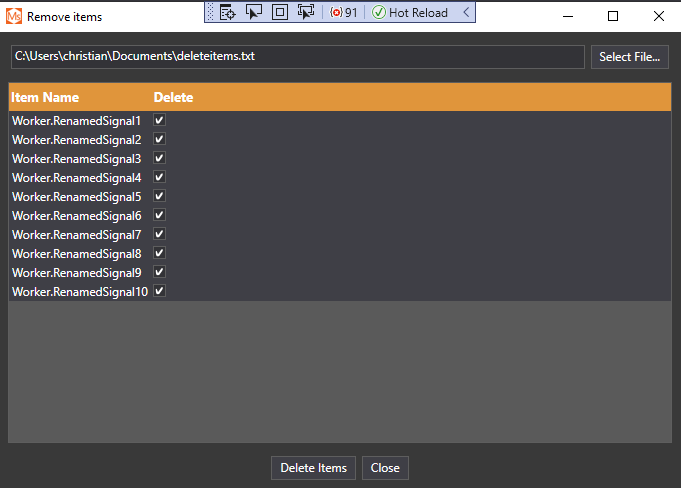

Delete an Item

Items can be deleted by selecting one or more item nodes in the Solution Explorer and selecting "Remove" in the context menu.

In addition, it's possible to delete items from the list views (except for the custom items view). This is done by selecting the items to delete and clicking the "Delete" button.

In both cases, a confirmation message will be displayed.


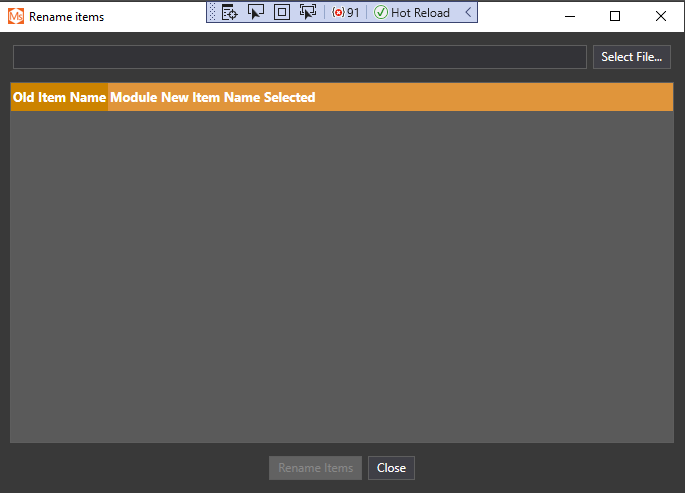

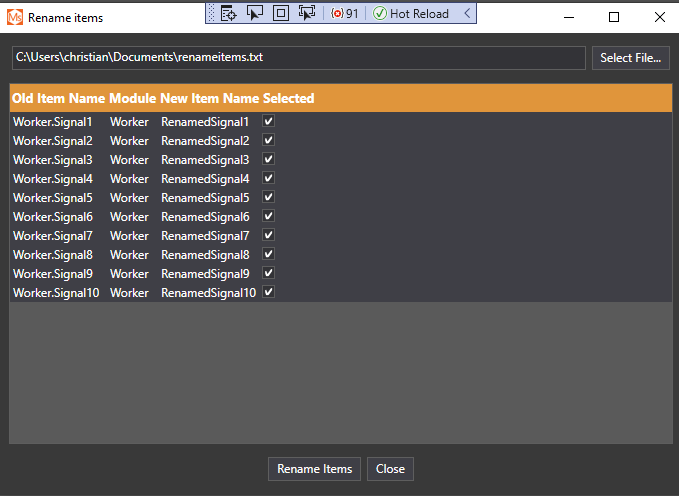

Renaming Items

To rename an item, change its name in the Property Editor.

If the item you are renaming has also been enabled for logging into an Apis HoneyStore database, the item name will also change in the database.

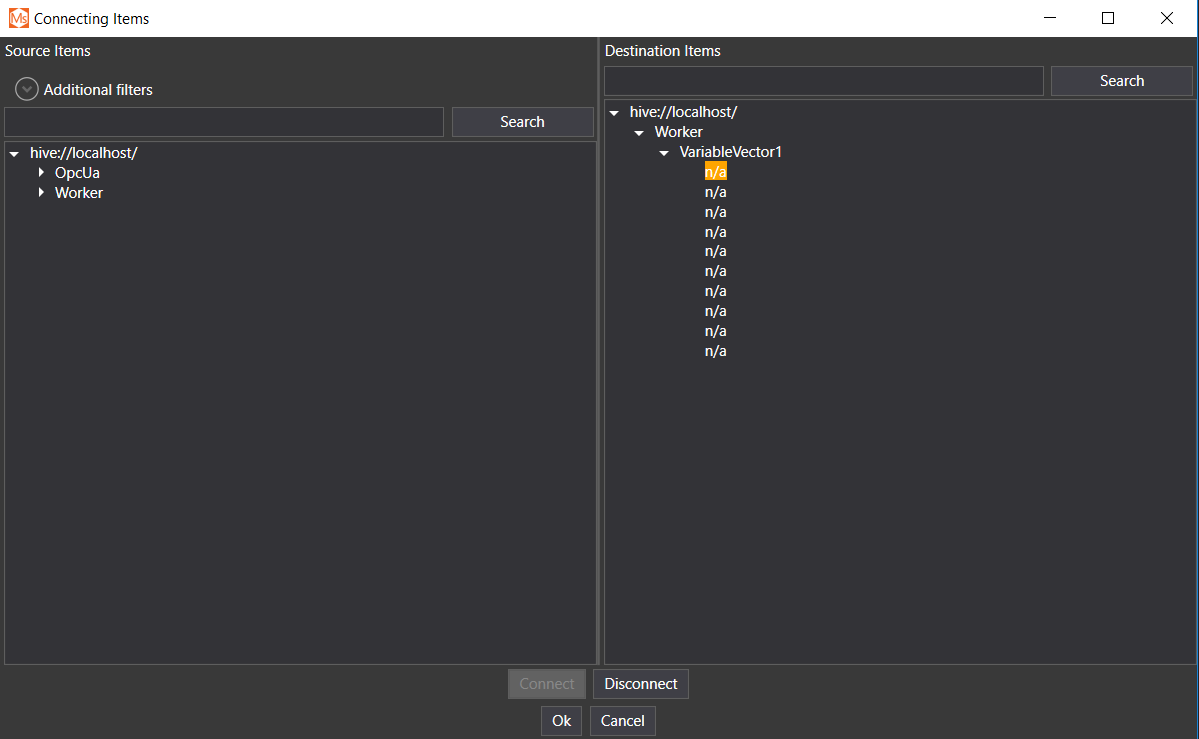

Connecting Items

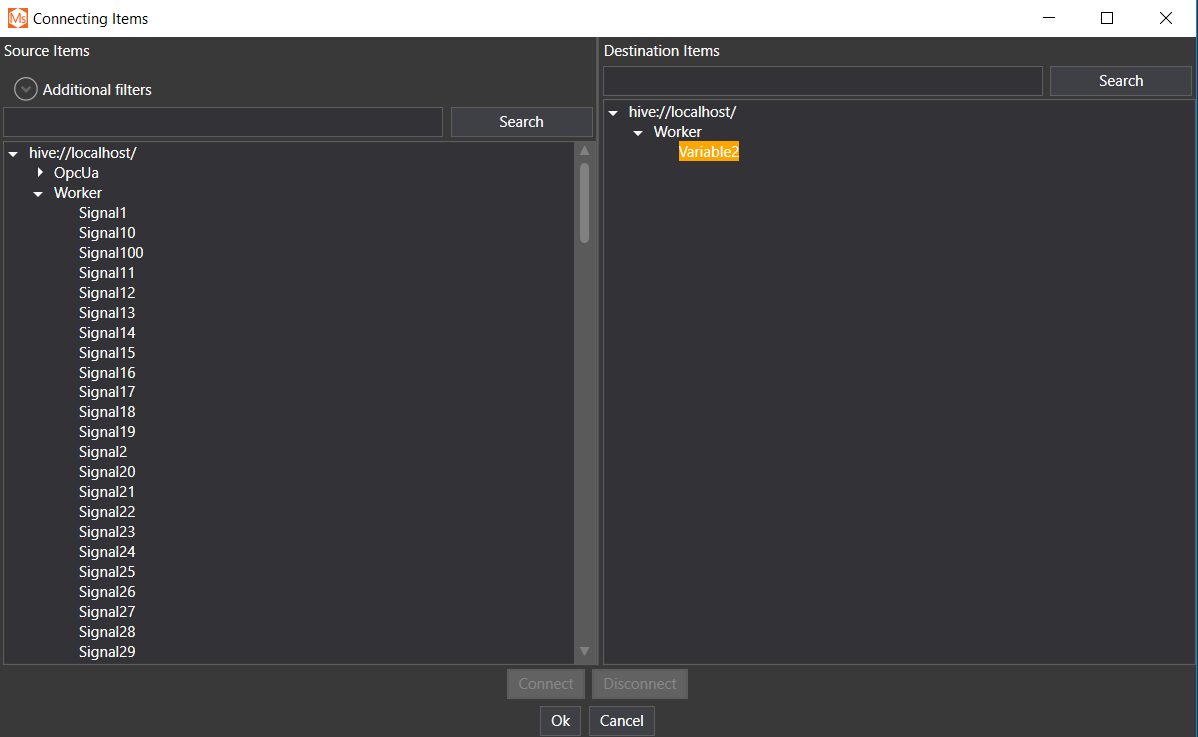

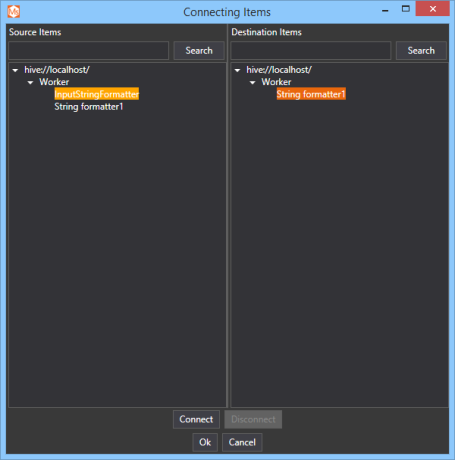

The item connection dialog can be displayed in several ways:

- From the context menu of a Hive node in the Solution Explorer, by selecting: Edit -> Connect items;

- From the context menu of a module node in the Solution Explorer, by selecting Edit -> Connect items;

- From the context menu of an Items view;

- By clicking the "Connect" button in the Property Editor.

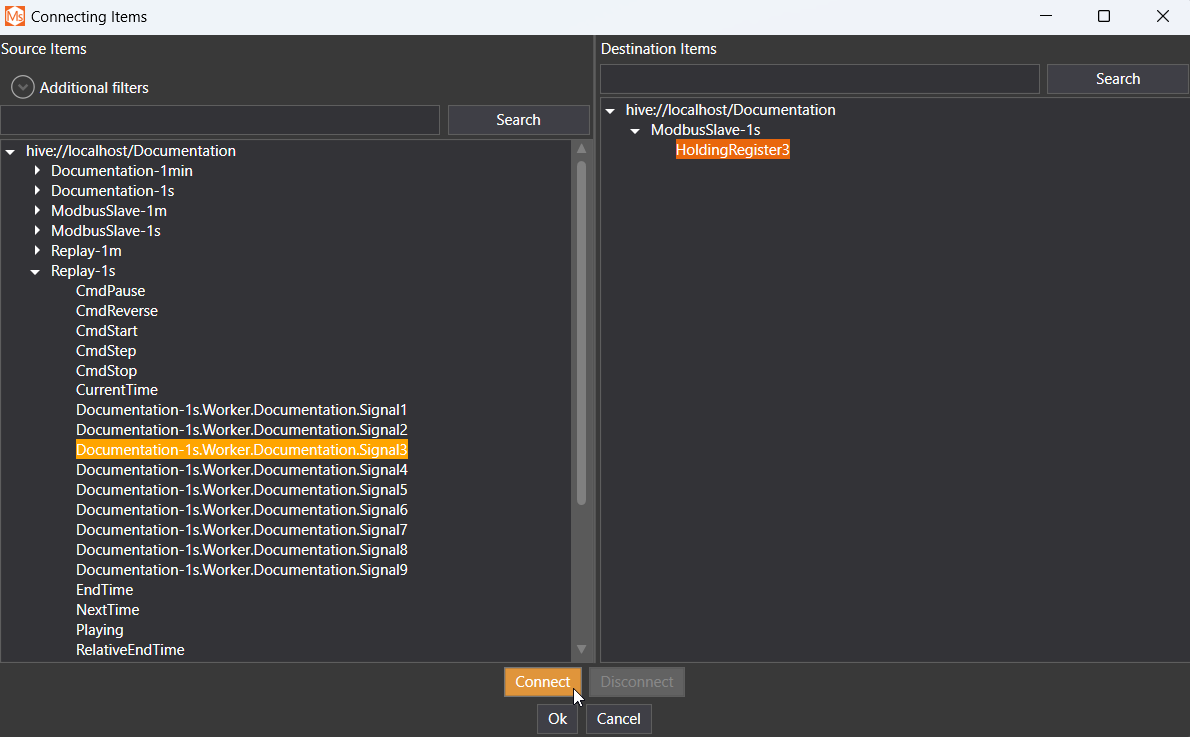

The connection dialog consists of two trees. The tree on the left contains the available sources (i.e. all items for the instance); the tree on the right contains the destination items. Only inputs can be connected in this dialog. To connect, select an item in each tree and click "Connect". To disconnect, select the input you wish to disconnect in the tree on the right and click "Disconnect".

It is also possible to drag items from the source to a destination item.

Vectors and matrices are connected in the same manner, but for each element of the vector/matrix there is a field in which the connected item is displayed. If no item has been connected, "n/a" appears.

The changes to the connections will be performed when you click "Ok".


Export Item Properties

It is possible to export the properties of an item and use them as a template when creating new items. The new item will get the same property values as the item whose properties were exported. Select Export properties in the context menu of the item node; a dialog box opens asking you to enter a name.

You can then enter an easily recognizable name for the Properties Template.

When adding items, all the saved properties templates for the item type are listed in the "Properties Template" combo box. The templates are stored as files in the defaults folder under the program folder of Apis Management Studio. They are grouped by module type and, under the Items folder, further grouped by item type. The files can be copied to other installations of AMS.

Export Module Properties

It's possible to export the properties of a module. These can then be used as a template when creating a new module, and the new module will get the same property values as the exported module. Select "Export properties" in the context menu of the module; a dialog box opens where you are asked to give the template a name.

You can then enter an easily recognizable name for the Properties Template.

When adding modules, all the saved properties templates for the module type are listed in the "Properties Template" combo box. The templates are stored as files in the defaults folder under the program folder of Apis Management Studio. They are grouped by module type and stored in the folder "ModuleProperties". The files can be copied to other installations of AMS.

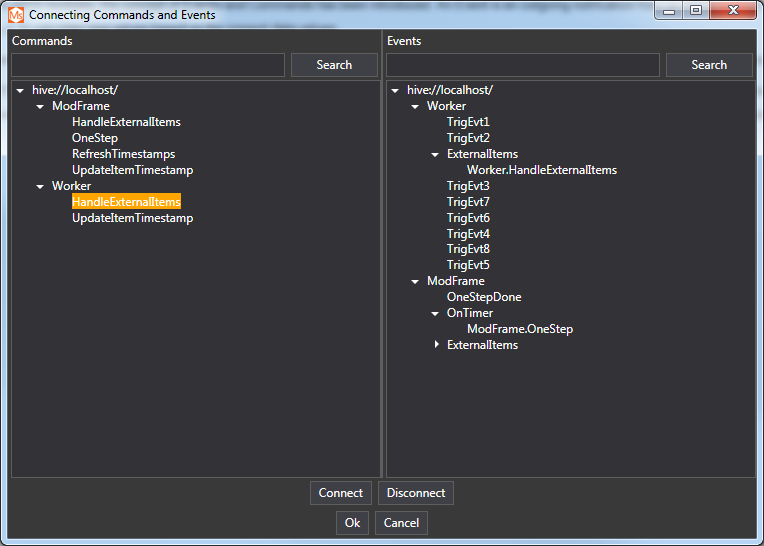

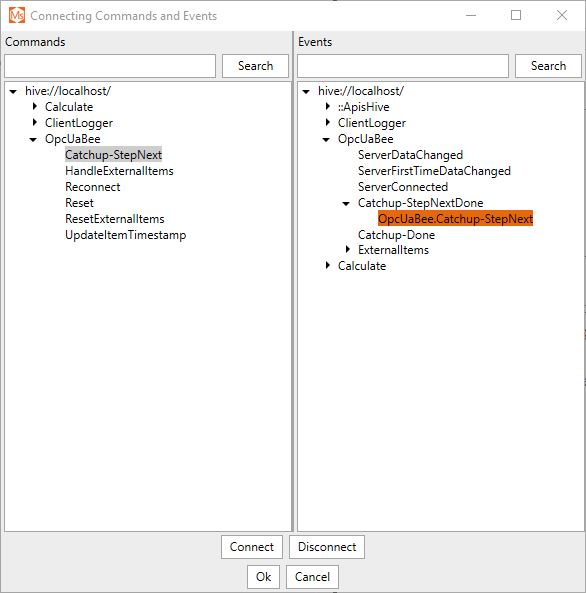

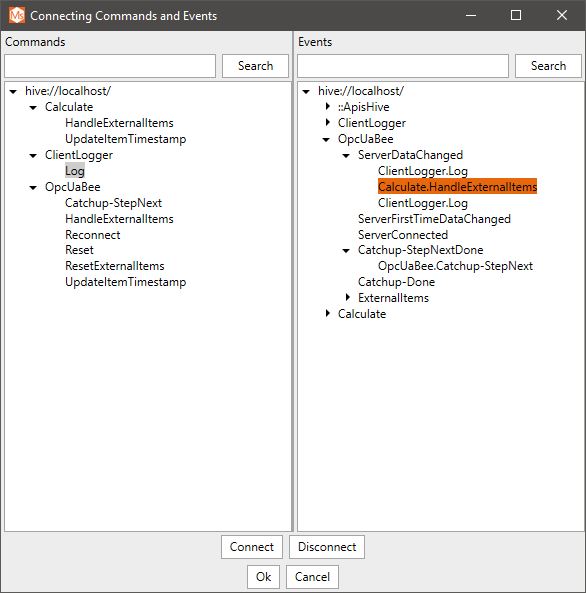

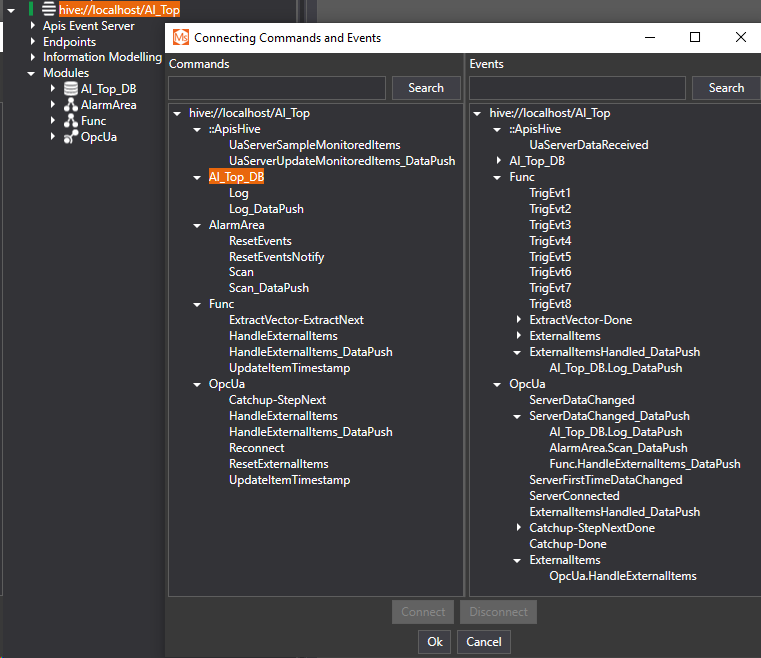

Commands And Events

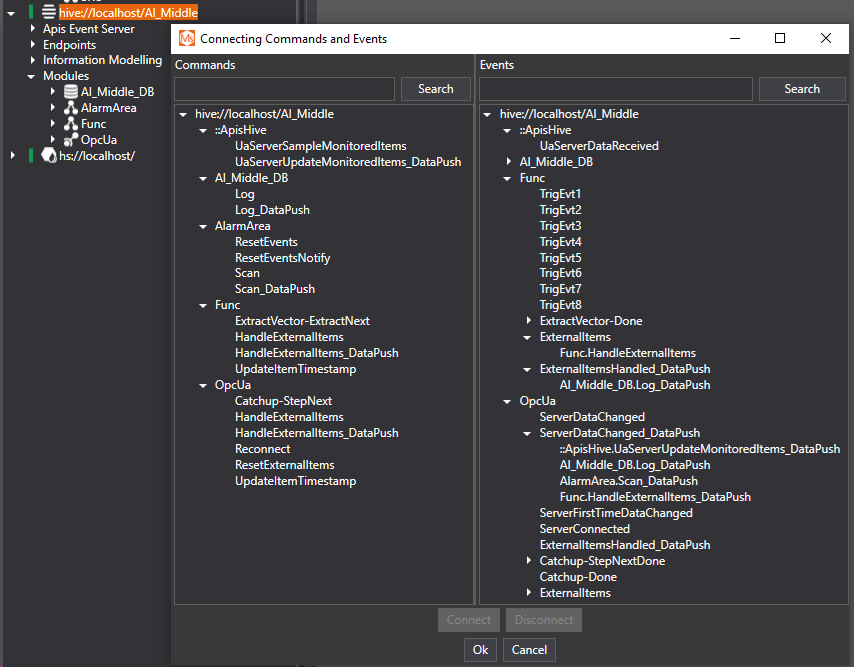

To configure commands and events, select Connect Events in the context menu of either the Instance Node or a Module Node in the Solution Explorer.

This will bring up the Connect Events window:

The left hand side of the window displays the available commands in the modules. In this case there are two modules with a set of commands. Different module types will typically have different commands and events.

On the right-hand side, the events of the modules are listed. Below the events (on level 4), the commands that will be executed when the event occurs are listed. You can add and remove commands by dragging them from the left-hand side to the right-hand side.

The order of the commands can also be changed by dragging the command up or down the tree.

Alternatively, you can select a command on the left-hand side and an event on the right-hand side, and click Connect to add the command to the event.

By marking a command on the right hand side and clicking disconnect, the command will be removed from the event.

No changes are made to the server configuration until you click "Ok". Clicking Cancel discards all changes.

Install and use floating Runtime License

What is Network Licensing?

Network licensing is based on client/server architecture, where licenses are placed on a centralized system in the subnet. On the License Server computer, the APIS License Server must be running to serve license requests from clients.

The main difference between activating software that uses a network license rather than a standalone license is that the license code must reside on the system where the License Manager runs. This is not necessarily the system where the client application will be used.

Prepare the license server

TODO: Describe how to set up the APIS/Cryptlex Licensing server...

Email: support@prediktor.no

Phone: +47 95 40 80 00

Request a license

TODO: Describe how to request an APIS license...

Email: support@prediktor.no

Phone: +47 95 40 80 00

Install a floating network license key

TODO: Describe how to deploy an APIS license...

Activate a floating license

TODO: Describe how to activate an APIS license...

Restart Apis Hive and Apis HoneyStore

When the license key is activated, Apis Hive and Apis HoneyStore have to be restarted (or started if they're not running).

- Open Apis Management Studio.

- Click "ApisHive" -> "Stop", and then "ApisHive" -> "Start", to restart.

- Click "ApisHoneyStore" -> "Stop", and then "ApisHoneyStore" -> "Start", to restart.

Connect

This section covers how to acquire data using the most common data protocols. Please pick a topic from the menu.

Connect to an OPC DA server

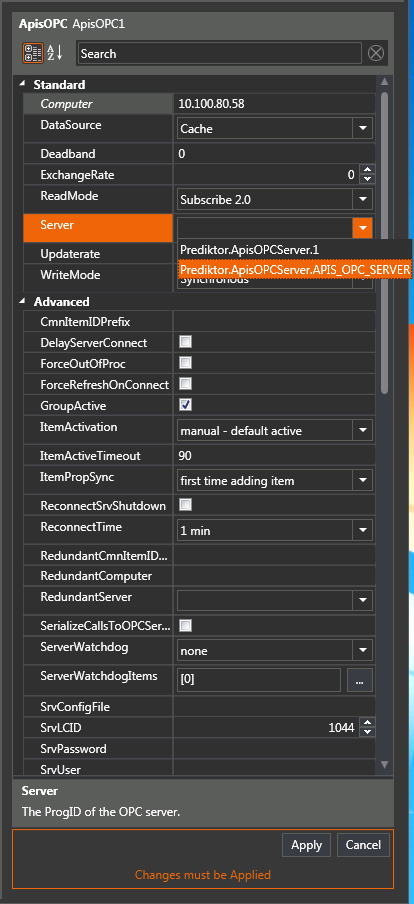

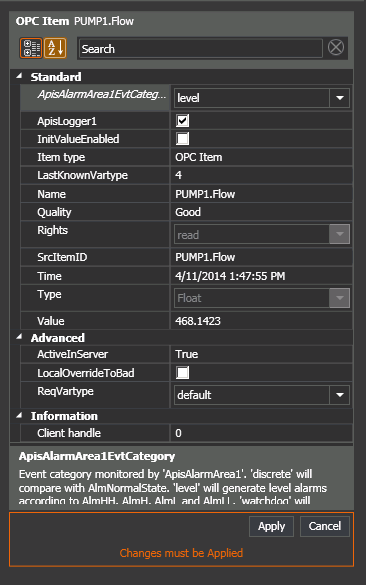

Follow the guide Add Module to Apis Hive, but this time select a module of type ApisOPC from the Module type dropdown list.

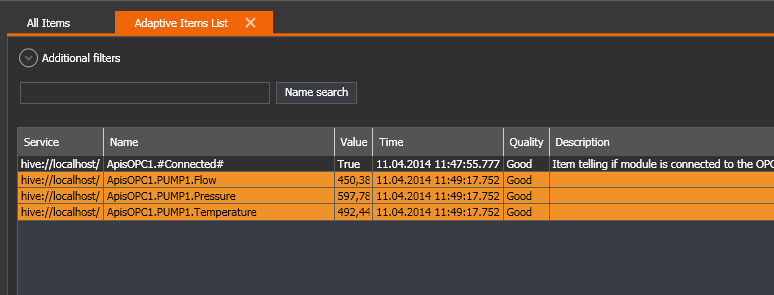

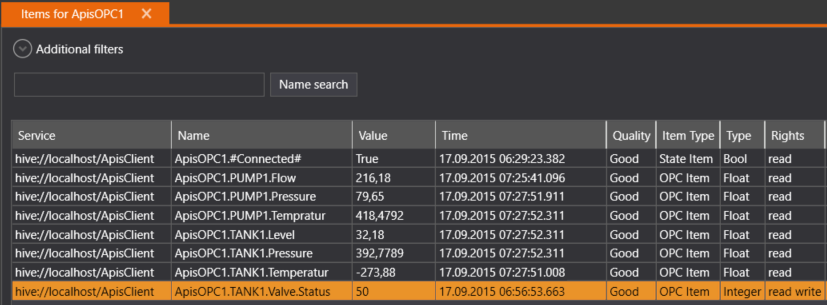

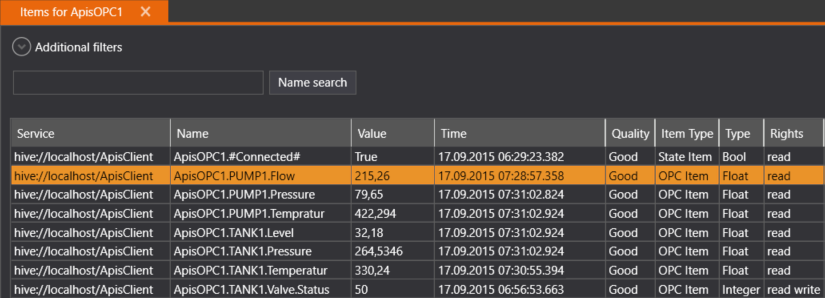

- After adding the module, select the new module named "ApisOPC1" from the Solution Explorer.

- In the Properties Editor, enter values for:

- Computer: The IP address or DNS name of your OPC server machine. If the OPC server is running on your local computer, you should leave this property blank.

- Server: Select the name of the OPC server from the dropdown list.

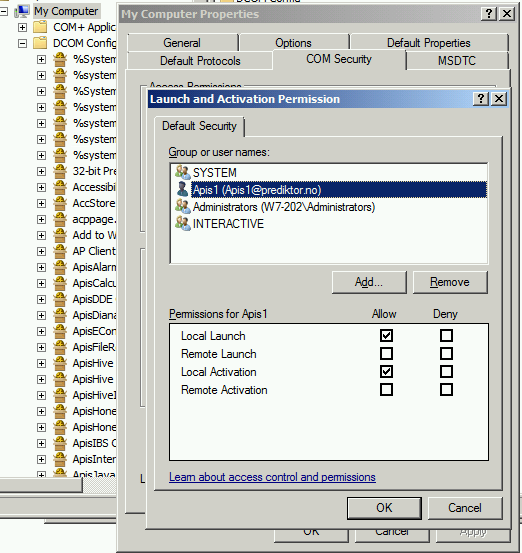

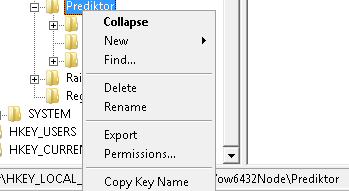

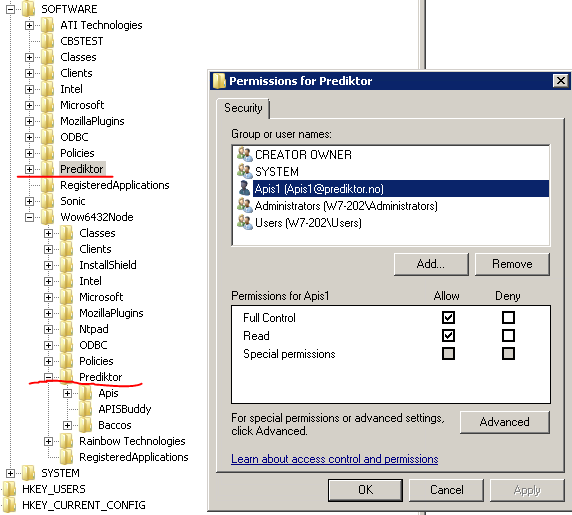

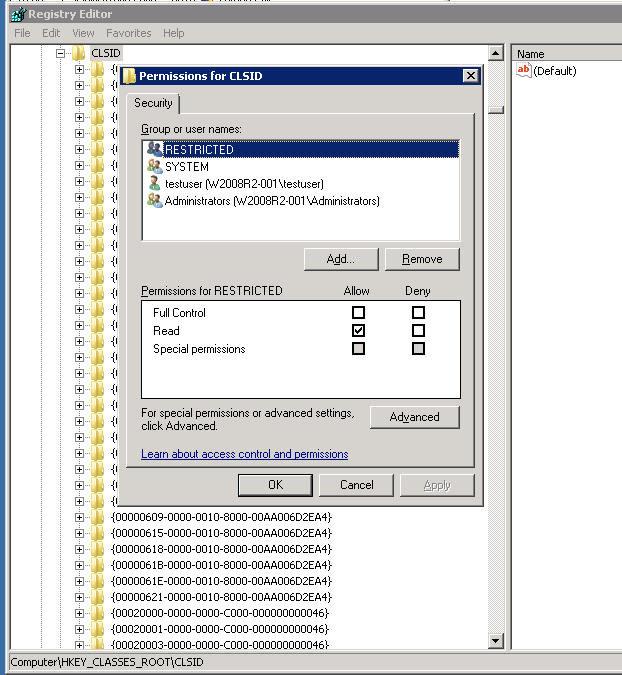

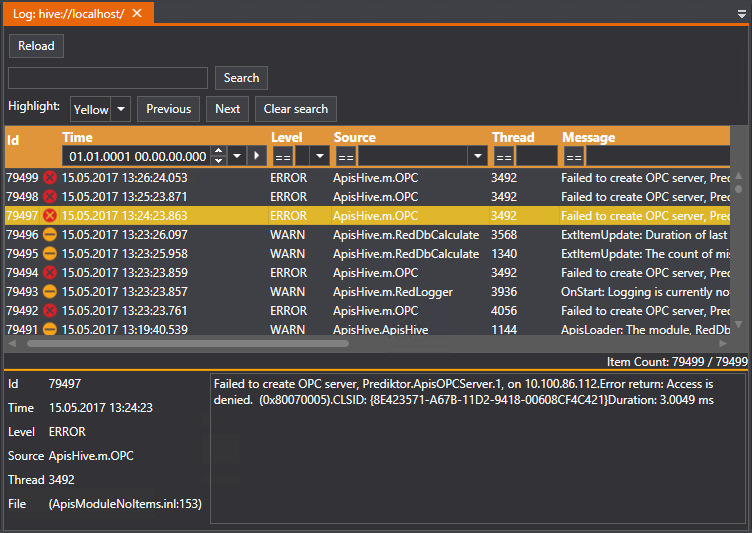

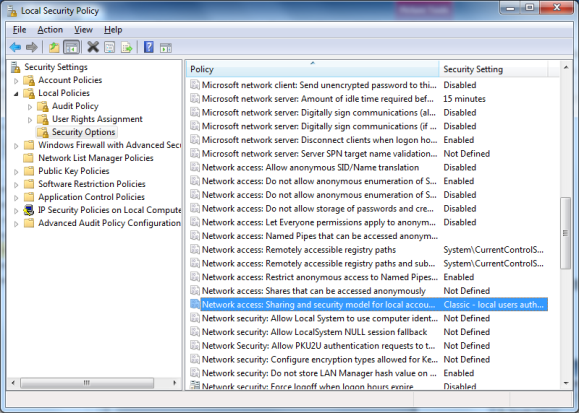

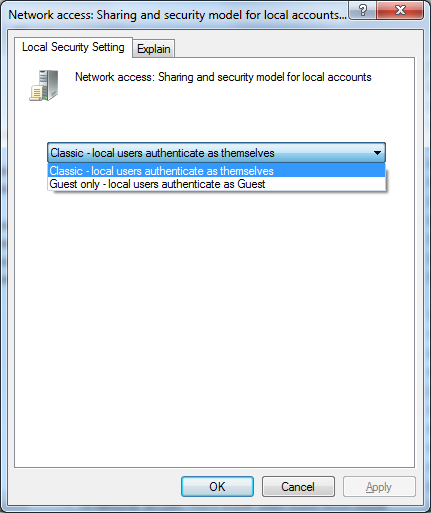

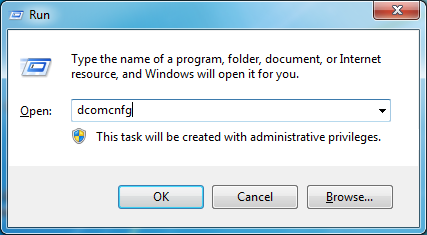

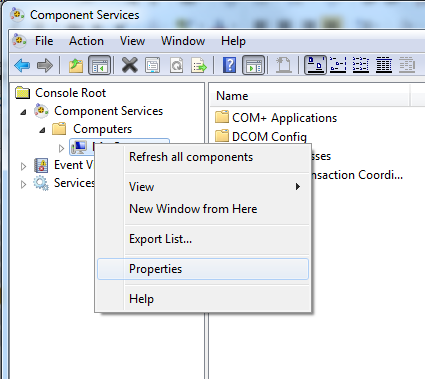

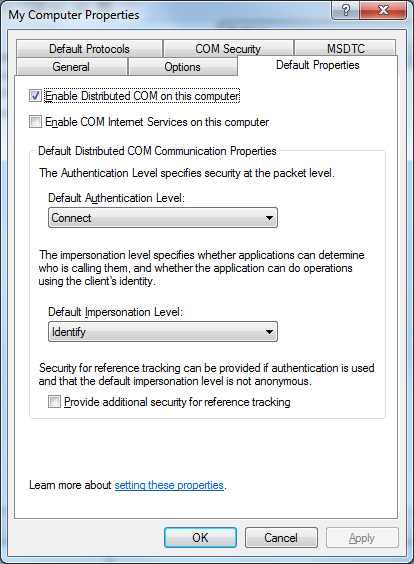

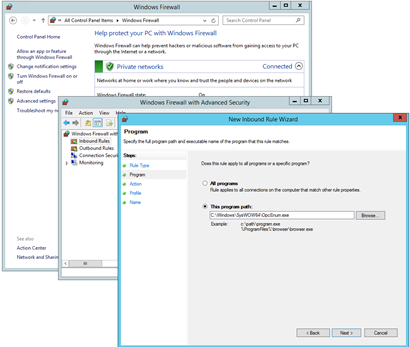

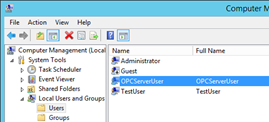

TIP: If you cannot see the name of your OPC server, see the guide OPC DCOM Setup. The log viewer in Apis Management Studio can also be useful when troubleshooting the DCOM setup.

- Press "Apply" when done.

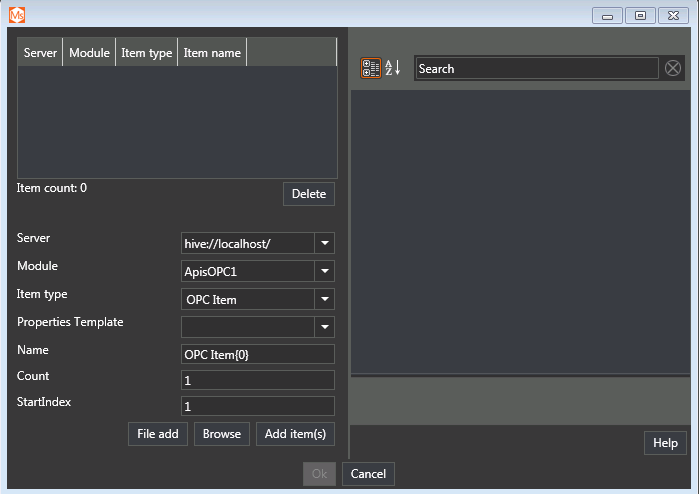

Follow the guide Add Items to a Module, but this time add items of type "OPC Item".

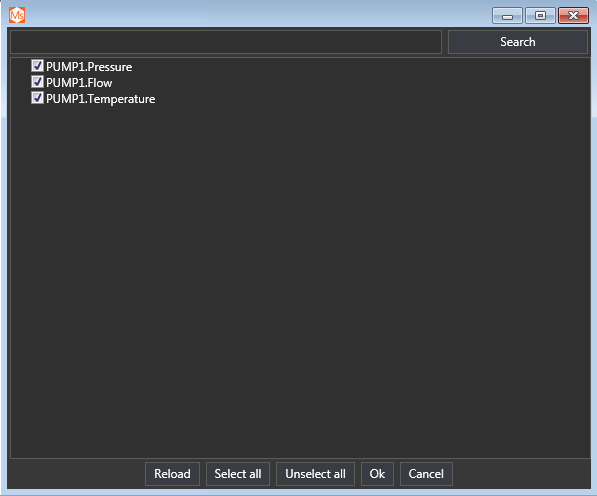

- Click the "Browse" button.

- A dialog opens that lets you select items from your OPC Server. Click "Ok" when done.

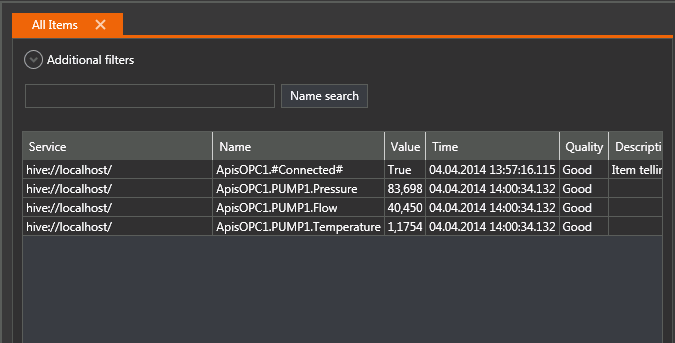

- The item list will get new entries showing the added items.

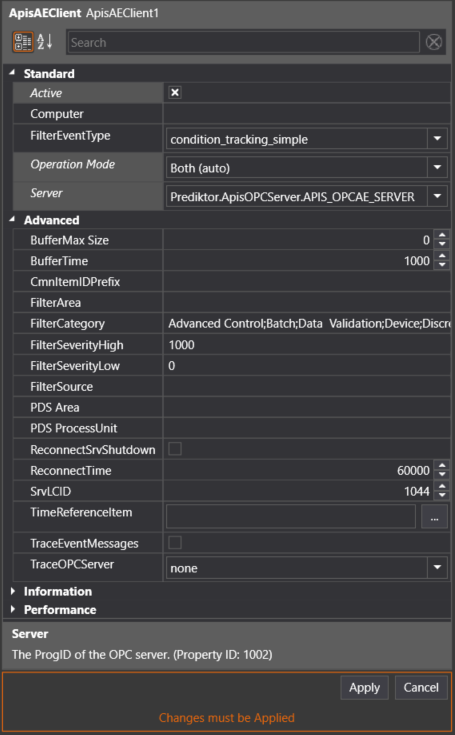

Connect To OPC AE Server

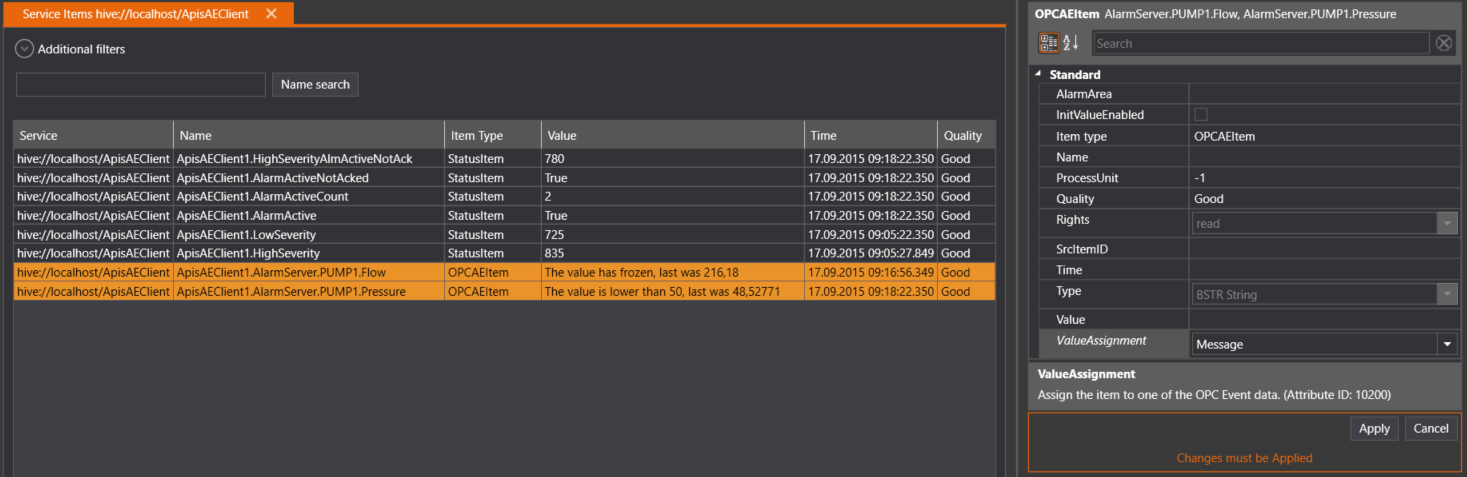

This module can both replicate the received alarms and events in a local AE server and display the information on items in a namespace. In this example, we'll do both.

Follow the guide Add Module to Apis Hive, but this time select a module of type ApisAEClient from the "Module type" dropdown list.

- After adding the module, select the new module named "ApisAEClient1" from the Solution Explorer.

- In the Properties Editor, enter values for:

- Computer: The IP address or DNS name of your OPC server machine. If the OPC server is running on your local computer, you should leave this property blank;

- Server: Select the name of the OPC server from the dropdown list;

- Operation Mode: Both (auto). Items will be automatically added to the namespace, and the alarms will be registered in the Apis Alarm server in the same Apis instance.

TIP: If you cannot see the name of your OPC AE server, see the guide OPC DCOM Setup. The log viewer in Apis Management Studio can also be useful when troubleshooting the DCOM setup.

- Press "Apply" when done.

- The items will be created automatically when alarms and events are received. You can change which alarm information is displayed on items of type OPC AE Item: select the item and change the ValueAssigment property.

- Press "Apply" when done.

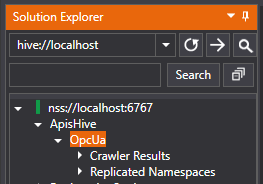

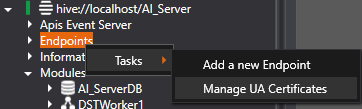

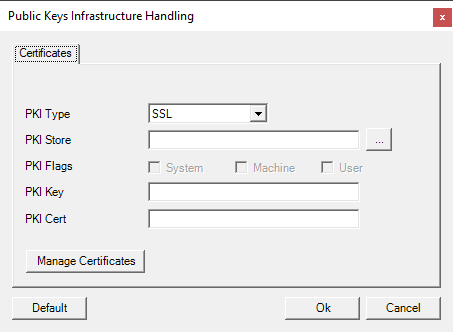

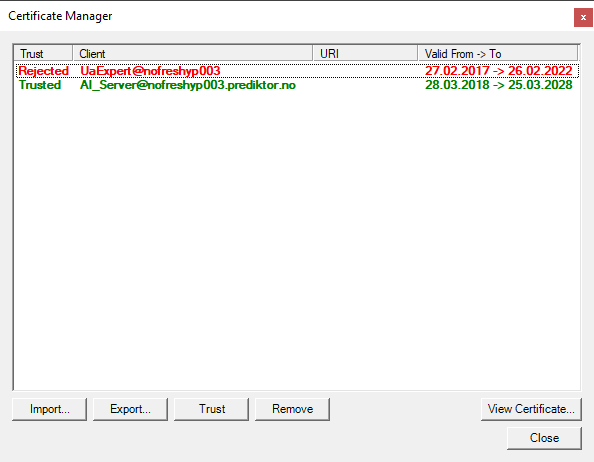

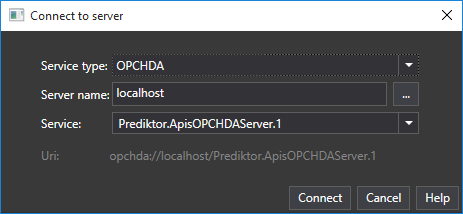

Connect To OPC UA Server

Follow the guide Add Module to Apis Hive, but this time select a module of type Apis OpcUa from the Module type dropdown list.

- After adding the module, select the new module named "ApisOPCUA1" from the Solution Explorer.

- In the Properties Editor, enter values for:

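- ServerEndPoint: Endpoint URL of the OPC UA server. Syntax: opc.tcp://{HostName or IP address}:{port number}, for example opc.tcp://localhost:4840 (4840 is the port registered for OPC UA; your server may use a different one).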

- Press "Apply" when done.

Follow the guide Add Items to a Module, but this time add items of type "OPC Item".

-

Click the "Browse" button.

-

A dialog opens that lets you select items from the OPC UA Server.

-

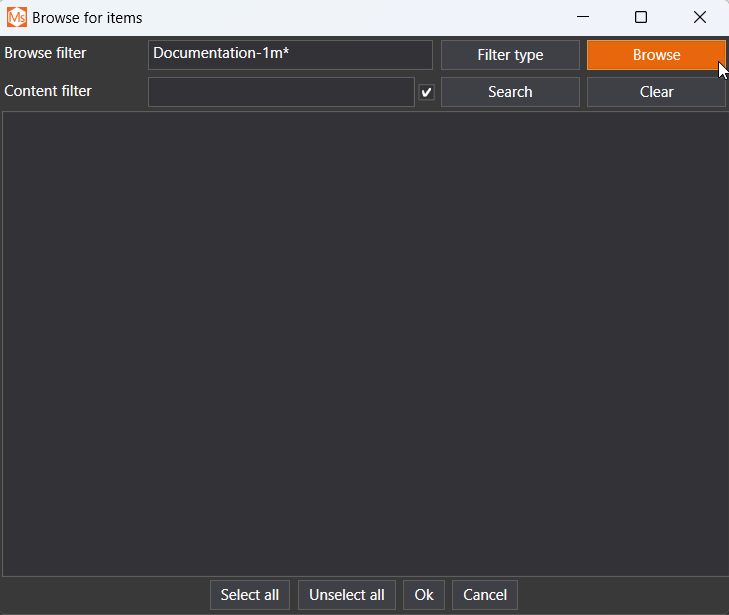

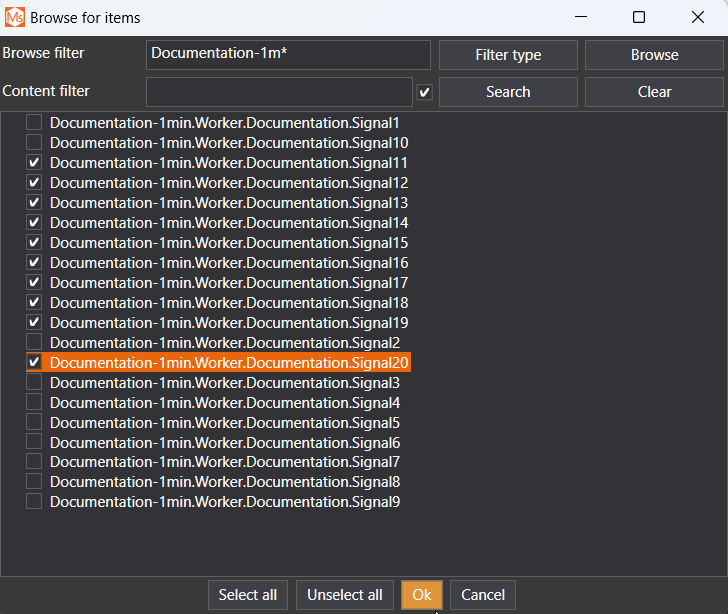

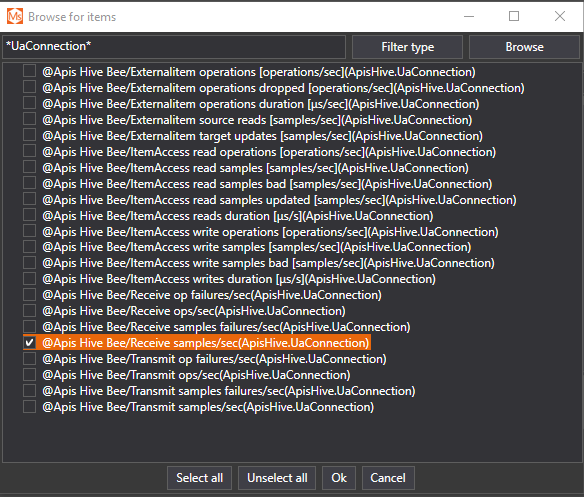

When the dialog appears it is not populated with any items. In order to find the items you are looking for, you may enter your search criteria in the input box at the top of the dialog, By default it is the name of the leaf nodes you may filter on, but you can click the "Filter type" button to set other filter-types.

-

Click "Browse" to perform the search for items. When no search criteria is entered, the entire available namespace will be displayed.

-

Select the items you want to add and click "Ok" when done.

-

The item list will get new entries showing the added items.

Connect To Modbus Slave

Follow the guide Add Module to Apis Hive, but this time select a module of type ApisModbus from the "Module type" dropdown list.

- After adding the module, select the new module named "ApisModbus1" from the Solution Explorer.

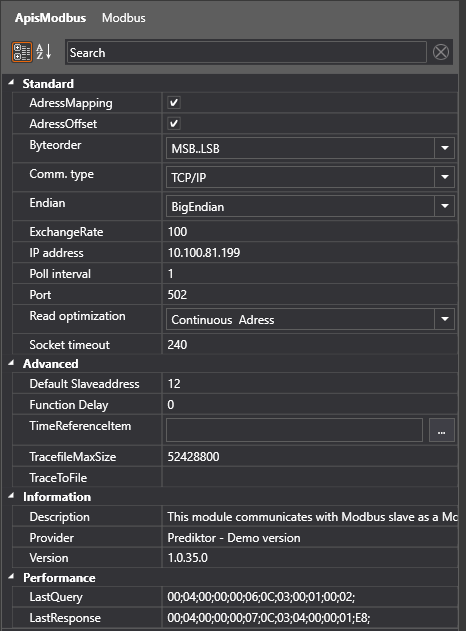

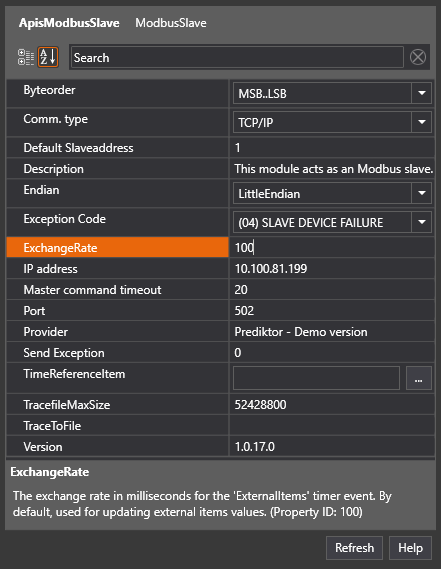

Basic setup, communication interface

The module supports both serial (RTU) and TCP/IP (Modbus TCP) interfaces, depending on your server. In the Properties Editor, enter values for:

-

TCP/IP based server:

- Comm. type: TCP/IP

- IP address: IP address of your Modbus slave.

- Port: TCP port of your Modbus slave

.

-

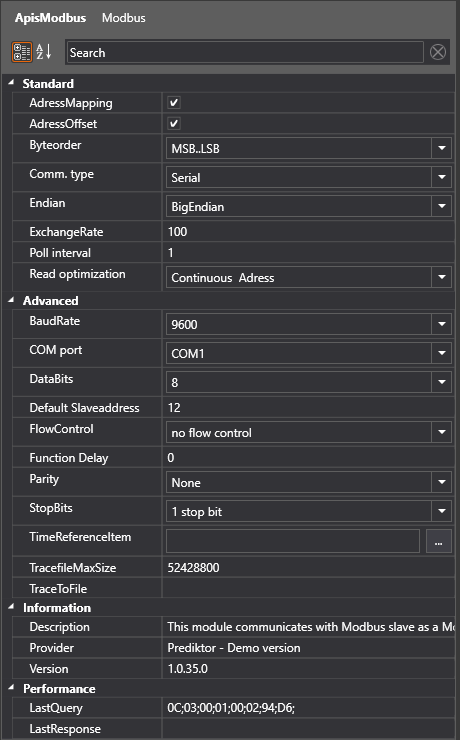

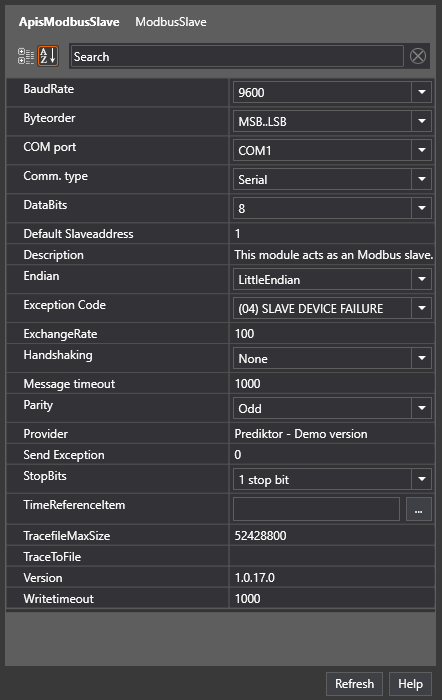

Serial communication based server :

- Comm. type: Serial

- Com port: Com port connected to the slave.

- BaudRate: Baud rate of your slave serial setup.

- DataBits: Data bits of your slave serial setup.

- FlowControl: Handshake of your slave serial setup.

- Parity: Parity of your slave serial setup.

- StopBits: Stop bits of your slave serial setup.

- In addition, set the following in the Properties Editor:

- Poll interval: Enter how often this master should poll the server for new values (in seconds).

- Default Slave address: The slave address that new items get by default when they are created.

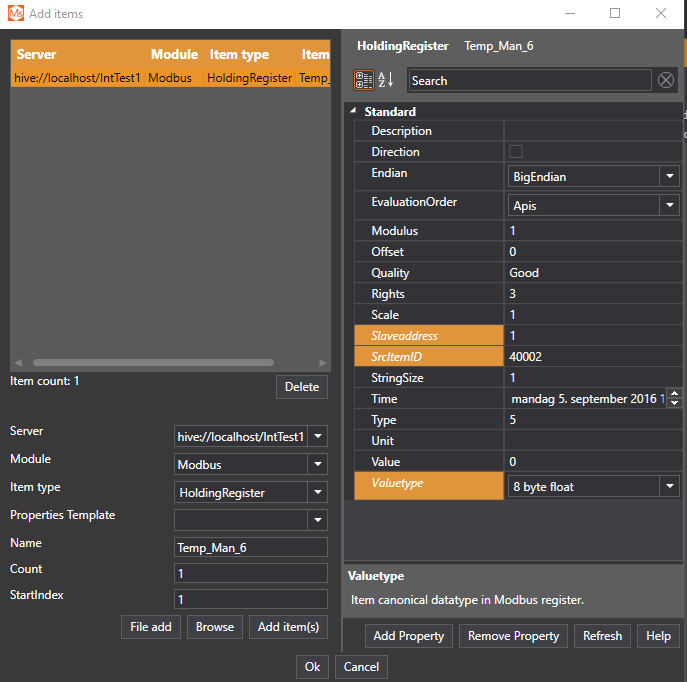

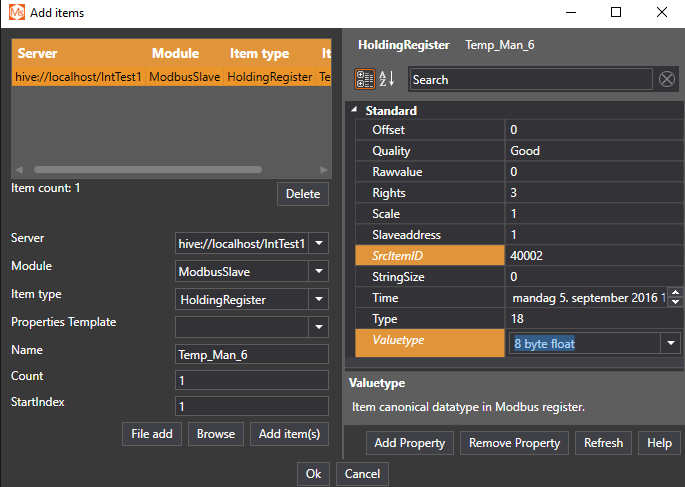

Add Items (registers)

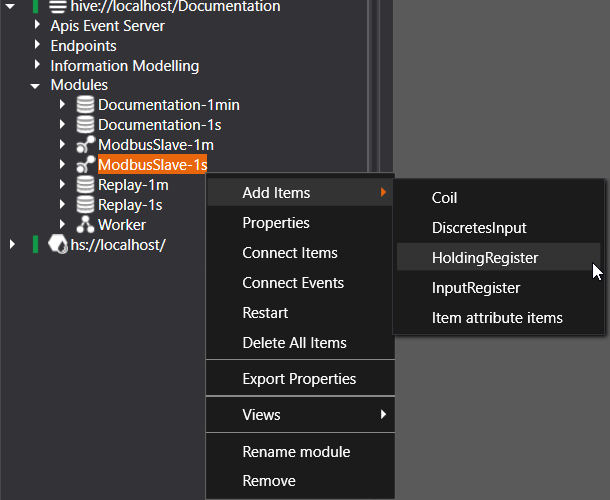

Now follow the guide Add Items to a Module, but this time select the Modbus module and add items of one of the register types:

- Coil

- DiscretesInput

- InputRegister

- HoldingRegister

Example: Holding register

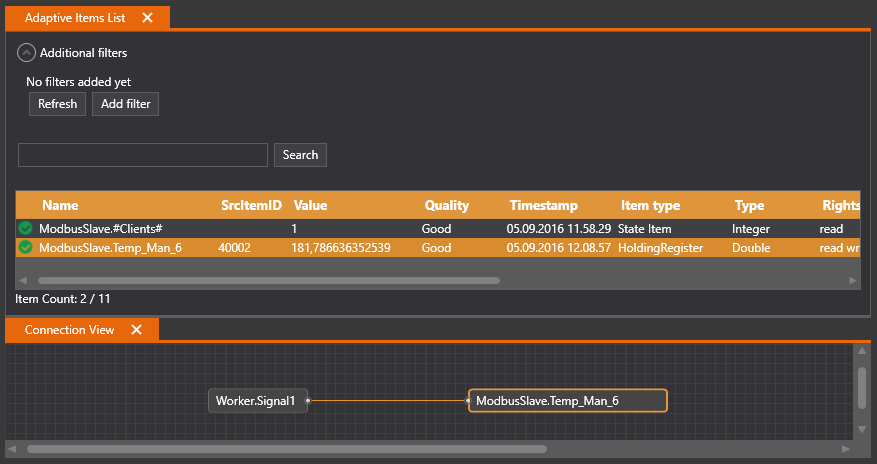

Give the item a descriptive name, such as "Temp_Man_6". Make sure SrcItemID points to a valid register address, such as "40002", check the slave address, and set the correct value type for the value in the slave's register. Click OK.

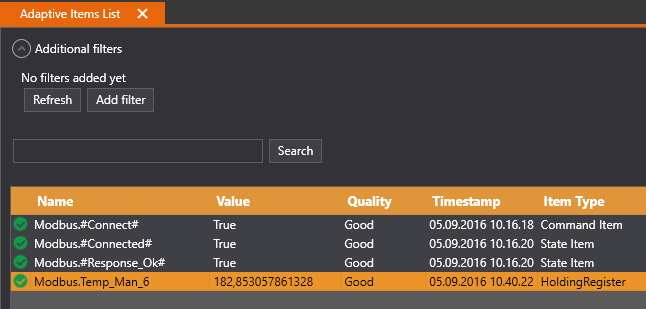

If all settings are correct, the "Temp_Man_6" tag should display the value of holding register 40002 of the slave.

Troubleshooting

If there's no connection or data received:

- Use a third-party tool such as Wireshark to check whether the server is sending telegrams.

- For a TCP/IP based server:

- Check the firewall settings for the receiving port.

- Check the network connection to the server (for example, with ping).

Connect to a WITS-0 server

Follow the guide Add Module to Apis Hive, but this time select a module of type ApisWITS from the "Module type" dropdown list.

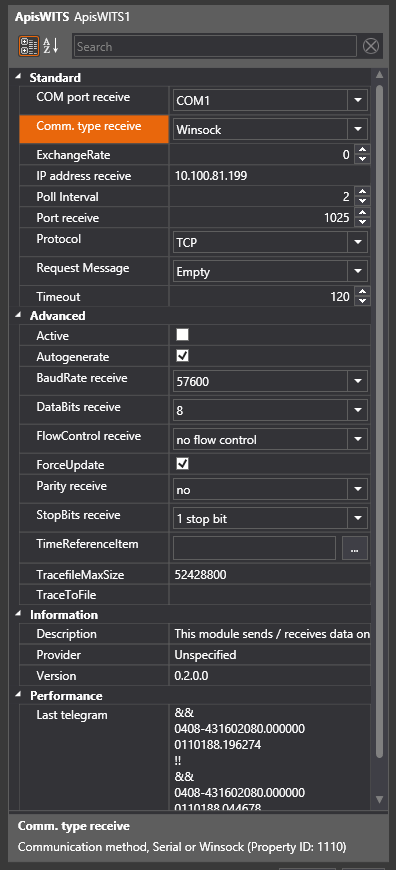

- After adding the module, select the new module named "ApisWITS1" from the Solution Explorer.

The module supports both serial and TCP/IP interfaces, depending on your server. In the Properties Editor, enter values for:

- TCP/IP-based server:

- Comm. type receive: Winsock

- IP address receive: IP address of your WITS-0 server.

- Port receive: TCP port of your WITS-0 server.

- Protocol: The protocol of your WITS-0 server, TCP or UDP.

- Serial communication based server:

- Comm. type receive: Serial

- Com port receive: Com port connected to the server.

- Baud rate receive: Baud rate of your server serial setup.

- Data bits receive: Data bits of your server serial setup.

- Flow Control receive: Handshake of your server serial setup.

- Parity receive: Parity of your server serial setup.

- StopBit receive: Stop bits of your server serial setup.

- In addition, set the following in the Properties Editor:

- Poll interval: Enter how often this client should poll the server for new values (in seconds).

- Autogenerate: Decide whether the client should generate items automatically based on the telegrams from the server. The items will be generated according to W.I.T.S. (Wellsite Information Transfer Specification).

- Press "Apply" when done.

If you selected Autogenerate in the property setup there should be no need to add items manually.

Otherwise:

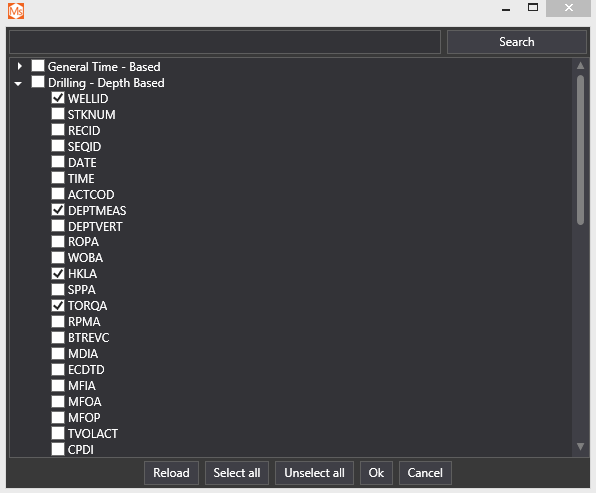

Follow the guide Add Items to a Module, but this time add items of type "WITSItem".

- Click the "Browse" button.

- A dialog opens that lets you select predefined items according to W.I.T.S. (Wellsite Information Transfer Specification). Click "Ok" when done.

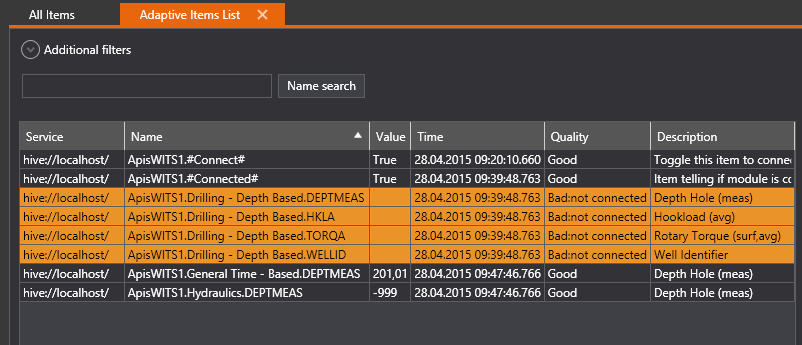

- The item list will get new entries showing the added items.

- Alternatively:

- In the name field, write a custom name and click the "Add item(s)" button.

- Select the new item and fill in its properties manually: Record, Field, Type, etc.

Troubleshooting

If there's no connection or data received:

- Use a third-party terminal application such as PuTTY to check whether the server is sending telegrams.

- For a TCP/IP based server:

- Check the firewall settings for the receiving port.

- Check the network connection to the server (for example, with ping).

Connect to an SQL Server

Follow the guide Add Module to Apis Hive, but this time select a module of type ApisSQL from the "Module type" dropdown list.

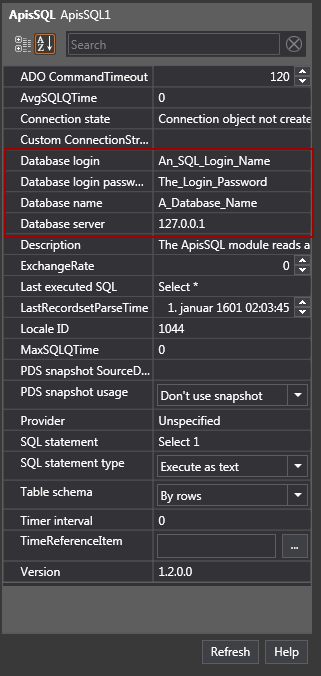

- After adding the module, select the new module named "ApisSQL1" from the Solution Explorer.

The module supports communication with SQL Server, either on a fixed interval (based on the Timer interval property) or triggered by using a trigger item.

This Quick Start Guide shows you how to connect an SQL Bee to your SQL Server instance and how to send and receive values from SQL Server, using simple queries and more advanced stored procedures.

To start with, you'll need to configure your new SQL Bee to connect to SQL Server. You'll need access to an SQL Server from the computer on which you're configuring the SQL Bee. This includes a database username and password, along with network access between the two.

In your SQL Module, you'll need to set the following properties:

The "Database login" property should contain the name of the user you want to connect as. The "Database login password" property should contain the password for that user. The "Database name" should contain the name of the SQL database you want to use. The "Database server" property should contain the name or IP address of the SQL server, along with the name of the instance, if the database isn't on the default instance.

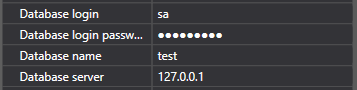

In this example, we assume that the database name is "test", the login is "sa", and the database server is local.

It can also be useful to set the "SQL statement" property to "Select 1" and the "Timer interval" property to 1000. Press Apply when you're happy with the field values. You can then check the "Connection state" property to see whether the connection was successful. If it was, it should display "Connection state: open".
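If the connection state does not become open, it can help to test the same credentials outside Apis. For example, log in with the configured "Database login" in SQL Server Management Studio or sqlcmd and run the query below. This is a generic T-SQL check, not an Apis feature. It reports the server (and instance) name, database and login the session is using, which should match the "Database server", "Database name" and "Database login" properties. "test" is the example database name used in this guide.

```sql
-- Generic connectivity check; run it while connected as the login configured in "Database login".
USE [test];  -- example database name from this guide ("Database name" property)

SELECT @@SERVERNAME  AS ServerAndInstance,  -- compare with the "Database server" property
       DB_NAME()     AS CurrentDatabase,    -- compare with the "Database name" property
       SUSER_SNAME() AS LoginName;          -- compare with the "Database login" property
```

If the USE statement fails, the login either cannot reach the database or lacks access to it. In that case, the SQL Bee is unlikely to connect with the same settings.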

The next step is to change the "SQL statement" property to the SQL command you want to run. Writing "Select 1" allowed us to check that the connection works, but now we want to do something more useful.

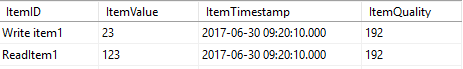

First, you will need a table named "Items". You can use the following script to create it:

```sql
USE [test]
GO

/****** Object: Table [dbo].[Items] Script Date: 30.06.2017 10.24.40 ******/
SET ANSI_NULLS ON
GO

SET QUOTED_IDENTIFIER ON
GO

CREATE TABLE [dbo].[Items](
    [ItemID] [nvarchar](50) NOT NULL,
    [ItemValue] [float] NULL,
    [ItemTimestamp] [datetime] NULL,
    [ItemQuality] [int] NULL,
    CONSTRAINT [PK_Items] PRIMARY KEY CLUSTERED
    (
        [ItemID] ASC
    ) WITH (PAD_INDEX = OFF, STATISTICS_NORECOMPUTE = OFF, IGNORE_DUP_KEY = OFF, ALLOW_ROW_LOCKS = ON, ALLOW_PAGE_LOCKS = ON) ON [PRIMARY]
) ON [PRIMARY]
GO
```

The table should contain at least one row with ItemID and ItemValue set.

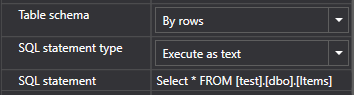

Execute as text

The simplest way to acquire data is by sending a simple SQL statement to the server.

Set the "SQL statement type" property to "Execute as text".

Set "SQL statement" to "Select * FROM [test].[dbo].[Items]".

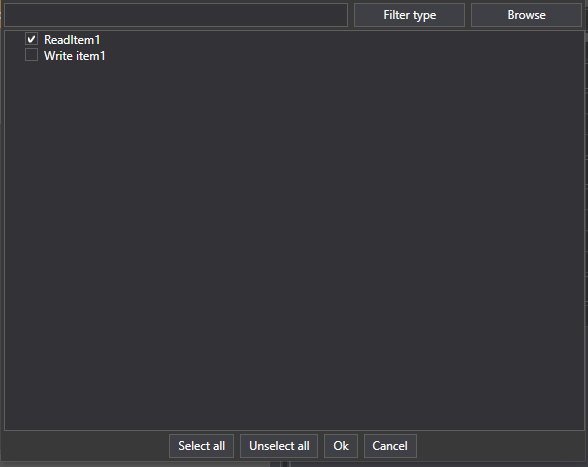

To add a Read Item, right-click the SQL Bee module and select Read Item. In the dialog box, instead of pressing Add Item, press Browse.

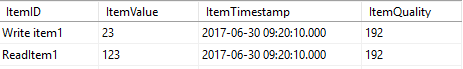

This gives you a list of available items, one of which should be ReadItem1.

Press the checkbox beside ReadItem1 and then OK. Press OK again to add the item.

The Read Item will now reflect the values of "ItemValue", "ItemTimestamp" and "ItemQuality" in the row "ReadItem1" of the "Items" table.

When an external system updates these fields in the table, the new values will be reflected in the Apis Hive namespace.

Execute as stored procedure

For more advanced queries, you may need to execute stored procedures on the SQL Server.

In this example, we'll be using a stored procedure called TestBeeParams:

```sql
USE [test]
GO

/****** Object: StoredProcedure [dbo].[TestBeeParams] Script Date: 28.06.2017 12.50.45 ******/
SET ANSI_NULLS ON
GO

SET QUOTED_IDENTIFIER ON
GO

-- CREATE the procedure the first time; use ALTER PROCEDURE when changing it later
CREATE PROCEDURE [dbo].[TestBeeParams] @Number int, @SPName nvarchar(max), @Source nvarchar(max), @XML nvarchar(max)
AS
BEGIN
    SET NOCOUNT ON;

    DECLARE @idoc int;
    -- Parse the write items that are passed in as XML
    EXEC sp_xml_preparedocument @idoc OUTPUT, @XML;

    DECLARE @ItemID nvarchar(max);
    DECLARE @ItemValue float;
    DECLARE @ItemTimestamp datetime;
    DECLARE @ItemQuality int;

    SELECT @ItemID = ItemID, @ItemValue = ItemValue, @ItemTimestamp = ItemTimestamp, @ItemQuality = ItemQuality
    FROM OPENXML(@idoc, 'ROOT/ItemSample')
    WITH
    (
        ItemID nvarchar(50) '@ItemID',
        ItemValue float '@ItemValue',
        ItemTimestamp datetime '@ItemTimestamp',
        ItemQuality int '@ItemQuality'
    );

    -- Release the memory held by the parsed XML document
    EXEC sp_xml_removedocument @idoc;

    IF @ItemID IS NOT NULL
    BEGIN
        -- Update write items
        IF EXISTS (SELECT ItemID FROM dbo.Items WHERE ItemID = @ItemID)
        BEGIN
            -- Item exists: update its data
            UPDATE dbo.Items
            SET ItemValue = @ItemValue, ItemTimestamp = @ItemTimestamp, ItemQuality = @ItemQuality
            WHERE ItemID = @ItemID;
        END
        ELSE
        BEGIN
            -- Item does not exist: insert it into the table
            INSERT INTO dbo.Items (ItemID, ItemValue, ItemTimestamp, ItemQuality)
            SELECT @ItemID, @ItemValue, @ItemTimestamp, @ItemQuality;
        END
    END

    -- Return all items, regardless of whether they have changed
    SELECT * FROM dbo.Items;
END
GO
```

This stored procedure takes in write item(s) and returns all items as read item(s). Add it to your SQL database.
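To make the procedure's logic easier to follow, or to experiment with it without a SQL Server at hand, the sketch below mirrors it in Python against an in-memory SQLite table: upsert the written item, then return every row as the read items. It is only an illustration under stated assumptions: SQLite stands in for SQL Server, and the function name `emulate_test_bee_params` and item name `WriteItem1` are hypothetical.

```python
import sqlite3


def create_items_table(conn):
    # Same columns as dbo.Items; SQLite types stand in for nvarchar/float/datetime/int.
    conn.execute(
        "CREATE TABLE Items (ItemID TEXT PRIMARY KEY, ItemValue REAL, "
        "ItemTimestamp TEXT, ItemQuality INTEGER)"
    )


def emulate_test_bee_params(conn, item):
    """Mirror TestBeeParams: upsert one written item, then return every row."""
    if item.get("ItemID") is not None:
        exists = conn.execute(
            "SELECT 1 FROM Items WHERE ItemID = ?", (item["ItemID"],)
        ).fetchone()
        values = (item["ItemValue"], item["ItemTimestamp"], item["ItemQuality"], item["ItemID"])
        if exists:
            # Item exists: update its data
            conn.execute(
                "UPDATE Items SET ItemValue = ?, ItemTimestamp = ?, ItemQuality = ? "
                "WHERE ItemID = ?",
                values,
            )
        else:
            # Item does not exist: insert it (column order matches the tuple above)
            conn.execute(
                "INSERT INTO Items (ItemValue, ItemTimestamp, ItemQuality, ItemID) "
                "VALUES (?, ?, ?, ?)",
                values,
            )
    # Return all rows regardless of whether they changed; these become the read items
    return conn.execute("SELECT * FROM Items ORDER BY ItemID").fetchall()
```

Calling it twice with the same ItemID updates the existing row instead of adding a second one, which matches the IF EXISTS branch of the procedure.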

This stored procedure uses the same "Items" table that was created in the "Execute as text" section above.

As you can see from the stored procedure, the write items are passed in using XML. For multiple items, you can add a WHERE clause to the initial select, allowing you to distinguish between different items. However, for this example, we'll just be sending in a single value.
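The guide does not show the XML that the SQL Bee actually sends, but the OPENXML call (row path 'ROOT/ItemSample' with attributes @ItemID, @ItemValue, @ItemTimestamp and @ItemQuality) implies a shape like the one assumed below. This Python sketch builds such a document and extracts the same four columns, which can help when calling the procedure by hand for testing. Note that with several ItemSample rows, the procedure's `SELECT @var = ...` leaves only one row's values in the variables, which is why a WHERE clause is needed for multiple items.

```python
import xml.etree.ElementTree as ET

# Assumed payload shape, inferred from OPENXML(@idoc, 'ROOT/ItemSample') and the
# attribute mappings in TestBeeParams; the SQL Bee's real XML may differ.
SAMPLE_XML = (
    "<ROOT>"
    '<ItemSample ItemID="WriteItem1" ItemValue="42.5" '
    'ItemTimestamp="2017-06-30T10:06:08" ItemQuality="192"/>'
    "</ROOT>"
)


def parse_item_samples(xml_text):
    """Return one dict per ItemSample element, mirroring the OPENXML WITH clause."""
    root = ET.fromstring(xml_text)
    samples = []
    for elem in root.findall("ItemSample"):
        samples.append({
            "ItemID": elem.get("ItemID"),
            "ItemValue": float(elem.get("ItemValue")),
            "ItemTimestamp": elem.get("ItemTimestamp"),
            "ItemQuality": int(elem.get("ItemQuality")),
        })
    return samples
```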

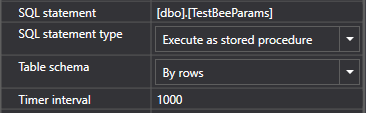

Once the stored procedure is in your database, change the following SQL Bee properties:

Set "SQL statement type" to "Execute as stored procedure". The "SQL statement" property should contain only the name of the stored procedure (TestBeeParams), without any parameters or the EXEC keyword; because the statement type is "Execute as stored procedure", the parameters and EXEC are added automatically. Set the "Table schema" property to "By rows", since we're sending back one row per value. Set the Timer interval to 1000 milliseconds so that responses appear fairly quickly.

After we apply the values, we need to add a single write item to the module. Do this by right-clicking on the SQL Bee module, going to "Add Item" and pressing on Write Item:

The name of the item doesn't matter in this case, because it is simply inserted into the table, so you can just press the "Add Item" button at the bottom of the dialog box:

Then press "Ok" to finish adding the Write Item. You can then change the item to any type you like.

Once the write item is set up, you can add a Read Item. This example assumes that the Items table contains a row with ItemID "ReadItem1"; if it doesn't, you can add one with the following query:

```sql
USE [test]
GO

INSERT INTO [dbo].[Items]
    ([ItemID], [ItemValue], [ItemTimestamp], [ItemQuality])
SELECT 'ReadItem1', 123.5, '2017-06-30 10:06:08.000', 192
GO
```

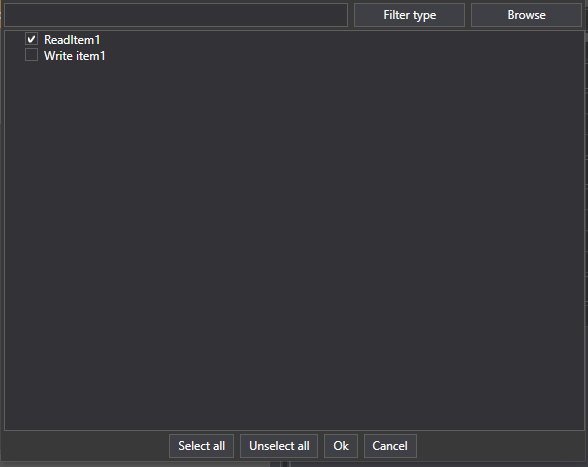

To add a Read Item, right-click on the SQL Bee module again and press Read Item. In the dialog box, instead of pressing Add Item, press Browse.

This gives you a list of available items, one of which should be ReadItem1 (as well as the Write Item added previously):

Press the checkbox beside ReadItem1 and then OK. Press OK again to add the item.

The Read Item will now reflect any value you write in the Write Item. The Write Item value is sent into the stored procedure, read from the XML, and sent back through the SELECT statement at the bottom of the stored procedure.

Troubleshooting

Note that stored procedures called from the SQL Bee module must not use temporary tables; any stored procedure that contains a temporary table will fail to run.

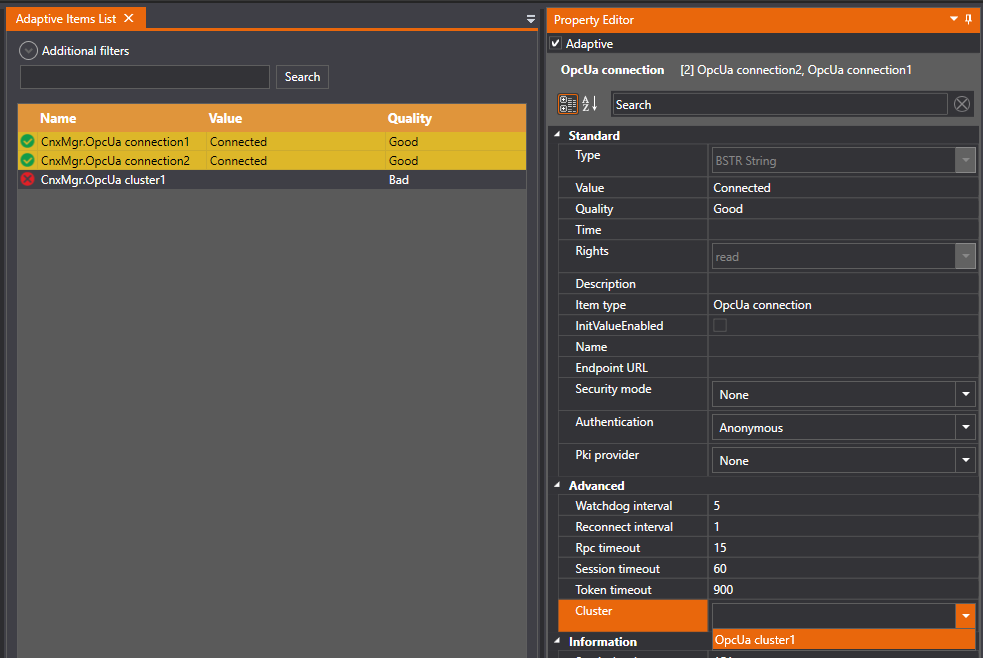

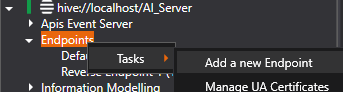

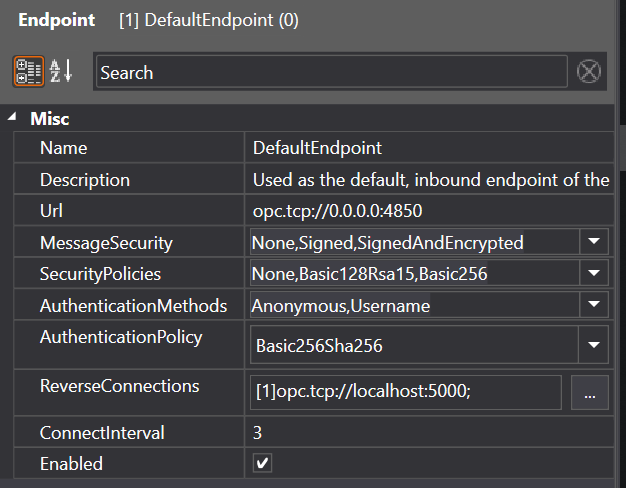

Configure Connection Manager

The connection manager module (ApisCnxMgr) is used to configure connections to remote OpcUa servers.

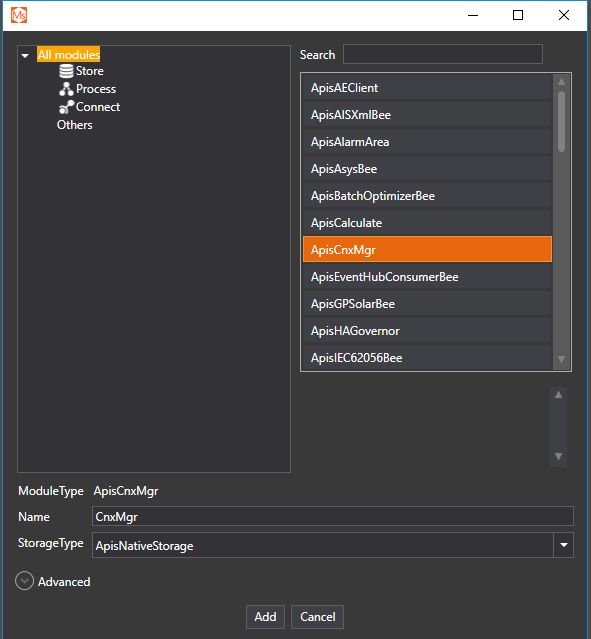

To create a module of this type, follow the guide Add Module to Apis Hive and select the module type "ApisCnxMgr":

Click 'Add' followed by 'Ok' in the module properties dialog to create the module.

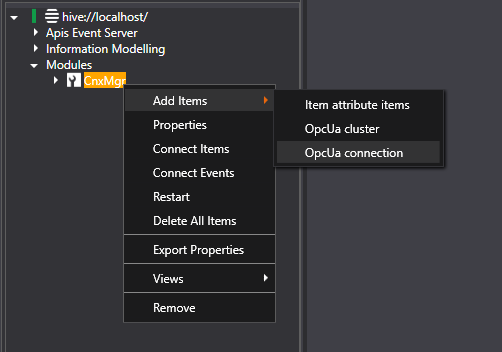

Next, items of type "OpcUa connection" must be added to the module. Right-click the new module in Solution explorer, select "Add items" and then "OpcUa connection":

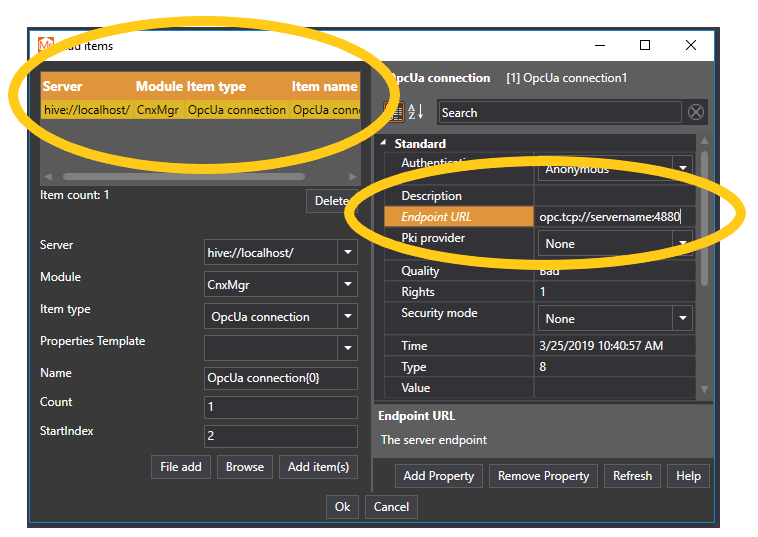

In the "Add items" dialog, click 'Add item(s)' to create an OpcUa connection item with the name "OpcUa connection1". Select this item in the item-list, and specify the "Endpoint URL" property for the OpcUa server:

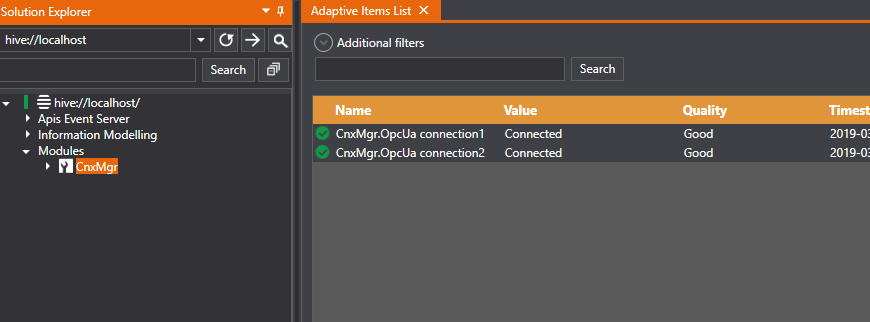

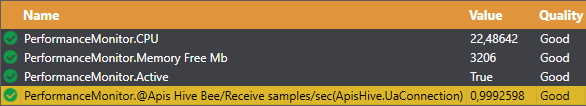

Repeat these steps for each OpcUa server that ApisHive should connect to. The item list in Apis Management Studio will show the status of each connection:

The connection manager module also supports the item type "OpcUa cluster", which is used when redundant OpcUa servers are available and you want ApisHive to fail over automatically between these servers. After creating an OpcUa cluster item, select the connection items that are part of this cluster and assign their "Cluster" property:

The OpcUa and OpcUaProxy modules can now connect to the cluster, and will automatically choose the best server in the cluster based on the connection status and ServiceLevel of each server.
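The exact selection rule used by the connection manager is not documented here. As a rough illustration only, the Python sketch below assumes the simplest policy consistent with the description: among the connected servers, pick the one with the highest OPC UA ServiceLevel (a Byte value, 0 to 255, where higher means the server is better able to deliver data). The class and endpoint names are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class ServerState:
    endpoint_url: str
    connected: bool
    service_level: int  # OPC UA ServiceLevel, 0-255; higher means healthier


def pick_active_server(cluster):
    """Return the connected server with the highest ServiceLevel, or None."""
    candidates = [s for s in cluster if s.connected]
    if not candidates:
        return None
    # max() keeps the first server on ties, so list order acts as a tie-breaker
    return max(candidates, key=lambda s: s.service_level)
```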

Stream Data to Broker

Follow the guide Add Module to Apis Hive, but this time select a module of type ApisUaPublisherBee from the Module type dropdown list.

- After adding the module, select the new module named "UaPublisherBee" from the Solution Explorer.

- First, select the type of communication:

  - File (used for debugging; messages are written to a file so they can be inspected)

  - MQTT (when using the MQTT protocol to the broker)

  - AMQP (when using the AMQP protocol to the broker)

- Press Apply

When the protocol is selected, several parameters need to be set, and these will vary depending on the selected broker type.

Brokertype: File

- Properties

  - Filename: the name of the file to write to.

This is mainly used for debugging, to see the content of the messages themselves. The only property here is the filename where the messages will be stored. Each message is written as one line. To avoid unlimited file size and disk usage, the plugin uses 10 files: when a file reaches a certain size limit, a new file is created, named XX_0 up to XX_9.
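When inspecting the debug output, it can help to read all rotated files in one go. The Python sketch below assumes the rotated names are the configured filename with `_0` to `_9` appended (the exact placement of the suffix, for example relative to a file extension, is an assumption) and yields one message per line. Files are read in index order, which is not necessarily chronological once the rotation has wrapped around.

```python
from pathlib import Path


def rotated_files(filename, count=10):
    """Candidate names <filename>_0 ... <filename>_9 (suffix placement is an assumption)."""
    return [Path(f"{filename}_{i}") for i in range(count)]


def read_messages(filename):
    """Yield one message per non-empty line from whichever rotated files exist."""
    for path in rotated_files(filename):
        if path.exists():
            with path.open(encoding="utf-8") as f:
                for line in f:
                    line = line.rstrip("\n")
                    if line:
                        yield line
```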

Brokertype: AMQP

- Properties

  - AMQP Type: select the communication method to use, either WebSocket or HTTPS, synchronous or not.

  - AMQPMain Address: the Microsoft Event Hub endpoint where all real-time data is sent.

  - AMQPBackFill Address: the endpoint of the backfill channel.

  - AMQP Connectiontype: Multiple, Single, or Transient. The default is Multiple.

In addition to these settings, the Main EntityPath/Topic and BackFill EntityPath/Topic properties have to be filled in. It is possible to use the same values for both the Main and BackFill properties, but then all data will be sent to the same Event Hub.

Brokertype: MQTT

- Properties

  - MQTTMain Address: address of the broker, e.g. test.mosquitto.org

  - MQTTMain Port: port to use, e.g. 1883

  - MQTTMain ClientId: a unique string (e.g. a GUID)

  - MQTTMain User: a user defined by the broker.

  - MQTTMain Password: the password for the broker.

  - MQTTMain CleanSession: enabled or not.

  - MQTTMain Version: V3.1.1 or V5.0.

  - MQTTMain Transport: TcpServer (without security) or TcpServerTLS (with security/encryption).

  - MQTTMain Client certificate: full name of a certificate, if the broker needs one to verify the client.

  - MQTTBackFill Address: address of the broker, e.g. test.mosquitto.org

  - MQTTBackFill Port: port to use, e.g. 1883

  - MQTTBackFill ClientId: a unique string (e.g. a GUID)

  - MQTTBackFill User: a user defined by the broker.

  - MQTTBackFill Password: the password for the broker.

  - MQTTBackFill CleanSession: enabled or not.

  - MQTTBackFill Version: V3.1.1 or V5.0.

  - MQTTBackFill Transport: TcpServer (without security) or TcpServerTLS (with security/encryption).

  - MQTTBackFill Client certificate: full name of a certificate, if the broker needs one to verify the client.

These properties define communication with both the primary and the secondary broker. The primary broker always gets the real-time messages, while the secondary broker gets old messages, sent when catching up or when resending messages that the primary broker did not accept. In addition to these properties, the Main EntityPath/Topic and BackFill EntityPath/Topic properties have to be set. Also check the documentation for the common properties that have to be defined.

When using MQTT with Microsoft IoT Hub, you get a connection string from Microsoft. This has to be decoded into the individual parameters. See https://docs.microsoft.com/en-us/azure/iot-hub/iot-hub-mqtt-support#using-the-mqtt-protocol-directly-as-a-device for details.
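The linked Microsoft page describes how a device connection string (`HostName=...;DeviceId=...;SharedAccessKey=...`) maps onto MQTT settings: the host name is the broker address on port 8883 over TLS, the device ID is the client ID, the user name is `{HostName}/{DeviceId}/?api-version=...`, and the password is a SAS token. The Python sketch below performs that decoding. The `api-version` value, the mapping onto the MQTTMain properties, and the device-to-cloud topic used as Main EntityPath/Topic are assumptions to check against the Microsoft page. SAS tokens expire, so the password must be regenerated before the `se` time is reached.

```python
import base64
import hashlib
import hmac
import time
from urllib.parse import quote_plus, urlencode


def parse_connection_string(conn_str):
    """Split 'HostName=...;DeviceId=...;SharedAccessKey=...' into a dict."""
    parts = (p.split("=", 1) for p in conn_str.split(";") if p)  # keys keep trailing '=' in values
    return {k: v for k, v in parts}


def generate_sas_token(resource_uri, key_b64, expiry_epoch):
    """Device SAS token: HMAC-SHA256 over '<url-encoded uri>\\n<expiry>' with the decoded key."""
    string_to_sign = f"{quote_plus(resource_uri)}\n{expiry_epoch}".encode("utf-8")
    digest = hmac.new(base64.b64decode(key_b64), string_to_sign, hashlib.sha256).digest()
    signature = base64.b64encode(digest).decode("ascii")
    # urlencode() URL-encodes the resource URI and the signature
    return "SharedAccessSignature " + urlencode(
        {"sr": resource_uri, "sig": signature, "se": str(expiry_epoch)}
    )


def mqtt_settings(conn_str, api_version="2021-04-12", ttl_seconds=3600, now=None):
    """Map an IoT Hub device connection string onto MQTTMain-style settings."""
    cs = parse_connection_string(conn_str)
    host, device = cs["HostName"], cs["DeviceId"]
    expiry = int((time.time() if now is None else now) + ttl_seconds)
    return {
        "Address": host,
        "Port": 8883,                      # IoT Hub accepts MQTT only over TLS
        "ClientId": device,
        "User": f"{host}/{device}/?api-version={api_version}",
        "Password": generate_sas_token(f"{host}/devices/{device}", cs["SharedAccessKey"], expiry),
        "Topic": f"devices/{device}/messages/events/",
    }
```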

The other parameters can be changed later.

To start publishing data, you need to:

- Add an Apis OpcUa module. This represents the data to be published.

- Follow the guide Add Items to a Module (UaPublisher) and add an item of type "Writer Group".

  - The PublishInterval property defines how often a message is published.

  - MaxNetworkMessageSize defines the maximum size of a message.

- Follow the guide Add Items to a Module (UaPublisher) and add an item of type "Variable Dataset Writer". This is used to connect the dataset to a "Writer Group".

  - Select the "Writer Group" in the WriterGroupItem property.

  - Select the ApisOpcUa module in the "DataSetName" property.

The system now creates a message every PublishInterval, containing all data that arrived during that interval and formatted according to the configuration, and sends it to the Event Hub. To see what the transmitted messages look like, set BrokerType to File and press Apply, then enter a file name in the "FileName" property (id 2300). The published messages are then written to that file, which is a convenient way to verify that they look as expected. When you are satisfied, switch back and send the messages to the Event Hub.
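As a rough model of the two Writer Group properties, the Python sketch below groups incoming samples by PublishInterval and then splits each interval's batch so that no message exceeds MaxNetworkMessageSize. The module's real message encoding (OPC UA PubSub) is not reproduced here; JSON text is used purely as a stand-in to measure size, and the sample tuple layout is hypothetical.

```python
import json


def build_messages(samples, publish_interval_ms, max_message_size):
    """Group (timestamp_ms, name, value) samples per interval, then split by size."""
    buckets = {}
    for ts, name, value in samples:
        buckets.setdefault(ts // publish_interval_ms, []).append(
            {"name": name, "value": value, "ts": ts}
        )
    messages = []
    for interval in sorted(buckets):
        current = []
        for entry in buckets[interval]:
            candidate = current + [entry]
            if current and len(json.dumps(candidate)) > max_message_size:
                # Adding this entry would exceed the limit: close the current message
                messages.append(json.dumps(current))
                current = [entry]
            else:
                # A single oversized entry is still sent on its own
                current = candidate
        if current:
            messages.append(json.dumps(current))
    return messages
```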

One publisher can have many "Writer Group" and "Variable Dataset Writer" items. A "Variable Dataset Writer" can only be connected to one dataset (Apis OpcUa module) and one "Writer Group", and a dataset can only be connected to one "Variable Dataset Writer".
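These connection rules can be checked mechanically. In the Python sketch below, each Variable Dataset Writer is represented as a (WriterGroupItem, DataSetName) pair, so "one group and one dataset per writer" holds by construction; the function then reports writers pointing at unknown Writer Groups and datasets used by more than one writer. The data layout and item names are hypothetical.

```python
from collections import defaultdict


def find_config_errors(writer_groups, dataset_writers):
    """
    writer_groups: set of Writer Group item names.
    dataset_writers: dict mapping a Variable Dataset Writer name to its
        (WriterGroupItem, DataSetName) pair.
    Returns a list of human-readable rule violations (empty if the setup is valid).
    """
    errors = []
    writers_per_dataset = defaultdict(list)
    for writer, (group, dataset) in dataset_writers.items():
        if group not in writer_groups:
            errors.append(f"{writer}: unknown Writer Group '{group}'")
        writers_per_dataset[dataset].append(writer)
    for dataset, writers in writers_per_dataset.items():
        if len(writers) > 1:
            errors.append(f"dataset '{dataset}' is used by several writers: {sorted(writers)}")
    return errors
```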

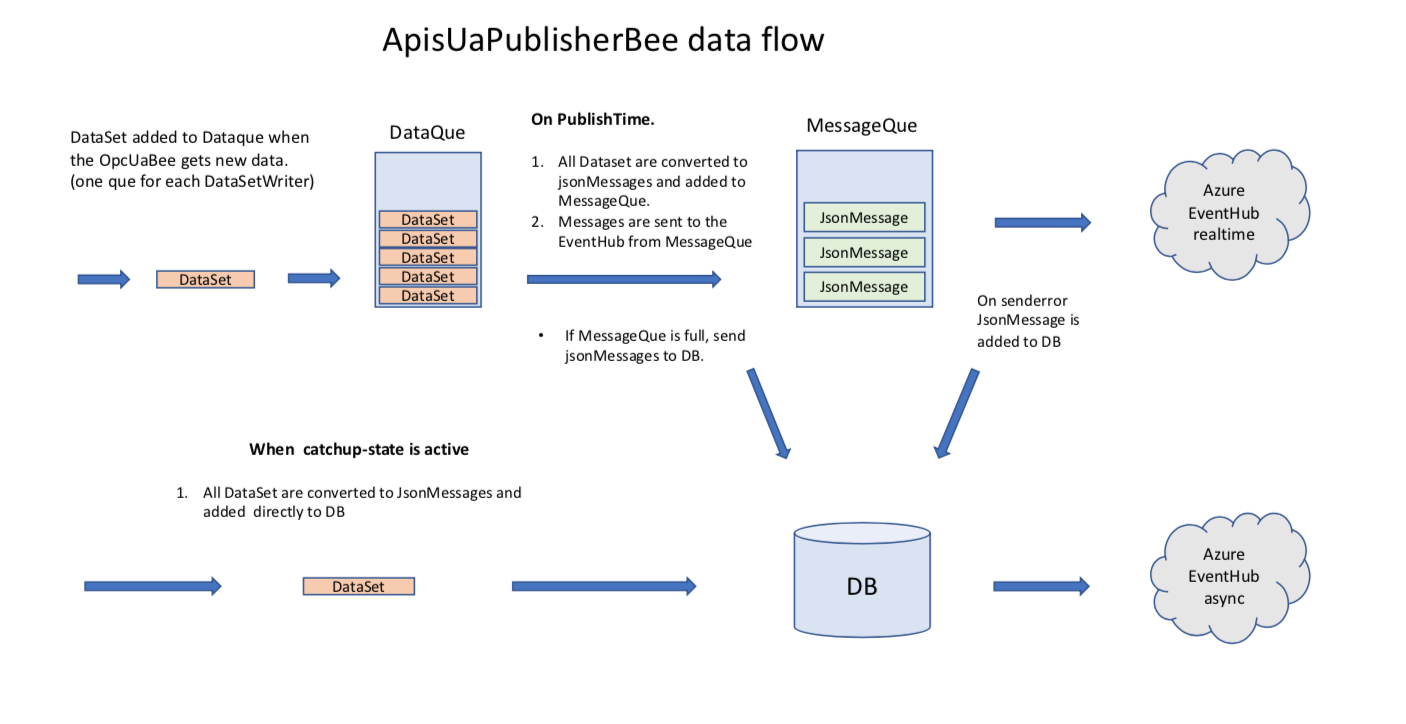

To get an overview of the data flow in a PublisherBee, see the figure in ApisUaPublisherBee.

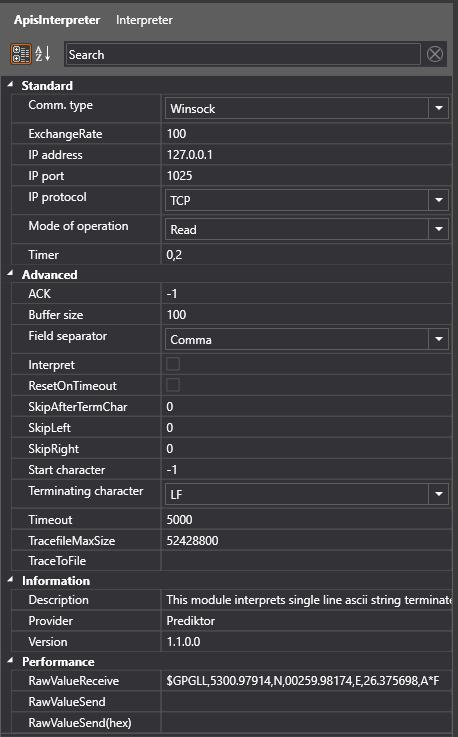

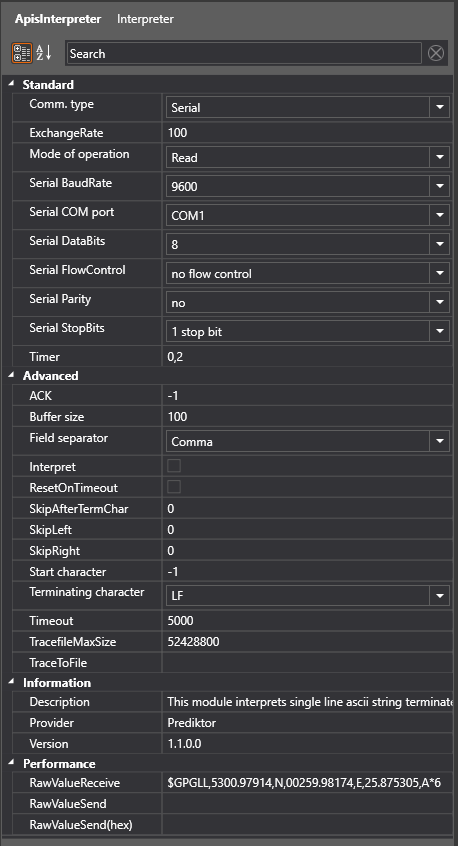

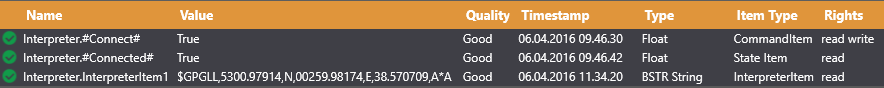

Connect Interpreter module to source

Follow the guide Add Module to Apis Hive, but this time select a module of type ApisInterpreter from the "Module type" dropdown list.

- After adding the module, select the new module named "Interpreter" (or whatever name you chose) from the Solution Explorer.

Basic setup, communication interface

The module supports both serial and TCP/IP interfaces, depending on your server. In the Properties Editor, enter values for:

- TCP/IP based server/source:

  - Comm. type receive: Winsock

  - IP address: IP address of your server.

  - IP Port receive: TCP port of your server.

  - IP Protocol: the protocol of your server, TCP or UDP.

- Serial communication based server:

  - Comm. type receive: Serial

  - Serial Com port: COM port connected to the server.

  - Serial Baud rate: baud rate of your server's serial setup.

  - Serial Data bits: data bits of your server's serial setup.

  - Serial Flow Control: handshake of your server's serial setup.

  - Serial Parity: parity of your server's serial setup.

  - Serial StopBit: stop bits of your server's serial setup.

Further in the Properties Editor:

- Timer: how often this client should poll (send) for new values on the server, in seconds.

Press "Apply" when done.

Mode of operation

Read

When the "Mode of operation" property is set to Read the module will attempt to Read a string from the selected communication port.

Depending on the format of the telegram, various properties must be set in addition to the communication properties.

Assume a device sends a telegram with the following format:

$GPGLL,5300.97914,N,00259.98174,E,26.375698,A*F<CR><LF>

Now follow the guide Add Items to a Module, but this time add items of type "InterpreterItem".

Buffer size:

First, we need to allocate enough buffer space for the telegram. This telegram contains nearly 50 characters, including <CR><LF>.

Setting the Buffer size property to 100 should be adequate.

Terminating character:

The telegram is terminated with <LF> (0x0A, line feed), which means that the Terminating character property should be set to LF. If your terminating character is not in the list, type in the ASCII value of the character (decimal).

These property settings should be enough to receive the "raw" telegram. The module reads from the interface until the terminating character is received or the buffer is full, and then updates the InterpreterItem.
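The framing rule just described (end a telegram at the terminating character, or when the buffer is full) can be sketched in Python as below. This is an illustration of the rule, not the module's code; in particular, whether the module keeps or strips the terminator in the item value is not stated, and the sketch keeps it.

```python
def split_telegrams(stream, terminator=0x0A, buffer_size=100):
    """Cut a byte stream into telegrams at the terminator or when the buffer is full.

    Returns the complete telegrams and any trailing bytes still waiting for a terminator.
    """
    telegrams = []
    buffer = bytearray()
    for byte in stream:                      # iterating over bytes yields ints
        buffer.append(byte)
        if byte == terminator or len(buffer) >= buffer_size:
            telegrams.append(bytes(buffer))  # terminator kept; the module may differ
            buffer.clear()
    return telegrams, bytes(buffer)
```

With the example telegram (49 bytes including CR and LF) and a 100-byte buffer, the LF always arrives first, so each telegram is delivered whole.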

If communication is OK and the server/device is sending, the InterpreterItem tag should be updated.

If we want the module to extract / interpret part(s) of the telegram to different item(s):

Interpret:

Enable the Interpret property.

Field separator:

In this case, the values are separated by commas, so set the Field separator property to Comma.

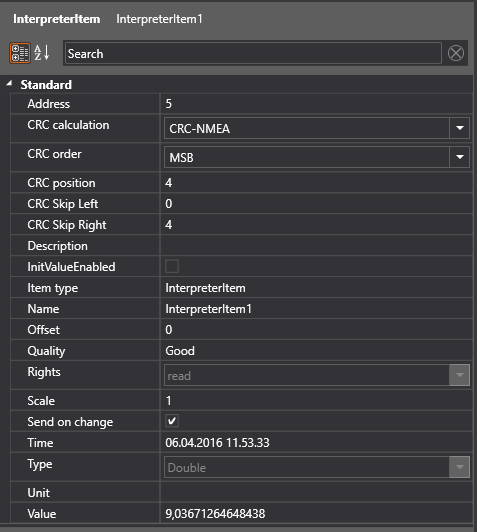

InterpreterItem property

In the property window for the "InterpreterItem", set Address to the field you want to interpret:

$GPGLL,5300.97914,N,00259.98174,E,26.375698,A*F<CR><LF>

| Field | 0 | 1 | 2 | 3 | 4 | 5 | 6 |
|---|---|---|---|---|---|---|---|
| Value | $GPGLL | 5300.97914 | N | 00259.98174 | E | 26.375698 | A |

In this case, set Address to 5.

The InterpreterItem tag should now be updated with the value from field 5 in the telegram.

If other fields need to be extracted, just add more InterpreterItems and set the appropriate Address (field).
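The Address lookup amounts to splitting the telegram on the field separator and taking the zero-based field number, as in this Python sketch. It strips CR/LF but, unlike the table, does not remove the `*F` suffix from field 6; whether the module strips it is not stated in this guide.

```python
TELEGRAM = "$GPGLL,5300.97914,N,00259.98174,E,26.375698,A*F\r\n"


def extract_field(telegram, address, separator=","):
    """Return field number `address` (0-based), after stripping the CR/LF terminator."""
    fields = telegram.rstrip("\r\n").split(separator)
    return fields[address]


print(extract_field(TELEGRAM, 5))  # 26.375698
```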

Write

Follow the guide Add Items to a Module, but this time add items of type "InterpreterSendItem".

In Write mode, the value of the "InterpreterSendItem" is sent to a listening server when:

- The value changes (depending on the "Update only on change" attribute).

- A Command Item that uses this "InterpreterSendItem" as its Parent Item is triggered.

Write->Read

Follow the guide Add Items to a Module, but this time add one item of type "InterpreterSendItem" and one item of type "InterpreterItem"

The value of the "InterpreterSendItem" is sent to a listening server in the same way as in Write mode. The server is assumed to send a response, which ends up in the "InterpreterItem" in the same way as in Read mode.

This mode is useful for triggering a synchronous response (a reading) from a server that only sends data on request.

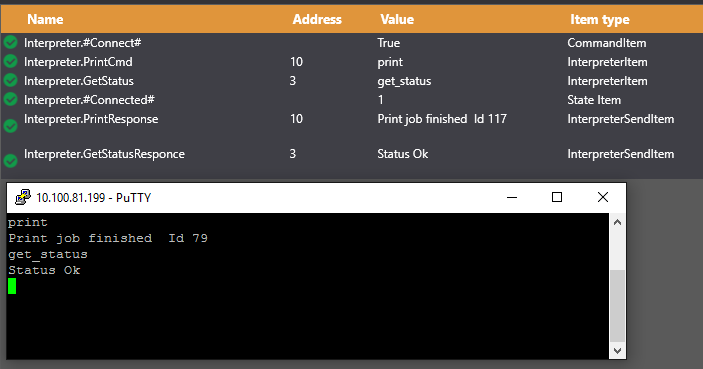

TCP-Server

Follow the guide Add Items to a Module, but this time add items of type "InterpreterSendItem".

In this mode, the module acts as a TCP server that external clients can connect to.

If no "InterpreterItem" is defined, the "InterpreterSendItem" is streamed to a connected client, with no handshake.

If an "InterpreterItem" is defined, it acts as a command identifier: when the server receives data matching the value of the "InterpreterItem", it responds with the value of the "InterpreterSendItem". The Address property is used as the identifier that pairs them.

For instance, the Worker StringFormatter can be used to format the command and response to suit your needs.

The example below shows a server where two commands are defined, "print" and "get_status". The Address property decides which response belongs to which command. When the TCP-Server receives "print" from an external client, it responds with "Print job finished Id 177": "print" matches the value of the "PrintCmd" item, which has Address 10, so the server responds with the value of the SendItem that has Address 10.

In this case, a PuTTY terminal is connected to the server to demonstrate this feature.
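The command/response pairing can be modelled as two lookups: received text to Address (the InterpreterItem values), then Address to reply (the InterpreterSendItem values). The Python sketch below shows this with the standard `socketserver` module so that it can be tried against PuTTY in the same way. The Address and reply for "get_status" are made up, and port 5000 is arbitrary.

```python
import socketserver

# Hypothetical configuration mirroring the example: InterpreterItem value -> Address,
# and InterpreterSendItem value by Address. "get_status" and its reply are made up.
COMMANDS = {"print": 10, "get_status": 20}
RESPONSES = {10: "Print job finished Id 177", 20: "Status: idle"}


def respond(received, commands=COMMANDS, responses=RESPONSES):
    """Return the reply whose Address matches the received command, or None."""
    address = commands.get(received.strip())
    return None if address is None else responses.get(address)


class CommandHandler(socketserver.StreamRequestHandler):
    """Answer each line a connected client sends, e.g. from a PuTTY raw session."""

    def handle(self):
        for raw in self.rfile:
            reply = respond(raw.decode("ascii", errors="replace"))
            if reply is not None:
                self.wfile.write((reply + "\r\n").encode("ascii"))

# To try it (port 5000 is arbitrary):
# socketserver.TCPServer(("0.0.0.0", 5000), CommandHandler).serve_forever()
```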

If there's no connection or data received:

- Use a third-party terminal application, such as PuTTY, to check whether the server is sending telegrams.

- For a TCP/IP based server:

  - Check the firewall settings for the receiving port.

  - Check the network connection to the server (for example, with ping).

- Some devices, such as handheld scanners, send data only occasionally, which can make it difficult to tell whether the device is simply idle or there is a configuration or communication problem.

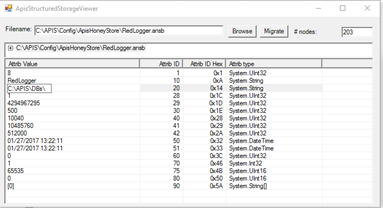

Store

This section gives an introduction to storing time series data with Apis Honeystore. Please pick a topic from the menu.

Store Time Series Data

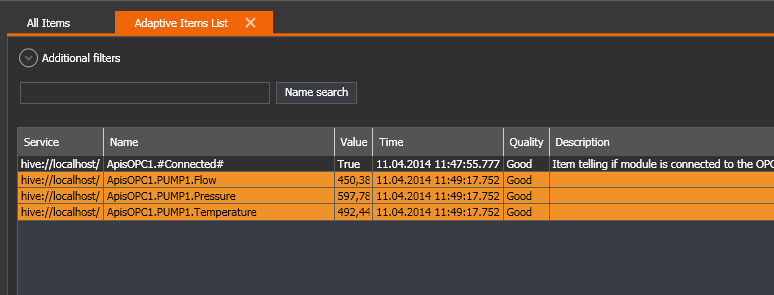

Follow the guide Add Module to Apis Hive, but this time select a module of type ApisLogger from the "Module type" dropdown list.

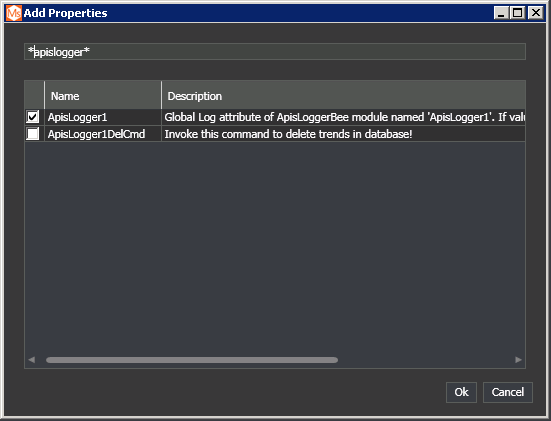

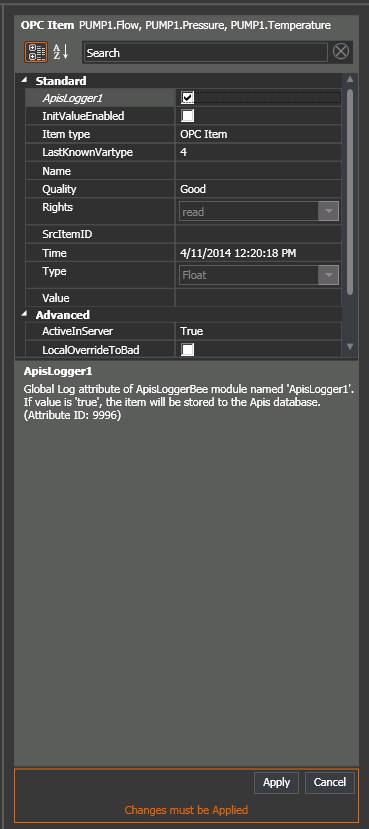

- In the Properties Editor, click on the button "Add Property"

- In the "Add property" dialog, type "*apislogger*" in the filter field.

- Click "Ok"

- In the Properties Editor, check off the new property "ApisLogger1"

- Click "Apply".

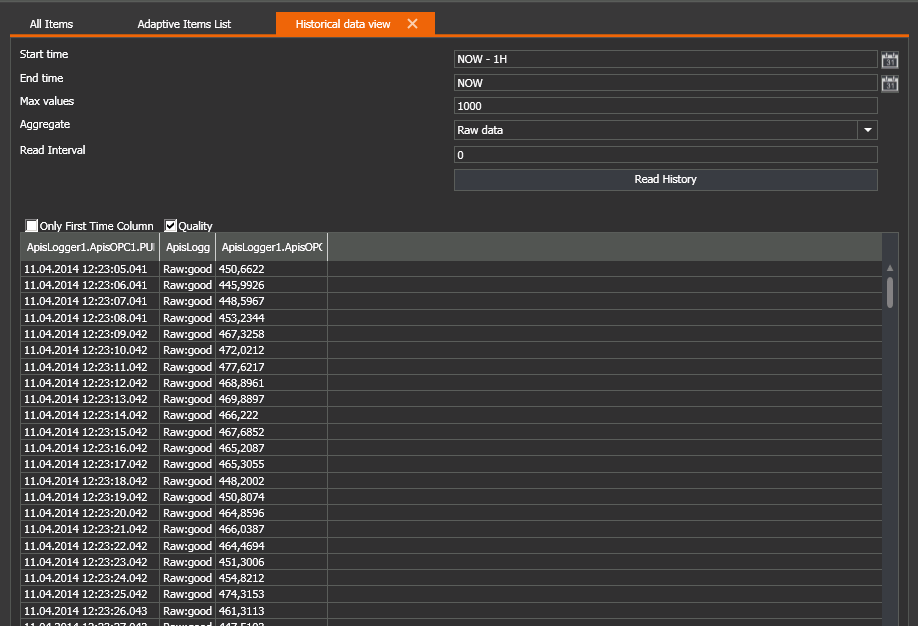

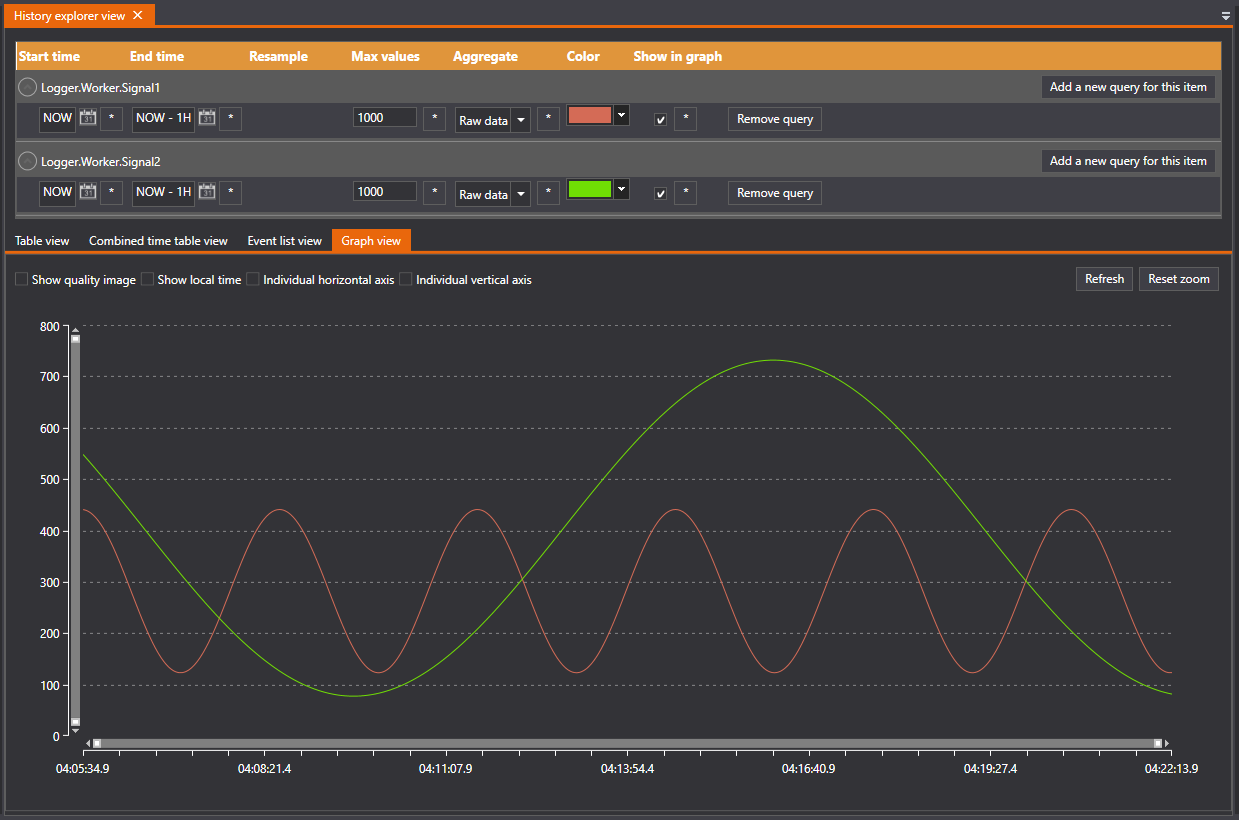

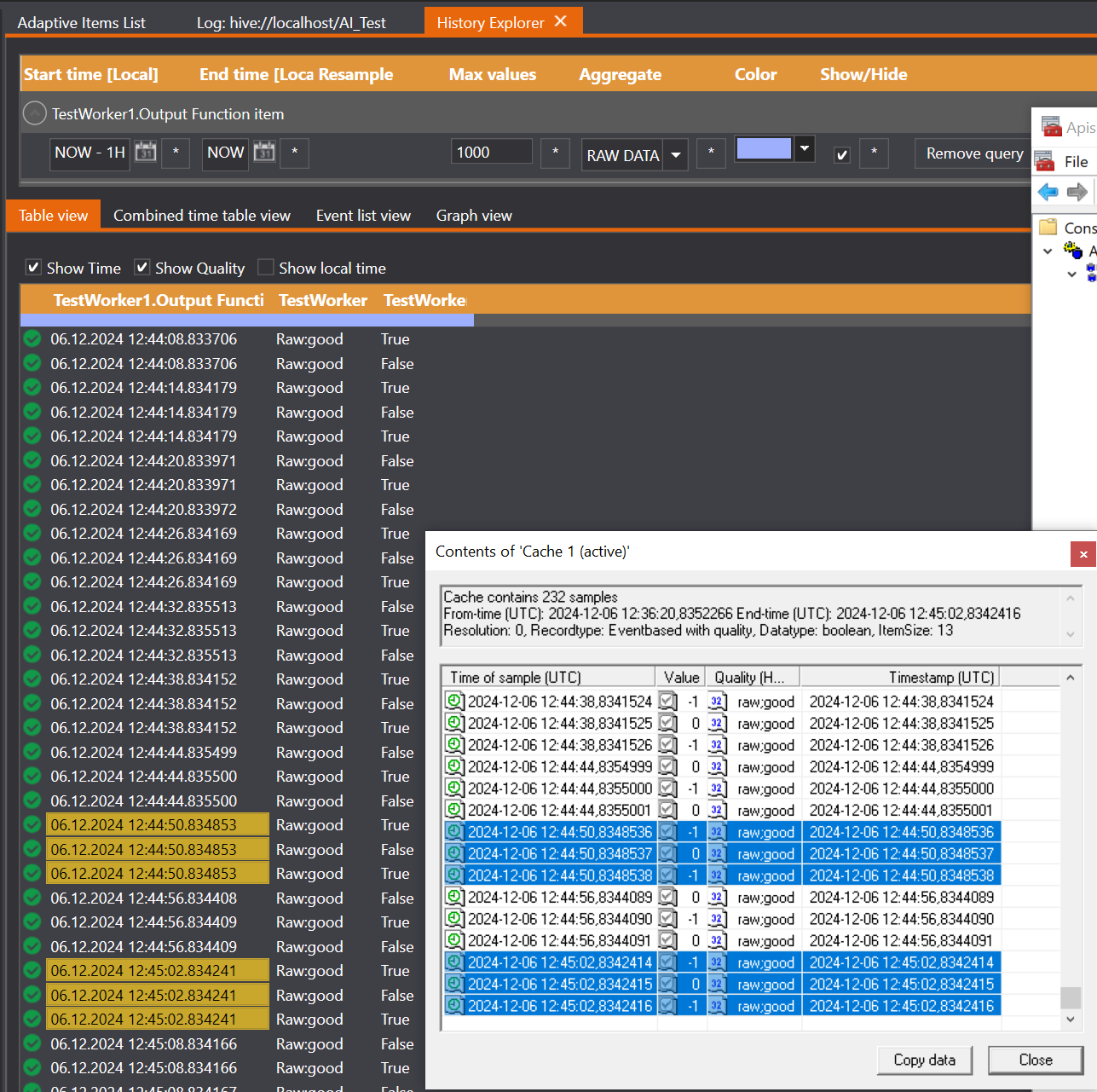

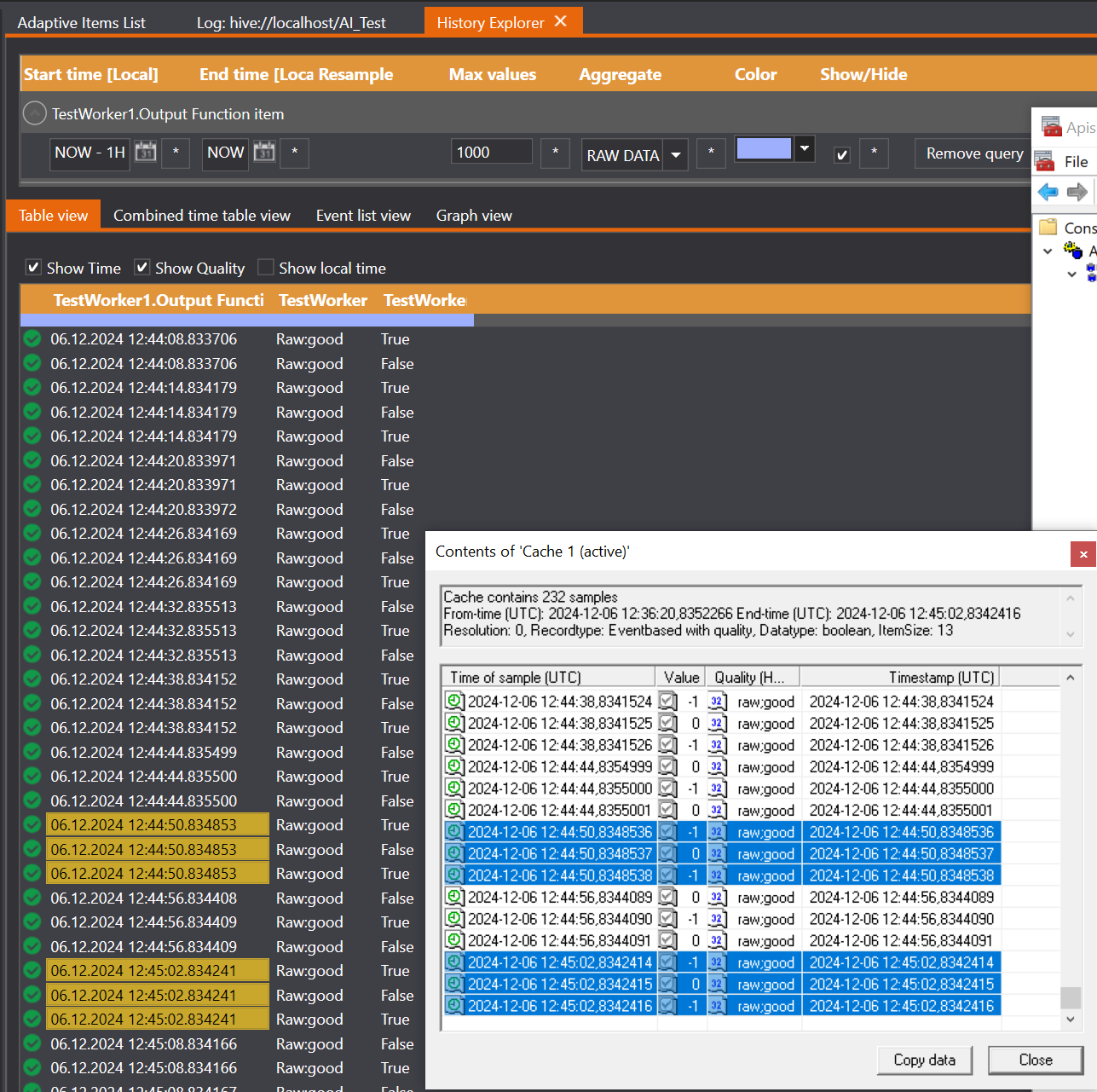

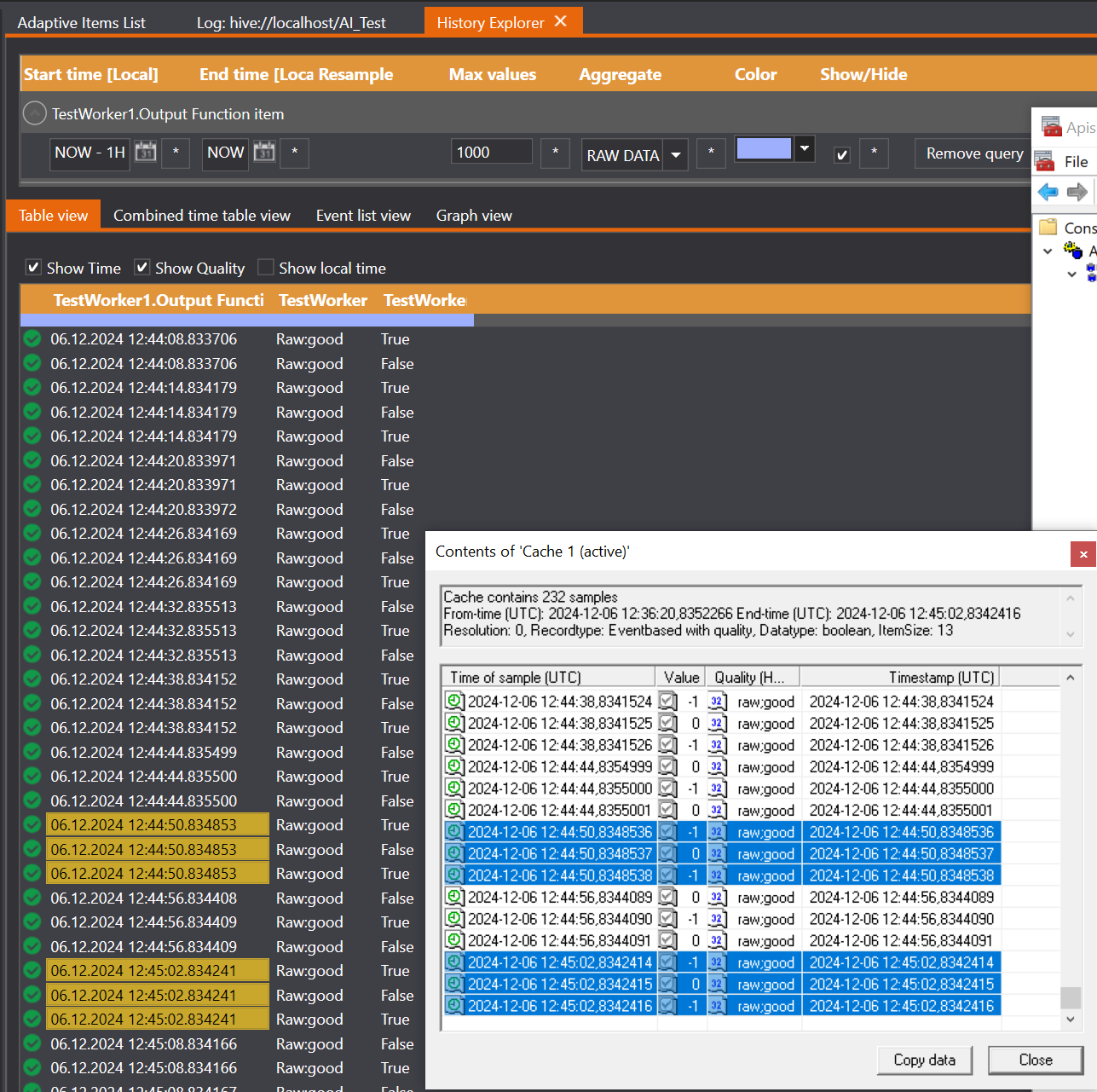

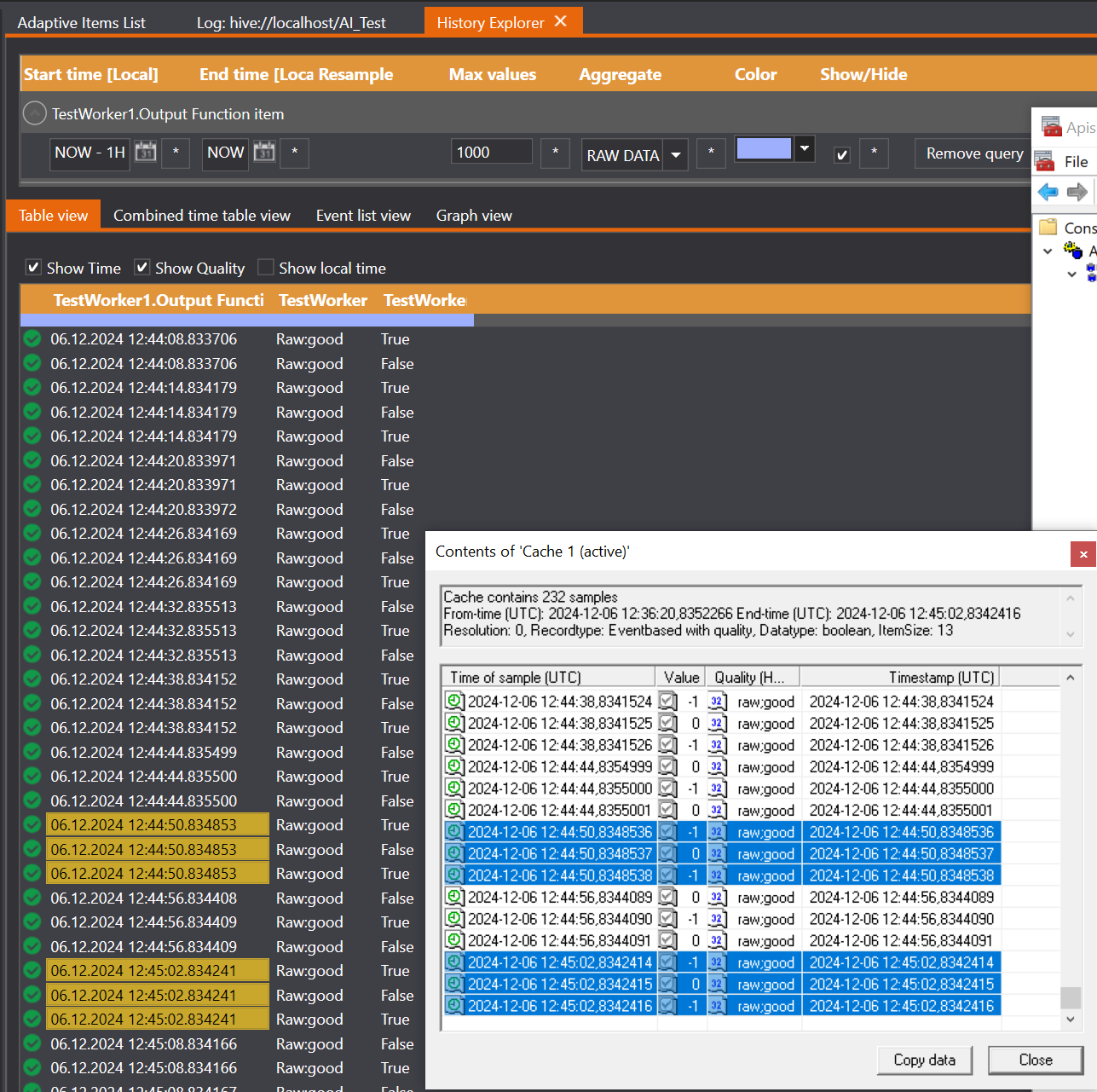

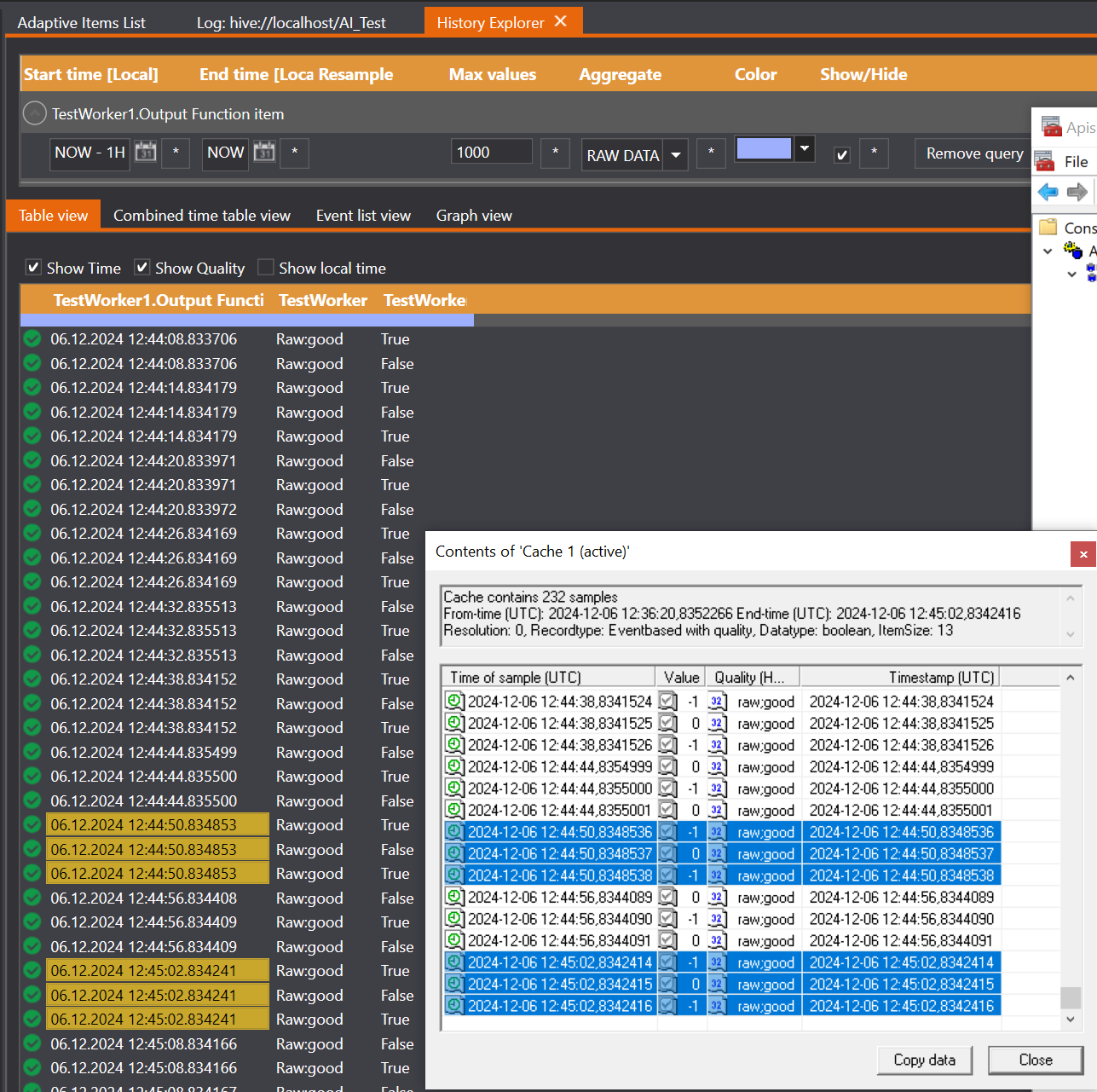

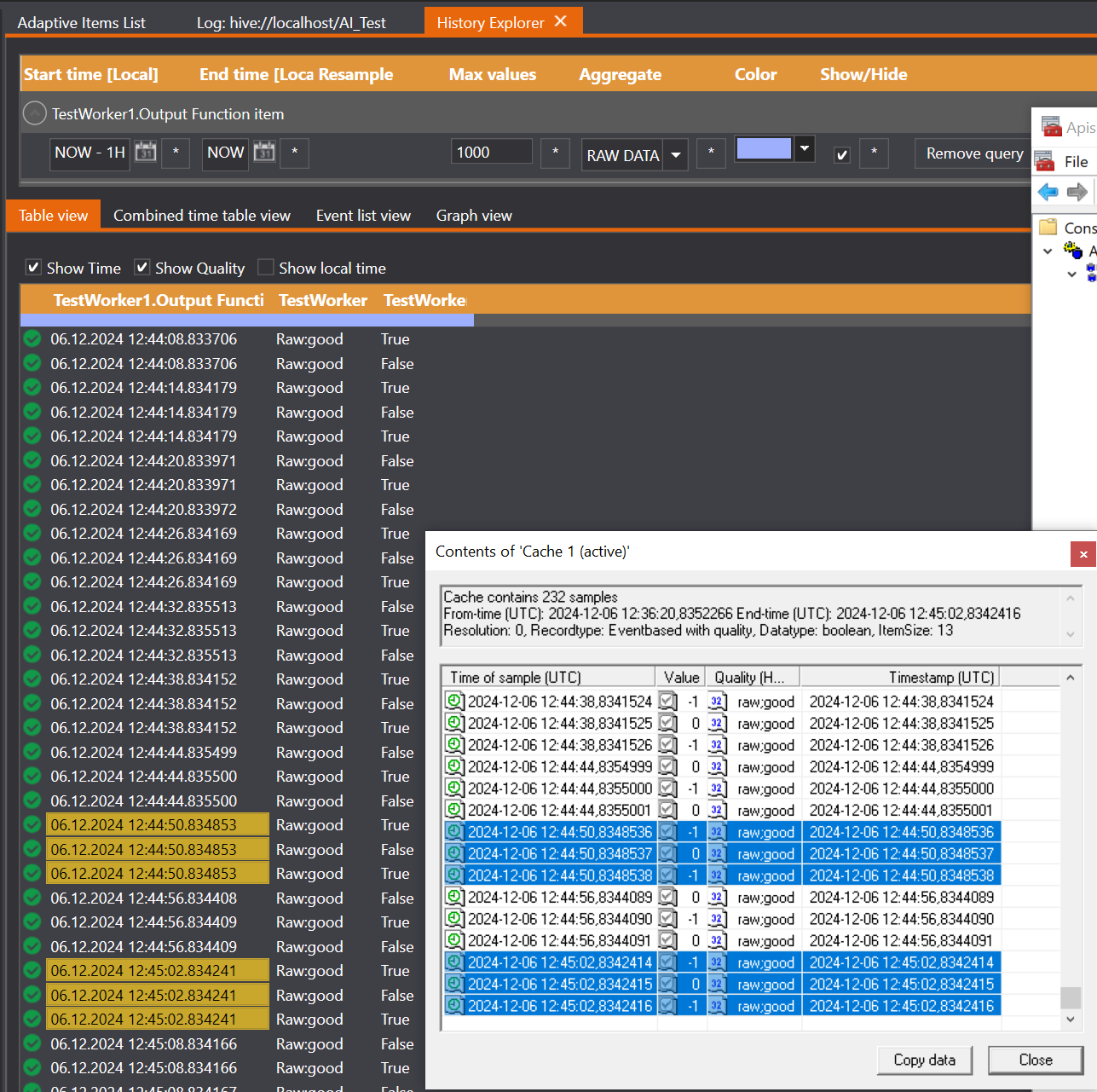

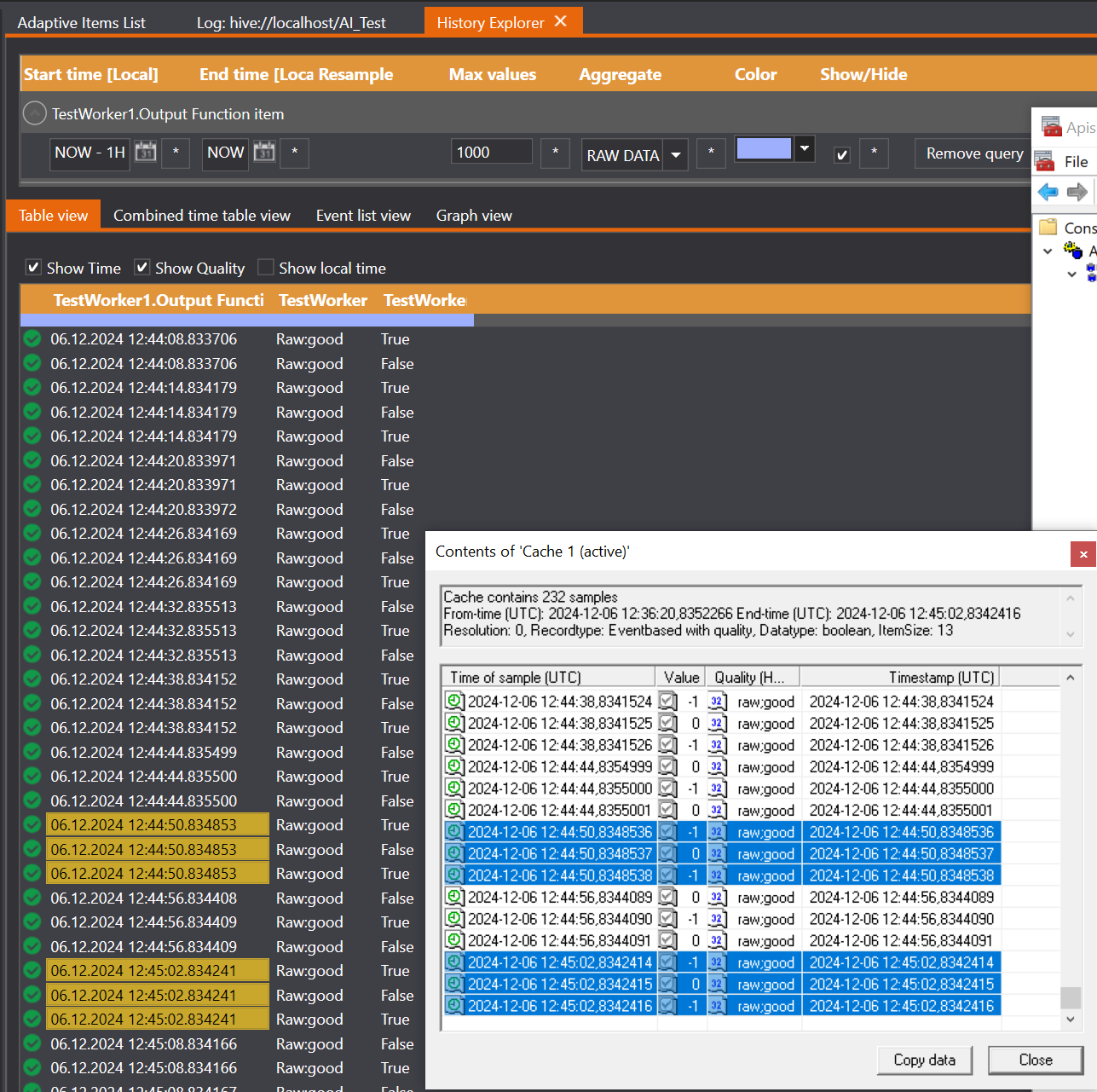

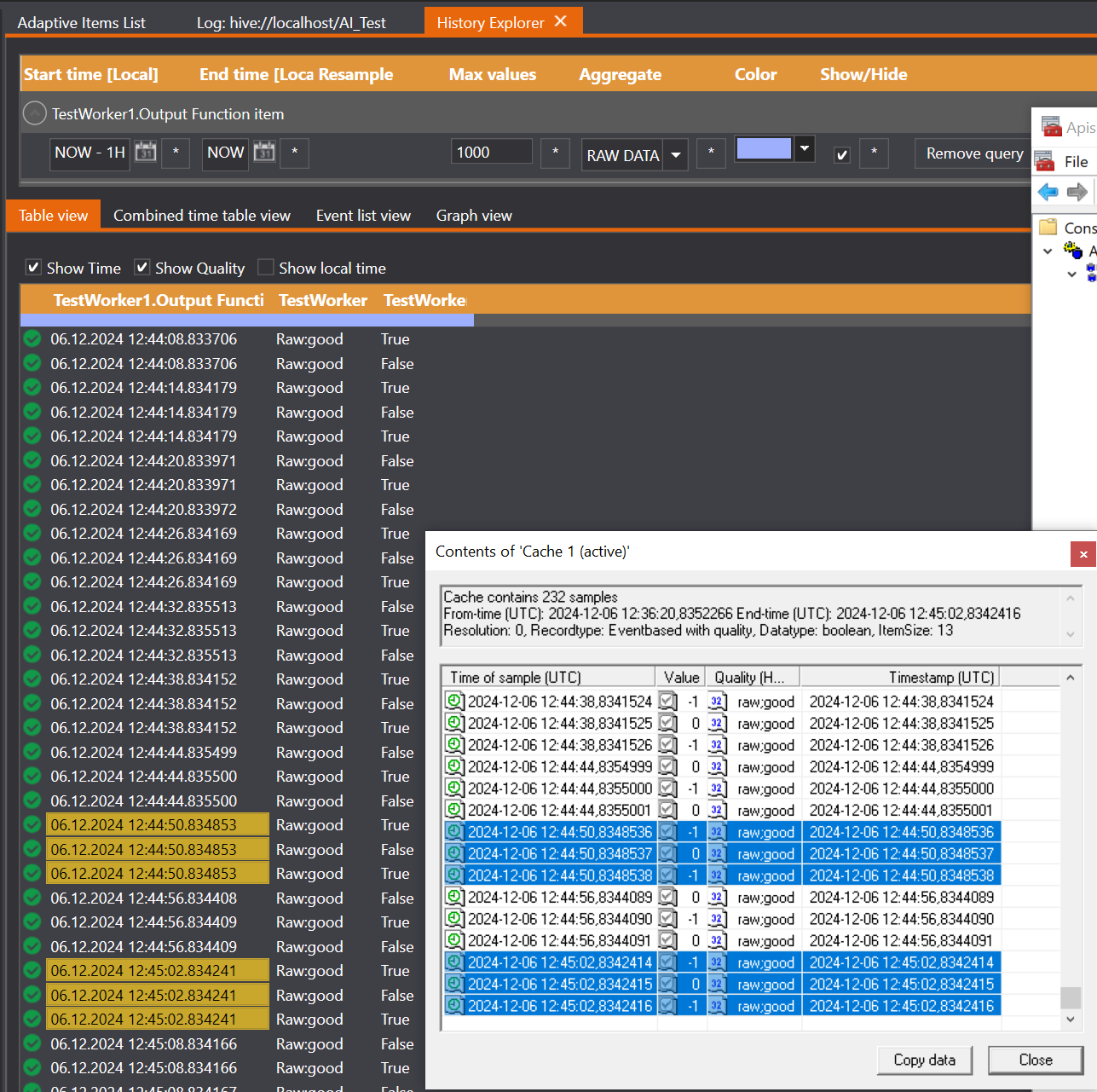

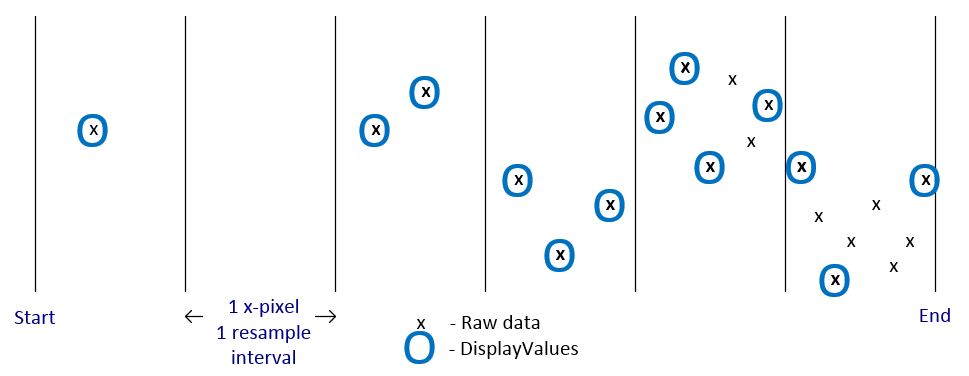

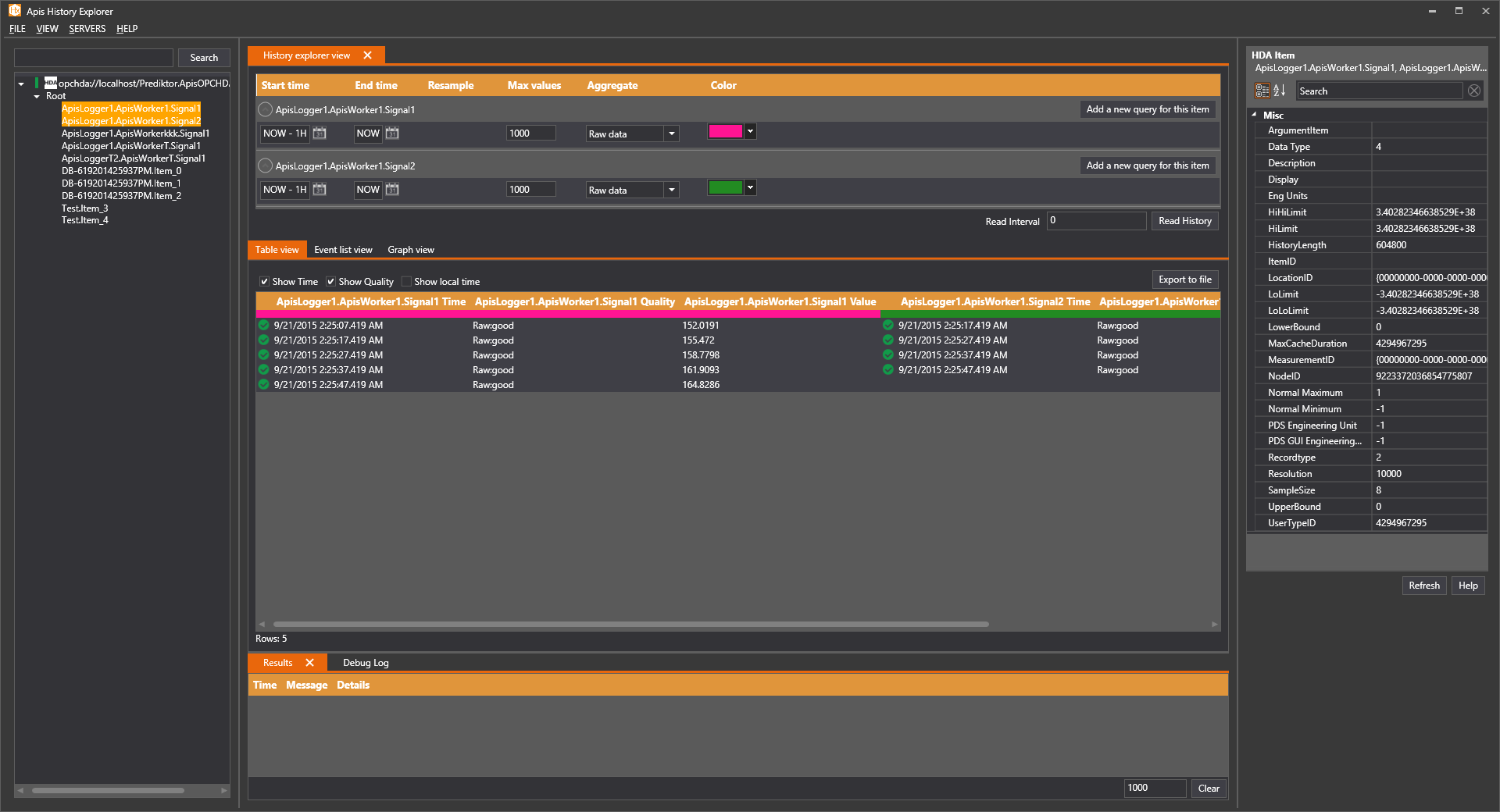

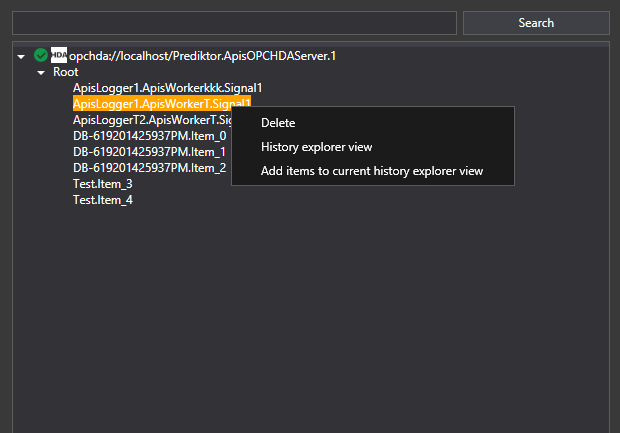

View Time Series Data

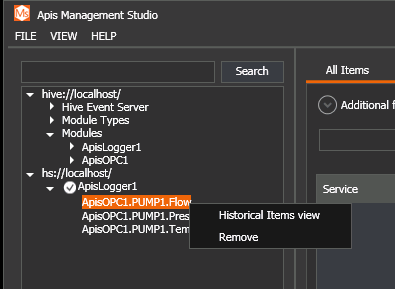

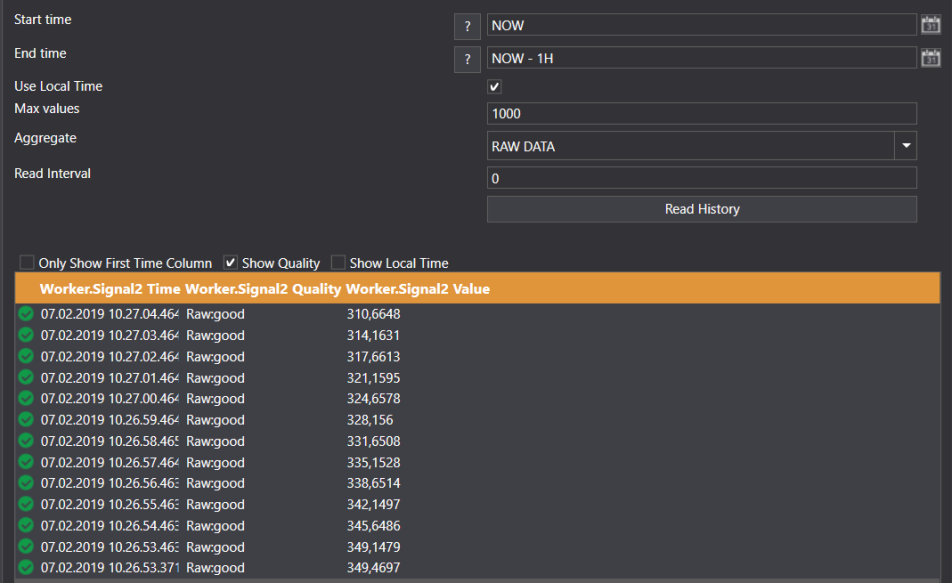

- Open Apis Management Studio and click on the "hs://localhost" instance in Solution Explorer.

- Right-click on the item you want to view and select "Historical Items view"

- In the Historical Items view, select the preferred start time, end time, and aggregate, then click "Read History"

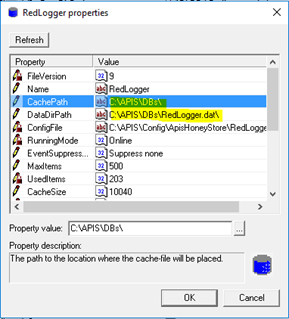

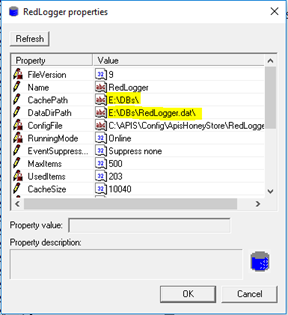

Adding a Database

A database can be added to HoneyStore in AMS by selecting "Create database" in the context menu of the hs://<computer> node.

Set the following fields:

- Database name - the name of the database

- Path - where the database is stored on disk

- Maximum items - the maximum number of items that can be stored in the database

- Cache size - the size of the database cache; see the separate topic on how to calculate a reasonable cache size.

Then click OK to create the new database.

Delete Databases

A database can be deleted in AMS by selecting "Delete" in the context menu of the database node.

When a database is deleted, all historical data contained in it is also deleted.

Typically, the application responsible for storing the data to the database, e.g. the ApisLoggerBee module of Apis Hive, also handles deleting the database.

It's only possible to delete a database in the "Admin" running mode.

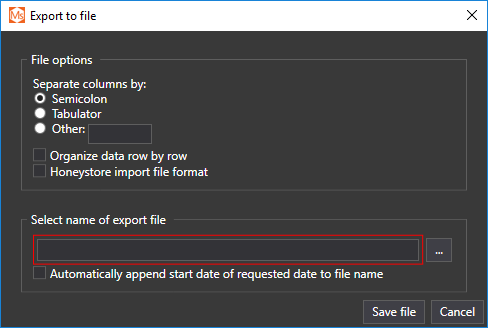

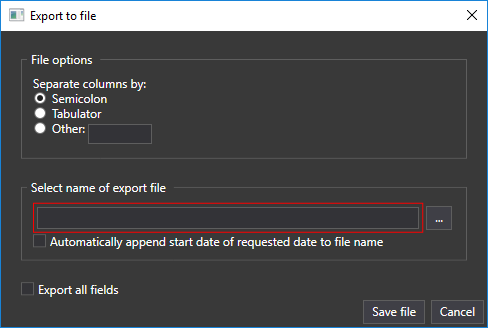

Export Data

The data in a database can be exported to a file in AMS.

It's only possible to export a database in the "Online" and "OnlineNoCache" running modes.

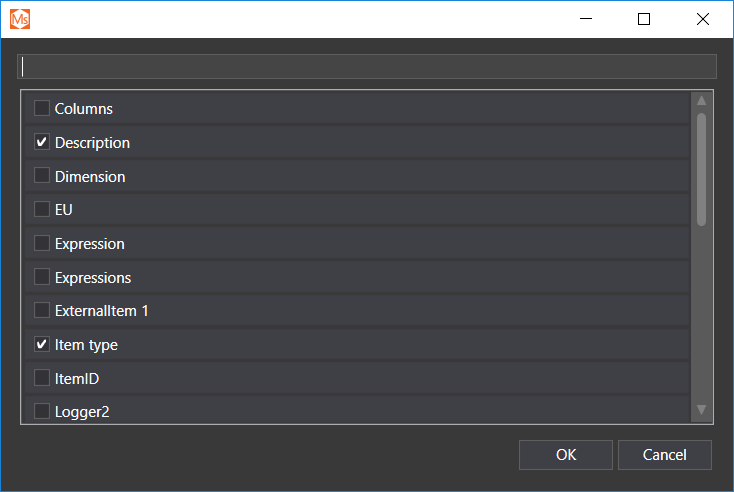

This is done by selecting "Tasks->Export Data" from the context menu of the database node. This will display a dialog box for selecting the items to export.

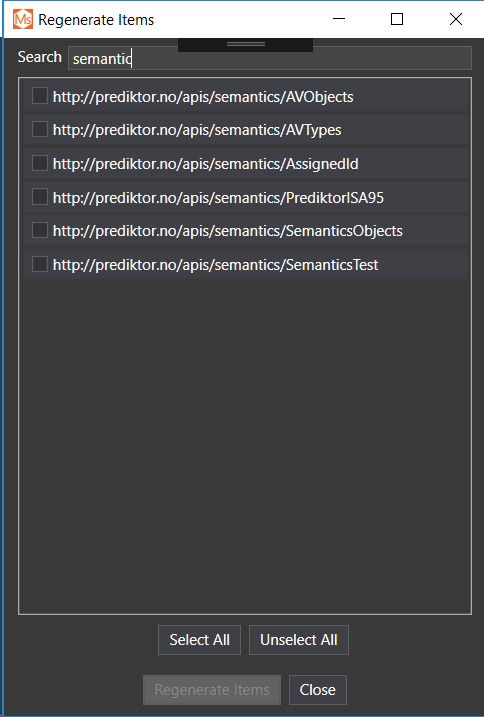

By clicking "Select All", all items will be selected. At the top, there's a filter to find items easily.

When the items which you want to export have been selected, press the "Next" button. This brings up fields for inputting start and end times, and the name of the exported file.

After pressing the "Next" button again, you can start the export by clicking the "Start Export" button. A progress bar shows the progress of the export. When the data has been exported, click the "Close" button to close the dialog box.

Import Data

Data can be imported into existing databases in AMS.

It's only possible to import into a database in the "OnlineNoCache" running mode.

Make sure the database mode is "OnlineNoCache", then select "Tasks -> Import Data" in the context menu of the database node.

This brings up a dialog box where you can select a file and file type. If the file name ends with .ahx, the file is assumed to be binary; the file type can, however, be overridden.

There are two possible file types: Binary and Tab Delimited text files.

Tree Filter

It is possible to filter the content of the tree view for the HoneyStore nodes in AMS. The context menu of the HoneyStore node contains a Filter menu item. Clicking it opens a dialog in which it is possible to:

- Hide disabled databases.

- Hide databases that are in the OnlineNoCache running mode.

- Show only databases that match the naming filter. * is allowed as a wildcard.

- Limit the maximum number of databases displayed.
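The four filter options combine as successive conditions. The Python sketch below models them over a hypothetical list of databases; case-insensitive name matching is an assumption, and `fnmatch` also treats `?` and `[...]` as wildcards, which the AMS filter may not.

```python
from dataclasses import dataclass
from fnmatch import fnmatchcase


@dataclass
class Database:
    name: str
    enabled: bool
    running_mode: str  # e.g. "Online", "OnlineNoCache", "Admin"


def filter_databases(dbs, hide_disabled=False, hide_online_no_cache=False,
                     name_filter="*", max_count=None):
    """Apply the tree-filter options in order and return the databases to display."""
    shown = []
    for db in dbs:
        if hide_disabled and not db.enabled:
            continue
        if hide_online_no_cache and db.running_mode == "OnlineNoCache":
            continue
        # Case-insensitive wildcard match on the name (assumed behaviour)
        if not fnmatchcase(db.name.lower(), name_filter.lower()):
            continue
        shown.append(db)
    return shown if max_count is None else shown[:max_count]
```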

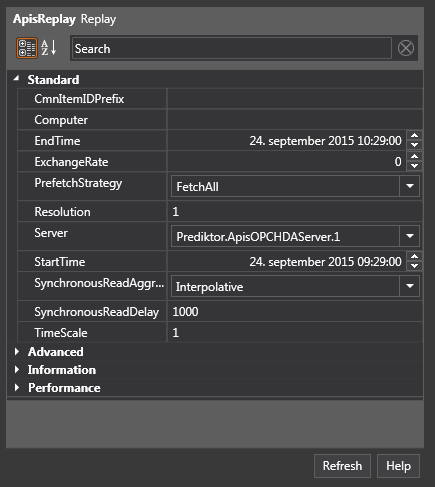

Replay Data

Follow the guide Add Module to Apis Hive, but this time select a module of type ApisReplay from the Module type drop down list.

Three ways of running the Replay module are described here:

- Playing back data from a specific start time to end time at a certain speed.

- Calculating an aggregate using relative start and end times.

- Playing back data, using it as input to another module, and synchronizing the two.

- After adding the module, select the new module named "Replay" from the Solution Explorer.

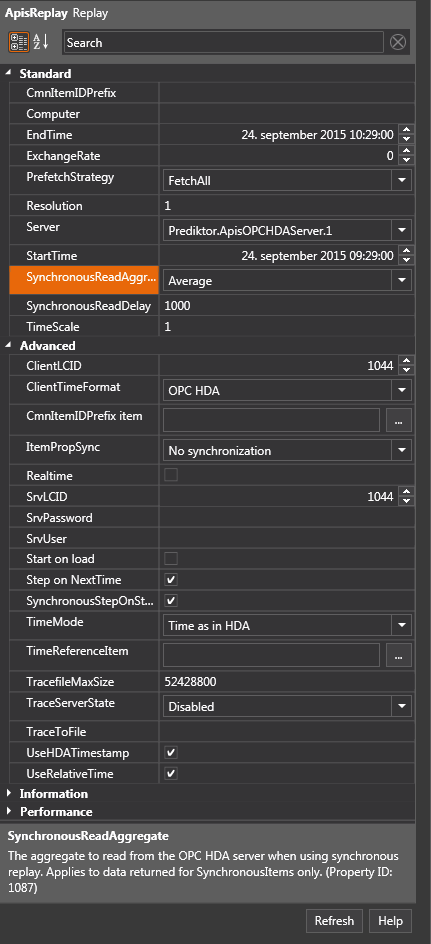

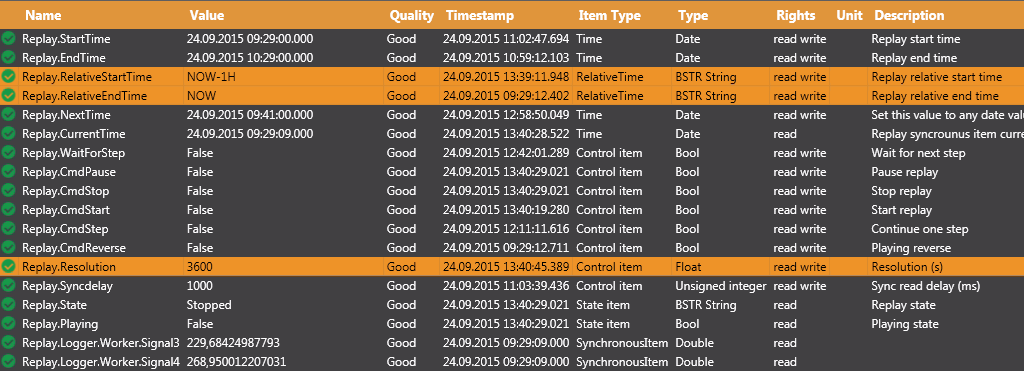

1. Playing back data from a specific start time to end time at a certain speed.

In the Properties Editor, enter values for:

"SynchronousReadDelay": 1000 ms. This property is the delay in milliseconds between reads from the database. Setting this property to 0 might cause system overload.

"Resolution":1 s.

"StartTime" and "EndTime": Choose values where you are sure that database contains data.

"Exchange rate": Exchange rate in milliseconds for updating items from external items.

Press "Apply" when done.

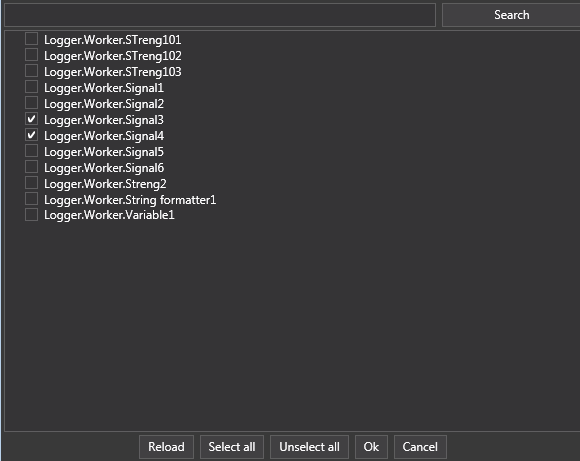

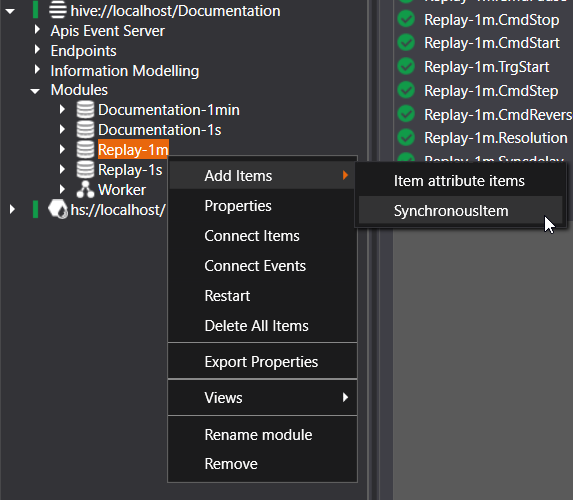

Follow the guide Add Items to a Module, but this time add items of type "SynchronousItem".

- Click the "Browse" button.

- A dialog opens that lets you select items in the database(s). Click "Ok" when done.

- The item list will get new entries showing the added items.

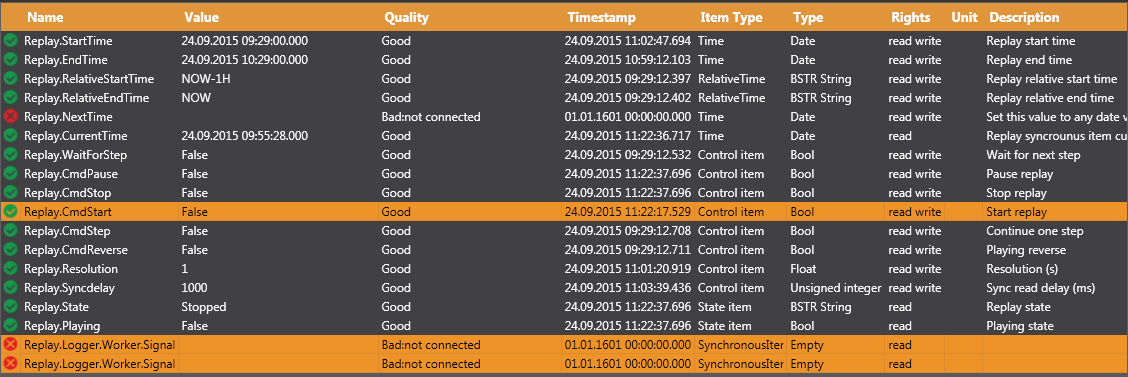

In addition, it shows a number of status and control items, amongst them "Start and End-Time", "Resolution" and "Sync delay". The newly added items show Bad quality because they don't have any data yet.

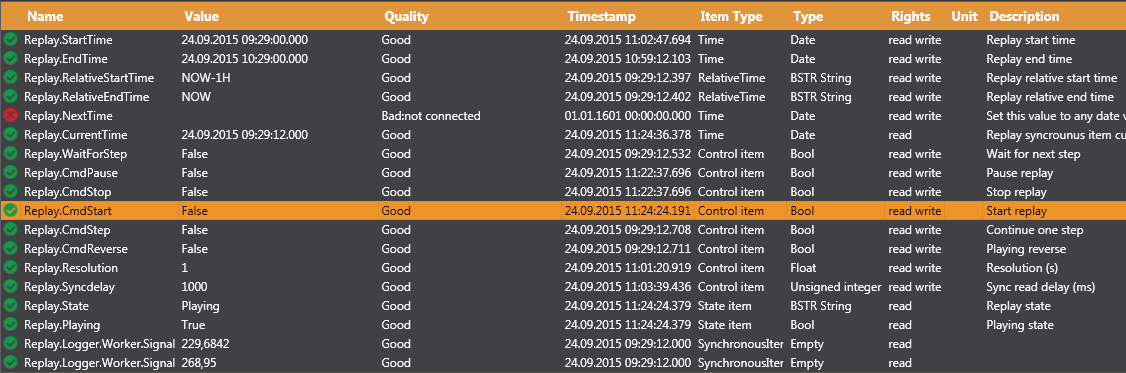

Toggle the "CmdStart" tag to true.

The replay starts. CurrentTime shows the timestamps of the played values; the timestamps of the replayed values depend on the "TimeMode" property.

Controlling the playback:

The speed of the playback can be adjusted with the "Syncdelay" item and the Resolution, depending on the application.

Playback can be paused and resumed by toggling the "CmdPause" item; while paused, you can step the playback by toggling the "CmdStep" item.

If the "Step on NextTime" property is set, you can, while paused, step to a desired time by setting the "NextTime" item to a value between the start and end time.
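To get a feel for how Resolution and Syncdelay interact, here is a back-of-the-envelope model in Python. It assumes that each synchronous read advances the replay by one Resolution step and that reads are separated by the sync delay plus however long the read itself takes; this is an approximation, not a description of the module's internals.

```python
def playback_speed(resolution_s, sync_delay_ms, read_time_ms=0.0):
    """Approximate replay speed as a multiple of real time."""
    cycle_ms = sync_delay_ms + read_time_ms
    if cycle_ms <= 0:
        raise ValueError("a zero delay means reads run back to back (risk of overload)")
    return resolution_s * 1000.0 / cycle_ms


def playback_duration_s(start_s, end_s, resolution_s, sync_delay_ms, read_time_ms=0.0):
    """Approximate wall-clock time needed to replay the span [start, end]."""
    steps = (end_s - start_s) / resolution_s
    return steps * (sync_delay_ms + read_time_ms) / 1000.0
```

With the settings used above (Resolution 1 s, delay 1000 ms), one hour of data replays in roughly real time; raising Resolution to 60 s at the same delay replays it about 60 times faster, at coarser granularity.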

2. Calculating an aggregate using relative start and end times.

In the Properties Editor, Advanced section enter values for:

UseRelativeTime : true

SynchronousReadAggregate : "Average" or any other aggregate suitable for your needs.

We use the same items as in the previous example. In the item list, set the values of "RelativeStart", "EndTime" and "Resolution". In this case, we want to calculate the average over the last hour.

Toggle "CmdStart".

The replayed items will now show the average for the last hour.

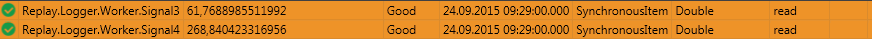

3. Playing back data, using it as input to another module, and synchronizing the two.

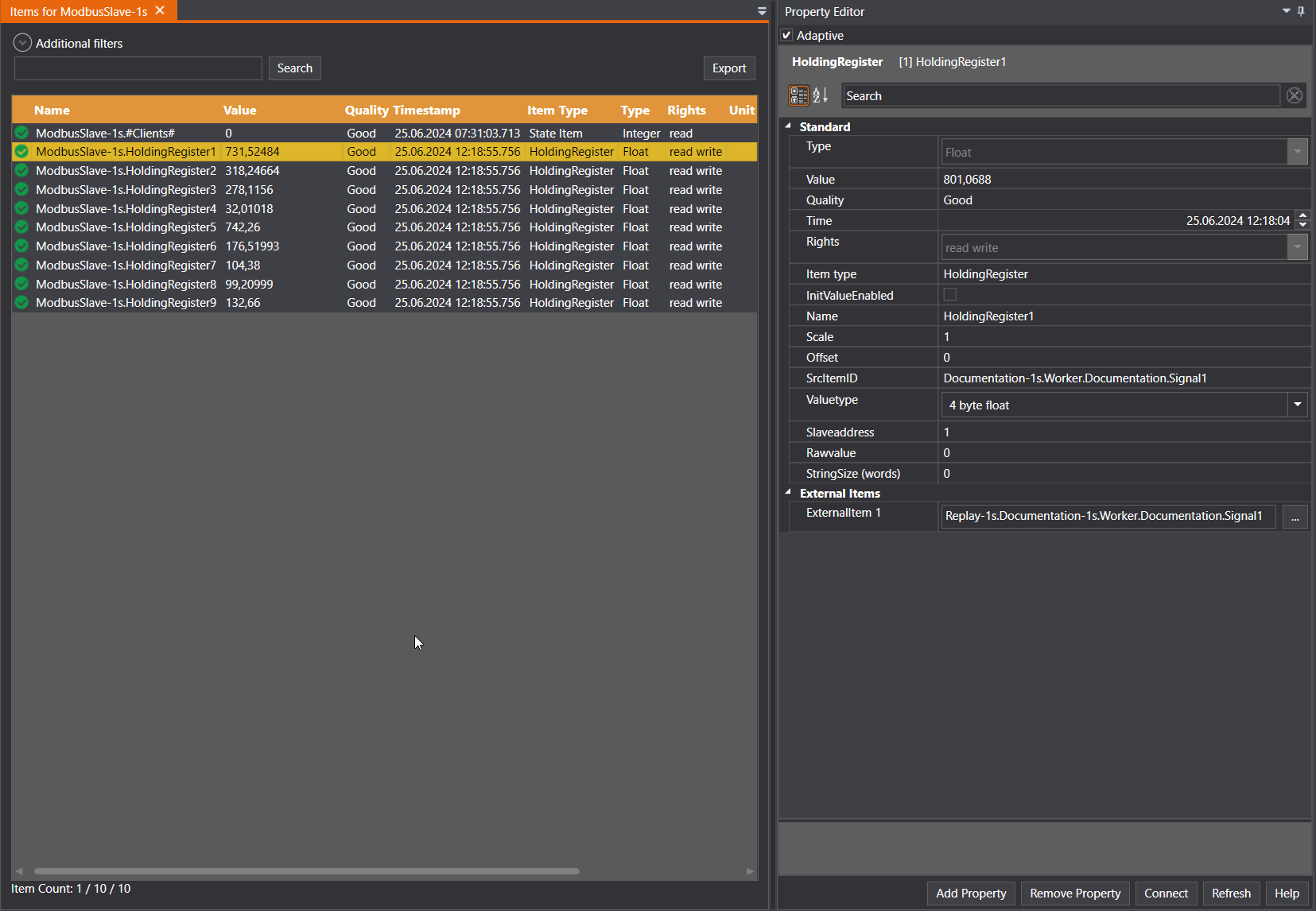

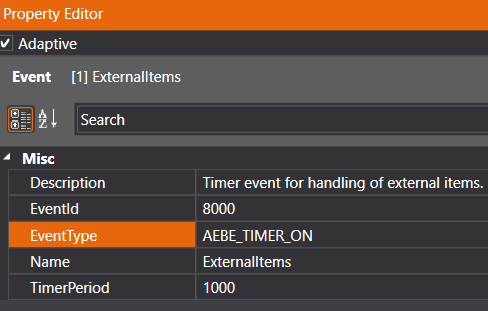

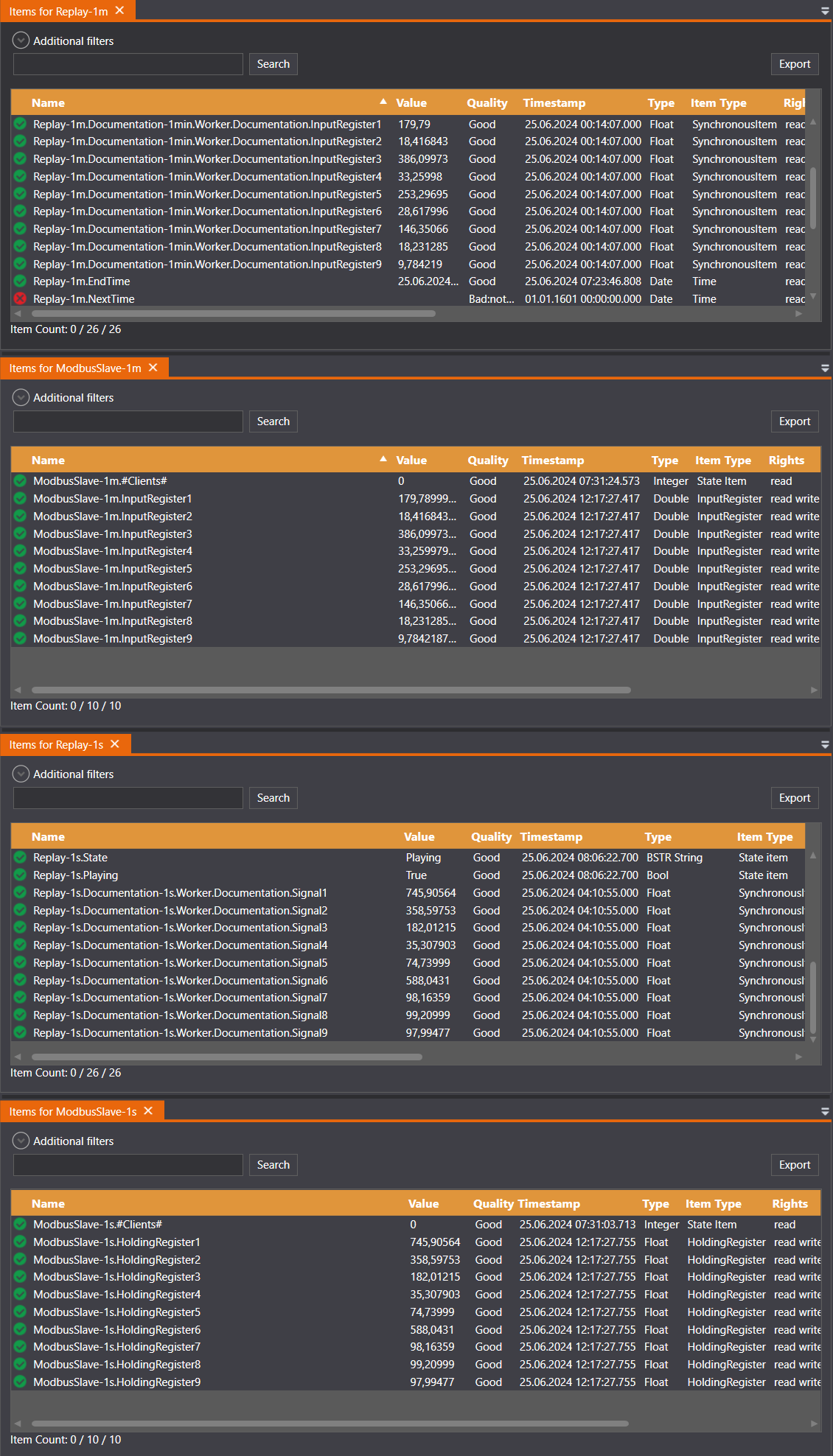

A. Replicate data from a Modbus source stored in HoneyStore database to a ModbusSlave module

Create a new Replay module and add SynchronousItems.

Click browse to open the dialogue screen for selecting database items.

Applying a filter before clicking browse a second time is recommended if you are looking for specific items when working in an environment with large databases (unless it is desired to view all items found in all present databases.) Also note that using Content filter with the box ticked performs filtering as you type and can potentially slow down performance when working with a large amount of items.

IMPORTANT: Both HoneyStore and the OPCHDA service/server must be running for the items to show up in this step.

Once the items have been selected click OK and they will appear in the Replay module.

Create a ModbusSlave module and add the relevant items. In this example, we are using two modules, one with HoldingRegister items and the other with InputRegister items, connected to two separate Replay modules that read values from databases sampled at 1 second and 1 minute intervals.

Right-click an item and select Connect. Find the desired source item in the Replay module and link it to the corresponding destination item in the ModbusSlave module. Click Connect, then OK.

It is important that the SrcItemId in the destination item matches that of the source item (ExternalItem 1). In this case the item name is Documentation-1s.Worker.Documentation.Signal1 (omitting the modulename prefix "Replay-1s").

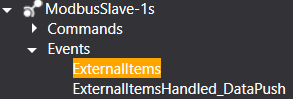

In certain cases, the ExternalItems fail to load on initial startup. Check whether EventType is set to AEBE_TIMER_ON under Module->Events->ExternalItems. If this value is AEBE_TIMER_OFF, changing the module's ExchangeRate to some other value and then back to the desired rate will fix this issue.

In this example setup the Resolution is set to 1 and SynchronousReadAggregate is Interpolative zero-order, resulting in the values being changed every minute given that the database only contains new values with 1 minute intervals.

In the Property editor under "Information" the StartTime and EndTime of the two buffers are displayed. These buffers are determined by the MajorSubInterval property in seconds, which divides the entire timespan given by StartTime, EndTime and Resolution into smaller chunks, in this case 1 hour intervals (3600 seconds).

If the amount of data required exceeds the available memory, the module will calculate the largest possible MajorSubInterval and override the property before starting. If MajorSubInterval is set to 0, the entire timespan will be used as one buffer if there is enough memory available; if not, the largest possible MajorSubInterval will be used.
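The buffering described above can be pictured as cutting the replay span into MajorSubInterval-sized chunks. The Python sketch below does that and also estimates the largest chunk that fits a memory budget, assuming memory is measured in samples and each chunk holds one sample per item per Resolution step; the module's real memory accounting is not documented here.

```python
def sub_intervals(start_s, end_s, major_sub_interval_s):
    """Split [start, end) into buffer chunks; 0 means one buffer for the whole span."""
    if major_sub_interval_s <= 0:
        return [(start_s, end_s)]
    chunks = []
    t = start_s
    while t < end_s:
        chunks.append((t, min(t + major_sub_interval_s, end_s)))
        t += major_sub_interval_s
    return chunks


def largest_sub_interval(max_samples_in_memory, resolution_s, item_count):
    """Longest chunk (in seconds) whose samples for all items fit in the budget."""
    steps_per_chunk = max_samples_in_memory // item_count
    return steps_per_chunk * resolution_s
```

For example, a two-hour span with MajorSubInterval 3600 gives two one-hour buffers, matching the 1 hour intervals in the example above.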

Note that the data Type might change depending on the SynchronousReadAggregate and Resolution. In this example the values from the 1 second database are being passed on as their original valuetype (Float) given that the SynchronousReadAggregate is set to "Interpolative zero-order", but the values from the 1 minute database gets converted to Double since SynchronousReadAggregate is set to "Average" and Resolution is 60.

B. Synchronize playback with another module

This involves another module consuming data from the Replay module. This is beyond the scope of the Quick Start Guide, but here is a simple example.

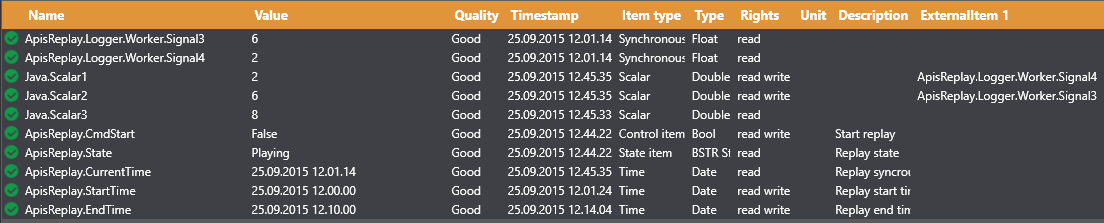

A simulation module is required; in this case, a Java module connected to a simple ModFrame application. The ModFrame application has two input signals, Scalar1 and Scalar2, and one output signal, Scalar3. The playback items ApisReplay.Logger.Worker.Signal3 and ApisReplay.Logger.Worker.Signal4 are used as inputs to Java.Scalar1 and Java.Scalar2.

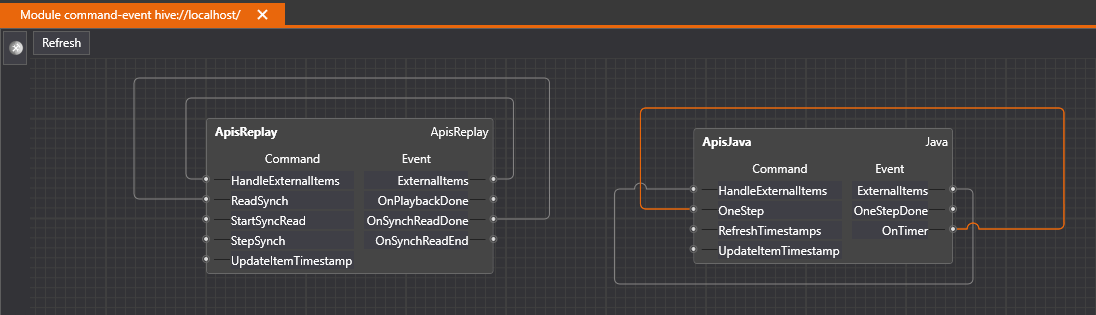

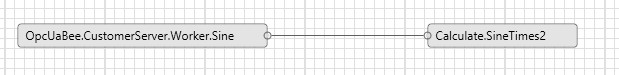

To synchronize these two modules, Java and ApisReplay, we have to use the internal Commands and Events mechanism in Apis. The image below shows the default configuration for these two modules.

In the Replay module, the OnSyncReadDone event notifies that a synchronous read operation has finished and new data is ready on the replayed items. In the default configuration, this event is connected to the ReadSync command, which immediately initiates a new synchronous read operation (a loop).

In the Java module, the OnTimer event is fired at the rate given by the module property Timerperiod. It is connected to the OneStep command, which calls the OnOneStep() Java method; when OnOneStep() returns, the module fires the OneStepDone event to indicate that the method has finished. With this configuration, the two modules run independently of each other.

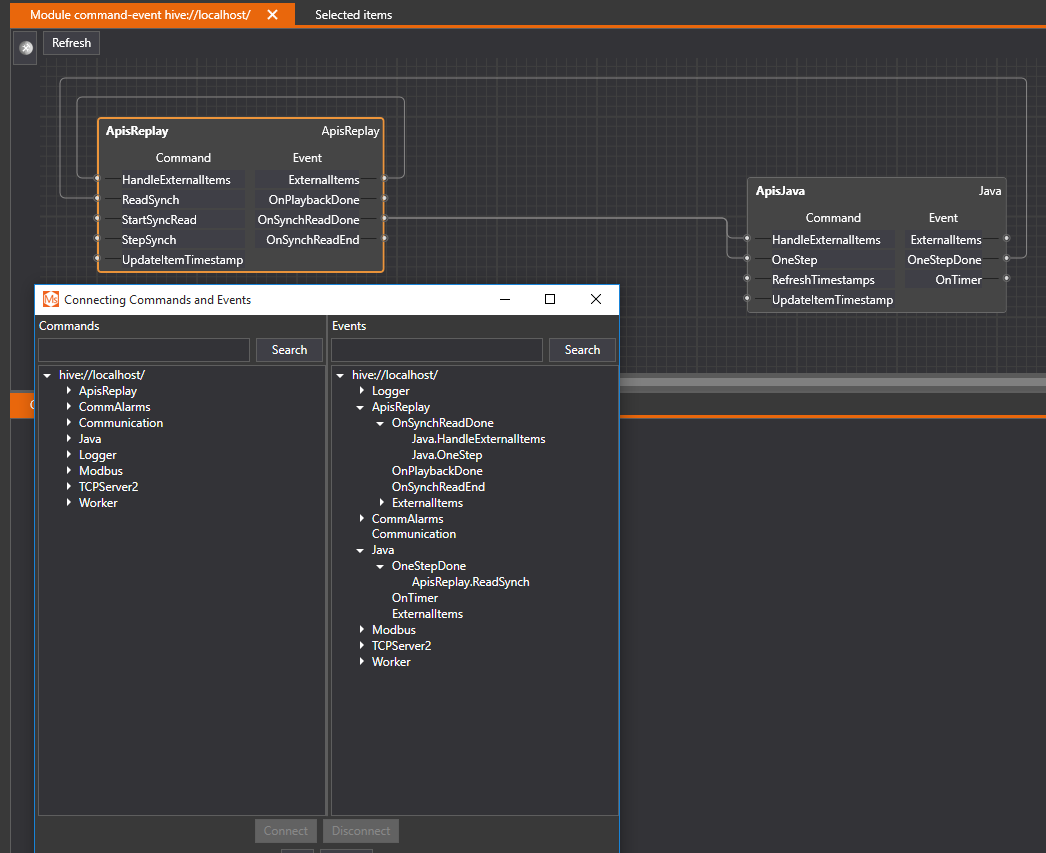

In the next image, we have changed the configuration: when the Replay module has new data (OnSyncReadDone), it notifies the Java module first to read its inputs (HandleExternalItems) and then to run a step (OneStep). When Java has finished its calculation (which could take some time if the application were an advanced simulator), it fires OnStepDone, which triggers ReadSync in the Replay module. We now have a loop in which the two modules are synchronized.
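The synchronized wiring can be simulated in a few lines of Python to see why the loop neither races ahead nor stalls. In the sketch below, a small queue-based dispatcher stands in for Apis Commands and Events (the `EventBus`, `Replay` and `Simulator` classes are hypothetical, and the "simulator" simply adds its two inputs); the event and command names follow the text.

```python
from collections import deque


class EventBus:
    """Stand-in for Apis Commands and Events: fired events are queued, then dispatched."""

    def __init__(self):
        self.routes = {}          # event name -> list of command callables
        self.pending = deque()

    def connect(self, event, *commands):
        self.routes.setdefault(event, []).extend(commands)

    def fire(self, event):
        self.pending.append(event)

    def run(self):
        # Dispatch until nothing is pending (the loop ends when replay runs out of data)
        while self.pending:
            for command in self.routes.get(self.pending.popleft(), []):
                command()


class Replay:
    def __init__(self, bus, samples):
        self.bus, self.samples, self.current = bus, list(samples), None

    def read_sync(self):
        # ReadSync command: publish the next sample, then fire OnSyncReadDone
        if self.samples:
            self.current = self.samples.pop(0)
            self.bus.fire("OnSyncReadDone")


class Simulator:
    def __init__(self, bus, replay):
        self.bus, self.replay, self.inputs, self.outputs = bus, replay, None, []

    def handle_external_items(self):
        self.inputs = self.replay.current            # read Scalar1, Scalar2

    def one_step(self):
        scalar1, scalar2 = self.inputs
        self.outputs.append(scalar1 + scalar2)       # placeholder model producing Scalar3
        self.bus.fire("OnStepDone")


bus = EventBus()
replay = Replay(bus, [(1, 2), (3, 4), (5, 6)])
sim = Simulator(bus, replay)
# Synchronized wiring: new data -> read inputs and step; step done -> next read
bus.connect("OnSyncReadDone", sim.handle_external_items, sim.one_step)
bus.connect("OnStepDone", replay.read_sync)
replay.read_sync()
bus.run()
print(sim.outputs)  # [3, 7, 11]
```

If the OnStepDone to ReadSync connection is missing, the loop stops after the first sample, which is one way the "Hangs" symptom below can arise.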

Troubleshooting

No data: Check the start and end times. Is there any data in that time period?

Hangs: Check the Commands and Events loop.

Process

This section gives an introduction to adding alarms, calculations and email notifications. Please pick a topic from the menu.

Hive Worker Module

How to configure worker

This section gives an introduction to configuring the worker module. Please pick a topic from the menu.

How to configure Signal item

This example shows you how to add a Signal item, which is used to generate different types of signals automatically. Seven signal types are supported:

- Sine

- Triangle

- Sawtooth

- Square

- Random

- PeriodicRandom

- Counter

The item type that supports this functionality is Signal, on the ApisWorker module.

Add worker module

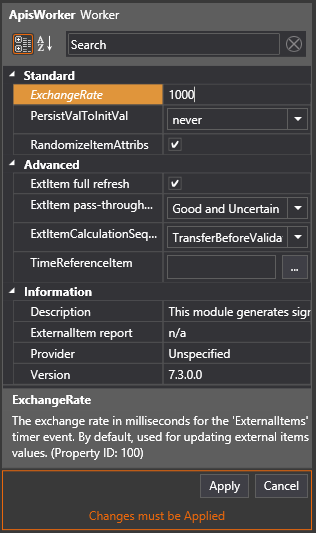

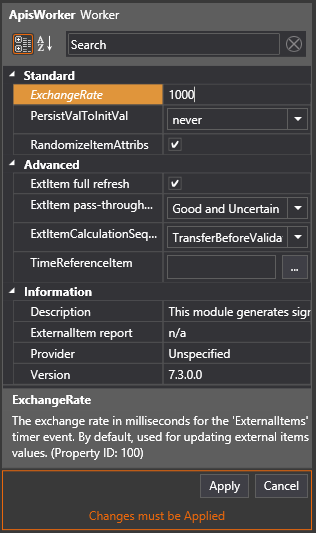

Follow the guide Add Module to Apis Hive to add a module of type ApisWorker to an Apis Hive Instance.

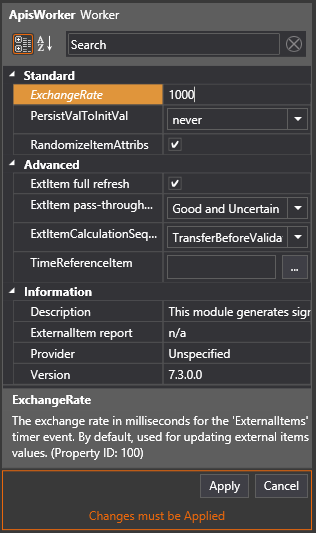

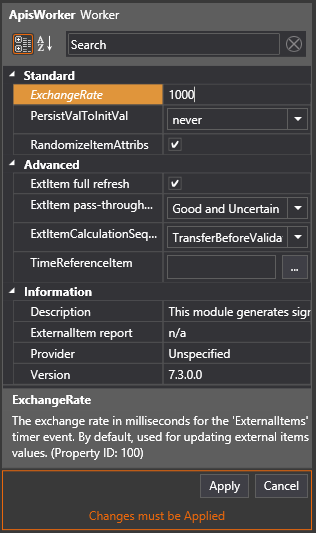

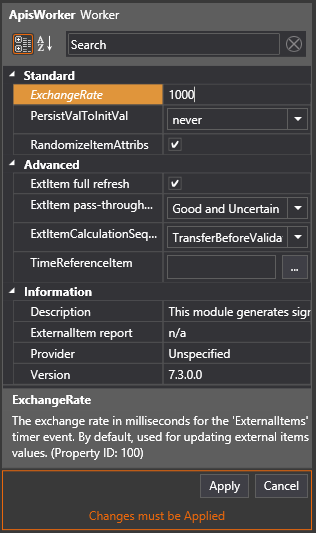

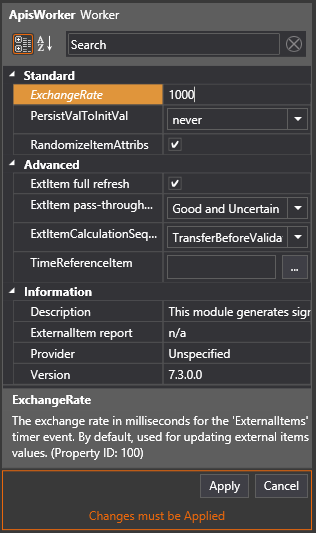

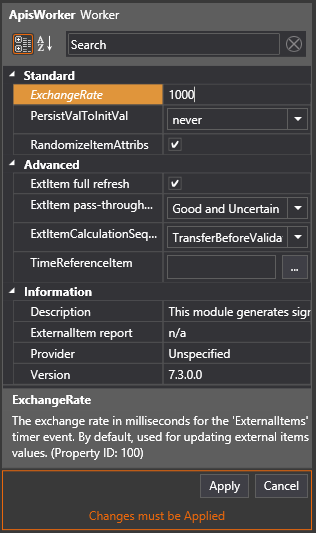

- After adding the module, select the new module named "Worker" from the Solution Explorer.

- Set the "ExchangeRate" property to e.g. 1000 ms. This is the update rate when this module exchanges data with other modules.

- Click on Apply

Add item type Signal

- Follow the guide Add Items to a Module, but this time select item type "Signal"

- Add 2 items by setting Count to 2

- Click 'Add item(s)'

- Click Ok

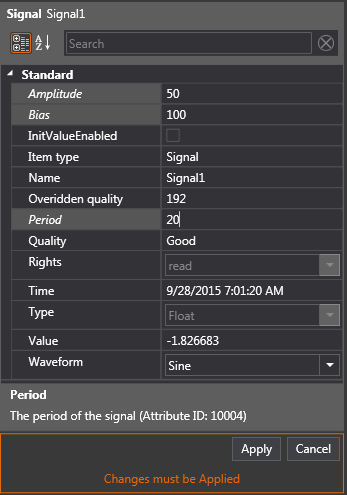

Configure item Signal1 as signal type Sine

This will show you how to configure a signal that generates a sine curve with an amplitude of 50, an offset (or bias) of 100 and a period of 20 seconds.

- Select the item Signal1

- In the attribute window select the attribute Waveform and select the value Sine

- In the attribute window select the attribute Amplitude and set the value to 50

- In the attribute window select the attribute Bias and set the value to 100

- In the attribute window select the attribute Period and set the value to 20

- Click Apply

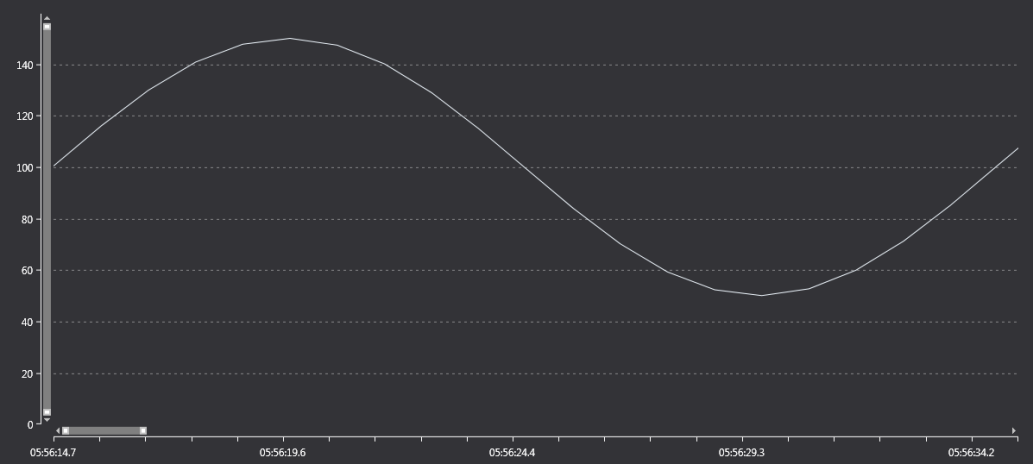

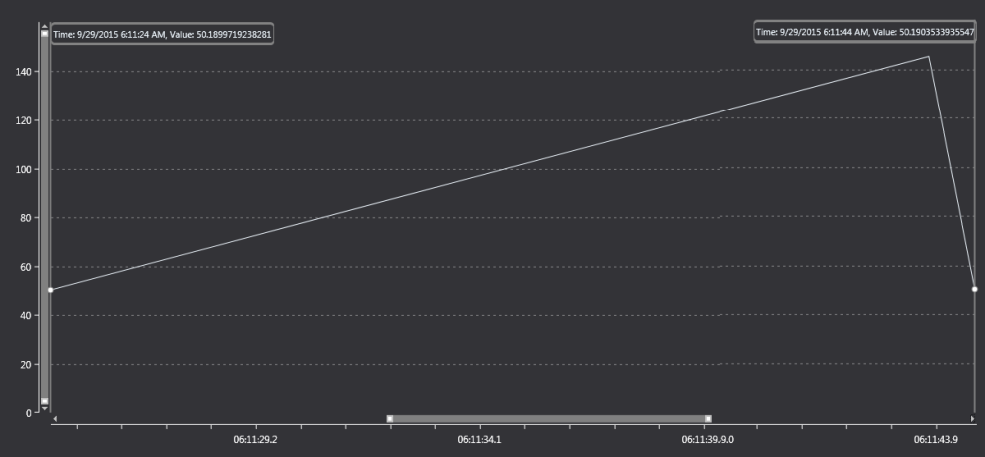

- You should get a sine signal with an offset of 100, an amplitude of 50 and a period of 20 seconds, as shown below.

Configure item Signal2 as signal type sawtooth

- Follow the same steps as for Signal1, but change the waveform to Sawtooth.

- The signal should look like the picture below, with the same amplitude (50), bias (100) and period (20 seconds) as Signal1.

Configure item Signal2 as signal type square

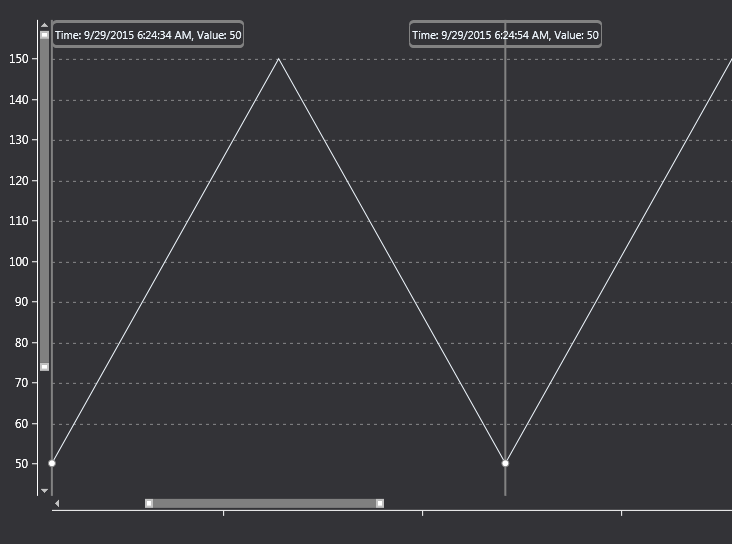

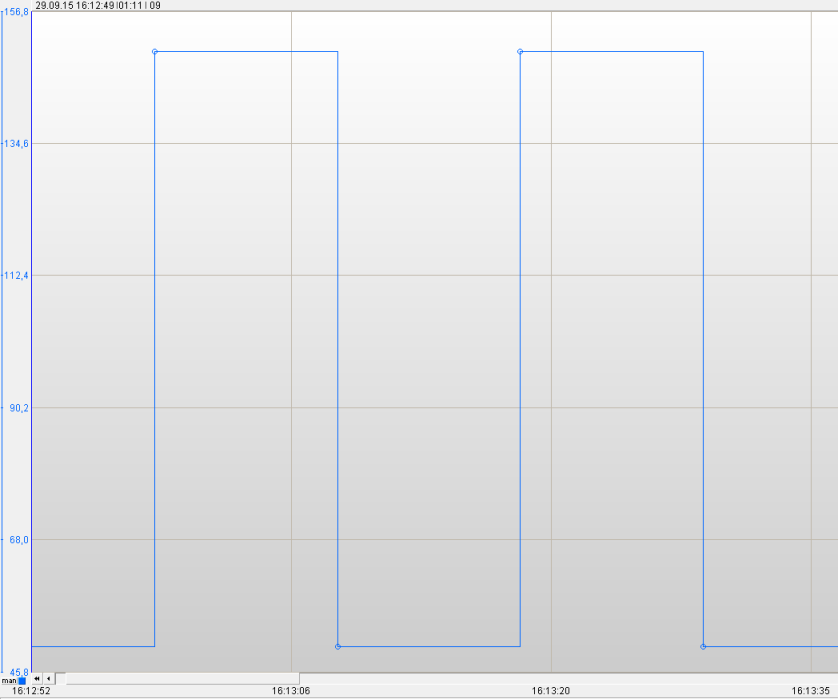

- Follow the same steps as for Signal2, but change the waveform to Square.

- The picture below shows just the data points, with a line drawn between them.

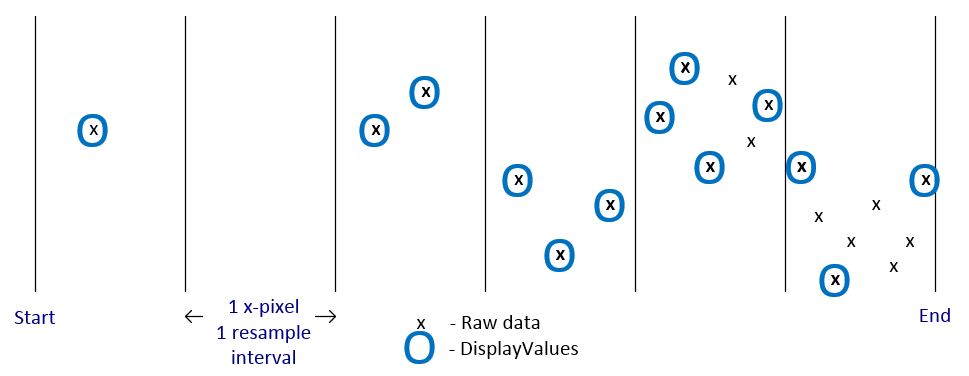

- The picture below shows a graph where the current value is kept until a new value is received. The actual values (raw data) are shown as circles on the curve.

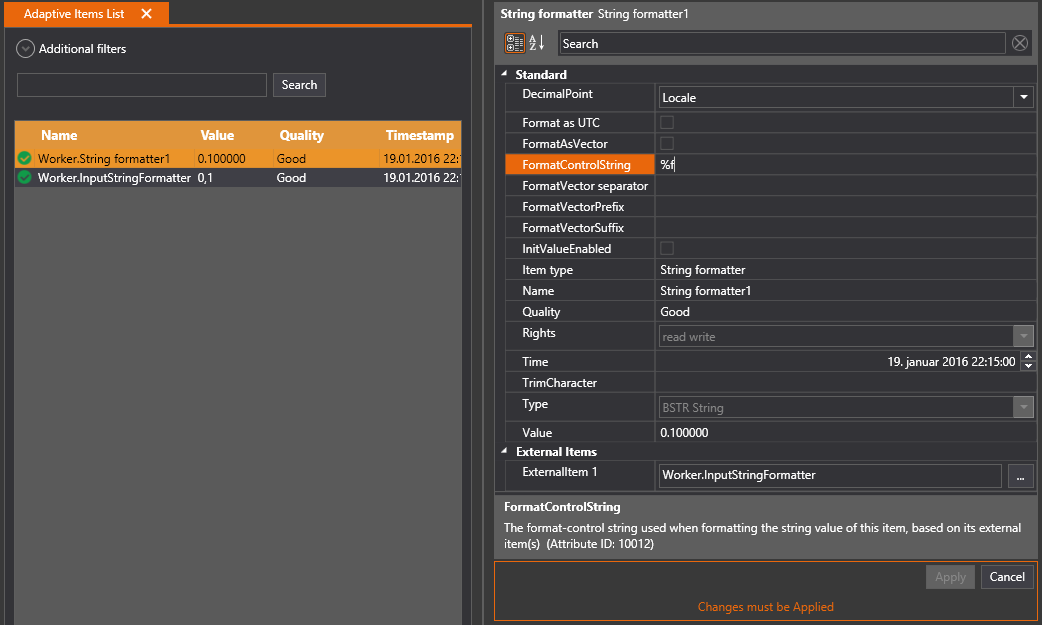
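The two trend views differ only in how they fill the gap between raw samples. The sketch below shows both interpolation rules for a hypothetical set of raw square-wave samples; it is not tied to any Apis trend tool, and the function names are illustrative.

```python
from bisect import bisect_right


def _index_at(times, t):
    i = bisect_right(times, t) - 1
    if i < 0:
        raise ValueError("t is before the first raw sample")
    return i


def held_value(samples, t):
    """Step trend: keep the last raw value until a new one is received."""
    times = [ts for ts, _ in samples]
    return samples[_index_at(times, t)][1]


def linear_value(samples, t):
    """Line trend: draw a straight line between consecutive raw values."""
    times = [ts for ts, _ in samples]
    i = _index_at(times, t)
    if i == len(samples) - 1:
        return samples[i][1]  # nothing to interpolate towards after the last sample
    (t0, v0), (t1, v1) = samples[i], samples[i + 1]
    return v0 + (v1 - v0) * (t - t0) / (t1 - t0)


# Hypothetical raw samples of the square signal: one value per edge.
raw = [(0.0, 150.0), (10.0, 50.0), (20.0, 150.0)]
print(held_value(raw, 5.0), linear_value(raw, 5.0))  # 150.0 100.0
```

Halfway between two edges the step view still shows the last value (150), while the line view shows a value (100) that was never produced by the signal. This is why the held view is the faithful picture of a square signal.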

How to configure Stringformatter item

This example explains how to add a Stringformatter item which is used to format a string based on external item(s) and a format-control string.

The variable type that supports this functionality is the item type StringFormatter on the ApisWorker module.

Add worker module

Follow the guide Add Module to Apis Hive to add a module of type ApisWorker to an Apis Hive Instance.

- After adding the module, select the new module named "Worker" from the Solution Explorer.

- Set the "ExchangeRate" property to e.g. 1000 ms. This is the update rate when this module exchanges data with other modules.

- Click on Apply

Add items

- Follow the guide Add Items to a Module, but this time select item type "StringFormatter".

- Click "Add item(s)"

- Click Ok

Connect String Formatter item to source item

The StringFormatter will format the value on the input item according to the value in the FormatControlString property.

Connect external item

- Right Click on the StringFormatter item and select Connect

- Select the item which should be used as source item. In this case I will use an item called Worker.InputStringFormatter

- Click Connect and then Ok

Configure FormatControlString property

- Select the StringFormatter item to get the property view of the item

- Set the value of FormatControlString to format the item based on the input value.

- Click Apply
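The accepted syntax for FormatControlString is not stated here. Assuming it follows C printf conventions (an assumption, so check the StringFormatter reference), Python's % operator gives a close preview of what a given format-control string does to an input value. The function name format_value is hypothetical.

```python
def format_value(format_control_string, value):
    """Apply a printf-style format-control string to one input value.

    Assumption: FormatControlString follows C printf conventions, which
    Python's % operator closely mirrors.
    """
    return format_control_string % (value,)  # wrap in a tuple so tuple values are not unpacked


print(format_value("Level: %.1f m", 3.14159))  # Level: 3.1 m
print(format_value("Batch %05d", 42))          # Batch 00042
```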

How to configure Variable item with reset value

This example explains how to automatically reset an item to a user-defined value after a given period. The variable type that supports this functionality is the item type "Variable Item" on the ApisWorker module.

Add worker module

Follow the guide Add Module to Apis Hive, but this time select a module of type ApisWorker from the Module type drop down list.

- After adding the module, select the new module named "ApisWorker1" from the Solution Explorer.

- Set the "ExchangeRate" property to e.g. 1000 ms. This is the update rate when this module exchanges data with other modules.

- Click on Apply

Add items

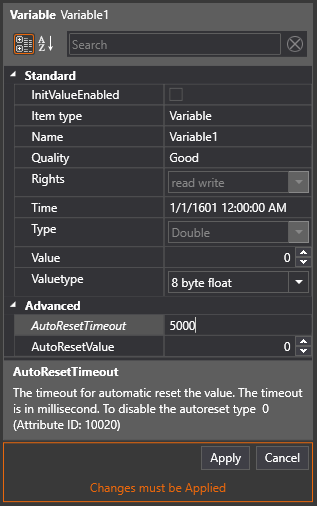

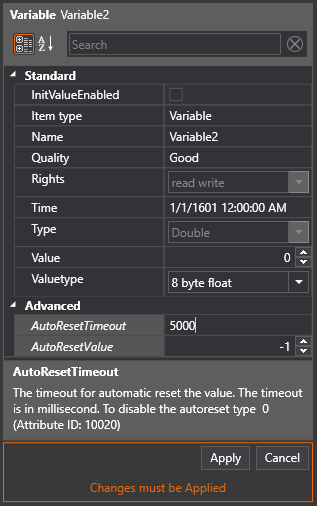

- Follow the guide Add Items to a Module, but this time select item type "Variable Item".

- Add two items by setting Count to 2

- Click 'Add item(s)'

- Select the items (in the item list) and change the Valuetype to 8-byte float (in the property view)

- Click Ok

Configure Value reset functionality

- In the items list view (All Items), select item ApisWorker1.Variable1 to get the item property

- Set the AutoResetTimeout to '5000' milliseconds

- Click Apply

- Do the same operation for ApisWorker1.Variable2 but set the AutoResetValue to -1

- Click Apply

Change the value of the item.

- Select item 'ApisWorker1.Variable1' and set the value to 60. After 5 seconds the value should be reset to 0

- Select item 'ApisWorker1.Variable2' and set the value to 30. After 5 seconds the value should be reset to -1
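The reset behaviour can be modelled as a timer that starts on each write. This is a sketch under assumptions: the class AutoResetVariable is hypothetical, time is passed in explicitly in milliseconds, the timeout is assumed to restart on every write, and the default reset value of 0 is inferred from the Variable1 result above.

```python
class AutoResetVariable:
    """Toy model of a Variable item with AutoResetTimeout/AutoResetValue (hypothetical class).

    Assumption: the timeout restarts on every write, and time is passed in
    explicitly (milliseconds) so the behaviour is easy to follow.
    """

    def __init__(self, auto_reset_value=0.0, auto_reset_timeout_ms=5000):
        self.auto_reset_value = auto_reset_value
        self.auto_reset_timeout_ms = auto_reset_timeout_ms
        self.value = auto_reset_value
        self._written_at_ms = None

    def write(self, value, now_ms):
        self.value = value
        self._written_at_ms = now_ms

    def read(self, now_ms):
        # Once the timeout has elapsed since the last write, fall back to the reset value.
        if (self._written_at_ms is not None
                and now_ms - self._written_at_ms >= self.auto_reset_timeout_ms):
            self.value = self.auto_reset_value
            self._written_at_ms = None
        return self.value


variable1 = AutoResetVariable(auto_reset_value=0.0)
variable2 = AutoResetVariable(auto_reset_value=-1.0)
variable1.write(60.0, now_ms=0)
variable2.write(30.0, now_ms=0)
print(variable1.read(4000), variable2.read(4000))  # 60.0 30.0
print(variable1.read(5000), variable2.read(5000))  # 0.0 -1.0
```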

How to configure Time item

This example explains how to add a time item, which displays a "clock" as its value in either UTC or local time. The variable type that supports this functionality is the item type Time on the ApisWorker module.

Add worker module

Follow the guide Add Module to Apis Hive to add a module of type ApisWorker to an Apis Hive Instance.

- After adding the module, select the new module named "Worker" from the Solution Explorer.

- Set the "ExchangeRate" property to e.g. 1000 ms. This is the update rate when this module exchanges data with other modules.

- Click on Apply

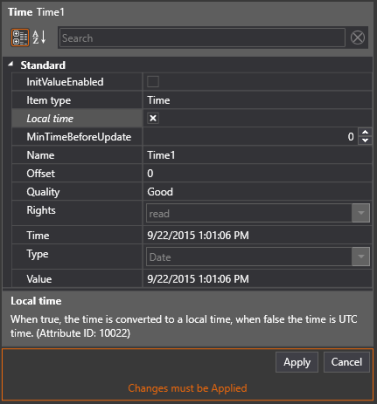

Add item type Time

- Add item named Time1 of type Time

- Click Add item(s)

- Click Ok

Configure item Type

- To display the value in local time, set the attribute 'Local time' to true. If you want to display the value in UTC, set the attribute 'Local time' to false

- Click Apply
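The 'Local time' attribute only changes how the same instant is presented. A minimal Python sketch of the two presentations (the function clock_value is hypothetical and says nothing about the value type Apis uses for Time items):

```python
from datetime import datetime, timezone


def clock_value(local_time):
    """Current time as a Time item might present it, depending on the 'Local time' attribute."""
    now_utc = datetime.now(timezone.utc)
    # astimezone() without an argument converts to the machine's local time zone.
    return now_utc.astimezone() if local_time else now_utc


print(clock_value(local_time=False).isoformat())
print(clock_value(local_time=True).isoformat())
```

Both values refer to the same moment; only the UTC offset shown differs, and it depends on the time zone of the machine running Apis Hive.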

How to configure Multiplexer item

This example explains how to use a multiplexer to select between a set of input items. The variable type that supports this functionality is the item type Multiplexer on the ApisWorker module.

Add worker module

Follow the guide Add Module to Apis Hive to add a module of type ApisWorker to an Apis Hive Instance.

- After adding the module, select the new module named "Worker" from the Solution Explorer.

- Set the "ExchangeRate" property to e.g. 1000 ms. This is the update rate when this module exchanges data with other modules.

- Click on Apply

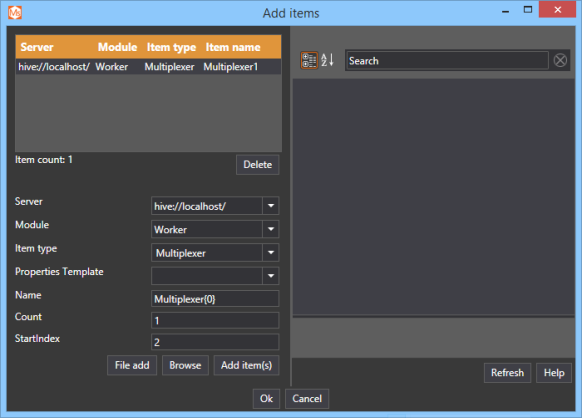

Add item type Multiplexer

- Follow the guide Add Items to a Module, but this time select item type "Multiplexer".

- Click "Add item(s)"

- Click Ok

Connect Multiplexer item to source item

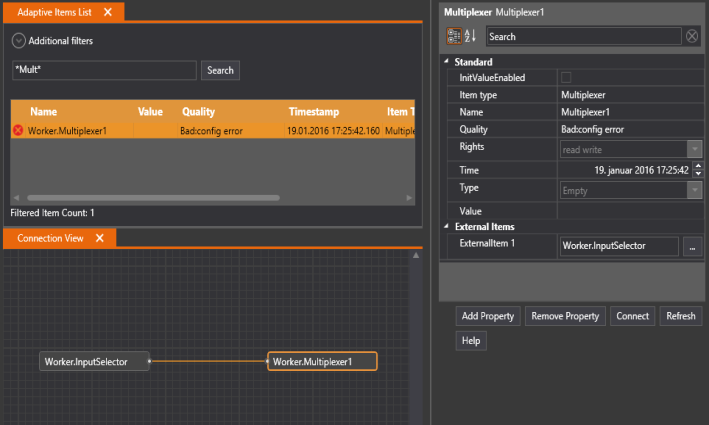

The number of inputs to select between is given by the connected external items. The first external item (ExternalItem1) is the selector, which determines which input is used as the value.

Connect selector item

- Make sure to connect the input selector item first, so that it becomes ExternalItem1

- Right Click on the Multiplexer item and select connect

- Select the item which should be used as selector item. In this case I will use an item called Worker.InputSelector

- The attribute overview of item "Multiplexer1" shows that the input selector item "Worker.InputSelector" is connected to ExternalItem1

Select which items to multiplex between

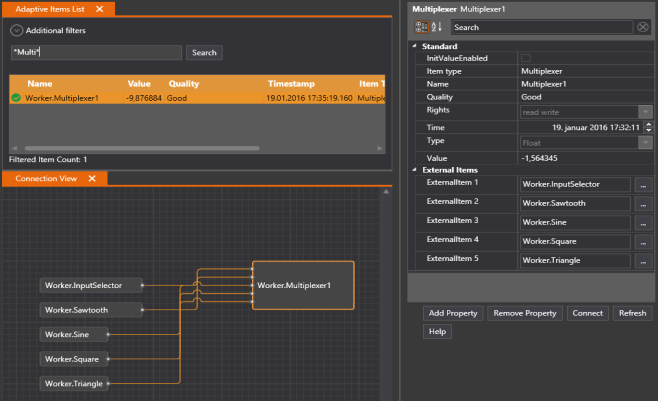

The items to multiplex between are given by the remaining external items: ExternalItem2 = input1, ..., ExternalItemN = input(N-1).

- Connect the Multiplexer item to input1, input2, input3 and input4.

- You should see the selector item as ExternalItem1, and ExternalItem2 to ExternalItem5 should be the different input items.
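The mapping from external items to the output can be sketched as follows. The function multiplex is hypothetical, and whether Apis counts the selector from 0 or 1, and how it handles an out-of-range selector, is not stated here; the sketch assumes 1-based selection (selector 1 picks input1) and raises an error otherwise.

```python
def multiplex(external_items):
    """Return the input chosen by the selector.

    external_items[0] plays the role of ExternalItem1 (the selector);
    external_items[1:] are input1..inputN (ExternalItem2..ExternalItemN+1).
    Assumption: selector value 1 picks input1 (1-based); Apis may number differently.
    """
    if len(external_items) < 2:
        raise ValueError("need a selector and at least one input")
    selector, inputs = external_items[0], external_items[1:]
    index = int(selector)
    if not 1 <= index <= len(inputs):
        raise IndexError(f"selector {index} is outside 1..{len(inputs)}")
    return inputs[index - 1]


# Selector = 3 with four inputs connected: the value of input3 is passed through.
print(multiplex([3, 10.0, 20.0, 30.0, 40.0]))  # 30.0
```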

How to configure Item Attribute item

In some cases you might want to make item attributes available as separate items in the Apis namespace. This example explains how to do just that. The variable type that supports this functionality is the item type Item Attribute Items on the ApisWorker, ApisOpc and ApisOpcUa modules. This example shows you how to configure item attribute items on an ApisWorker module.

Add worker module

Follow the guide Add Module to Apis Hive to add a module of type ApisWorker to an Apis Hive Instance.

- After adding the module, select the new module named "Worker" from the Solution Explorer.

- Set the "ExchangeRate" property to e.g. 1000 ms. This is the update rate when this module exchanges data with other modules.

- Click on Apply

Add items

- Make sure that you have the items with attributes available in the Apis namespace. In this example I will use a Worker Signal item.

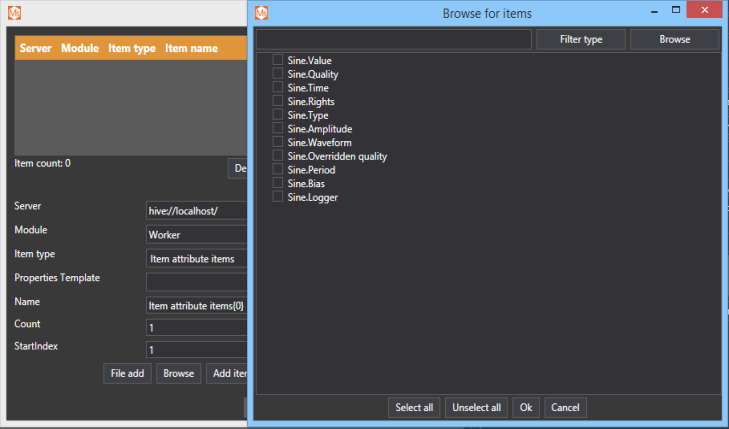

- Follow the guide Add Items to a Module, but this time select item type "Item type: Item Attribute Items".

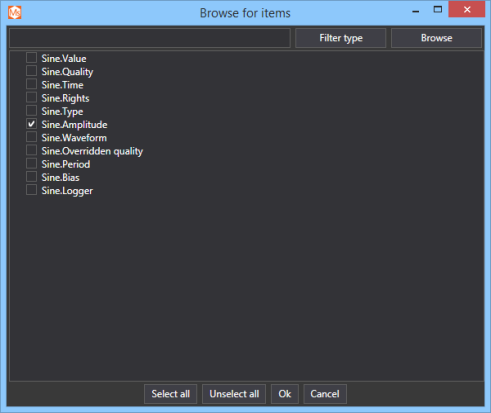

- Click the Browse button to get an overview of which attributes can be exposed as items.

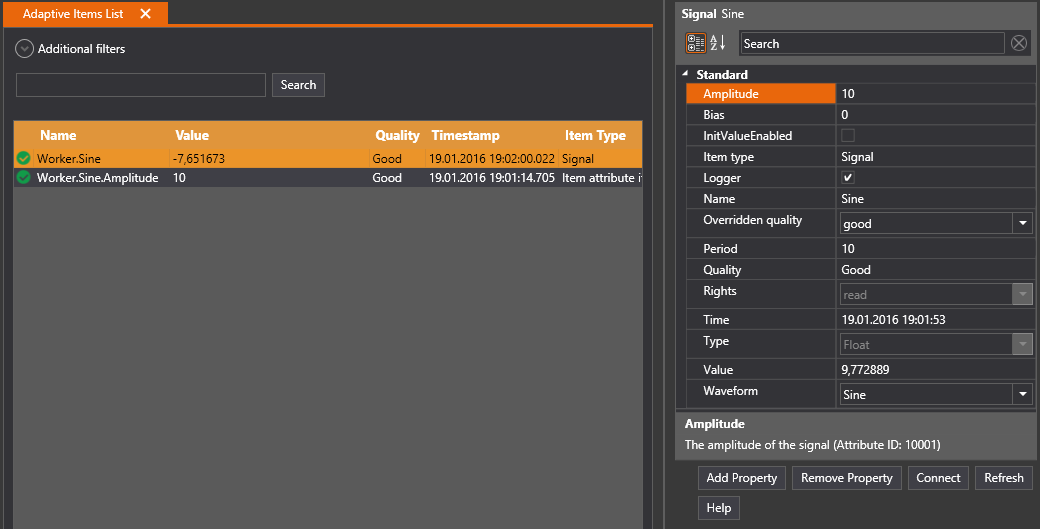

- Select Sine.Amplitude by clicking the check box on the left side of the item, and click the Ok button twice

- The access rights of the item Worker.Sine.Amplitude are given by the access rights of the attribute. Since Amplitude has read/write access, you should be able to change the amplitude both from the Apis namespace and from the item attribute property window. Updating either one (item or attribute) is automatically reflected in the other.

How to configure Bit Selector item

This example explains how to select a bit in a value and check whether the bit is set or not. The variable type that supports this functionality is the item type BitSelect on the ApisWorker module.

Add worker module

Follow the guide Add Module to Apis Hive to add a module of type ApisWorker to an Apis Hive Instance.

- After adding the module, select the new module named "Worker" from the Solution Explorer.

- Set the "ExchangeRate" property to e.g. 1000 ms. This is the update rate when this module exchanges data with other modules.

- Click on Apply

Add items

- Follow the guide Add Items to a Module, but this time select item type "BitSelect".

- Add two items by setting Count to 2

- Click Ok

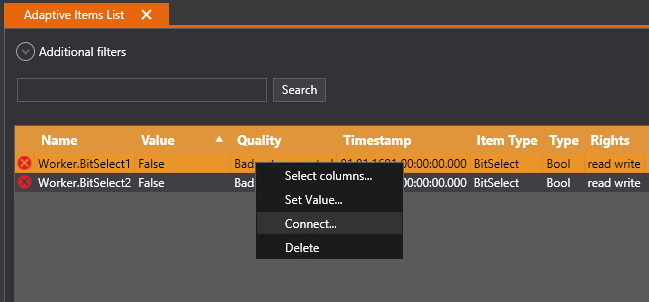

Connect Bit select item to source item

- Right click on the item and select Connect

- Select the source item to connect to the bit select item

- Click Connect and then Ok

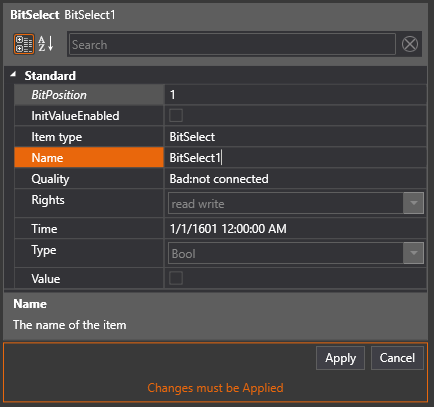

Configure which bit index to check of source item

- Select the bit select item to get the property view of the item

- Set the value of the BitPosition to check if the bit is set or not

- Click Apply

Result

- The bit select item should become true when the bit in position 1 is set and false if not.

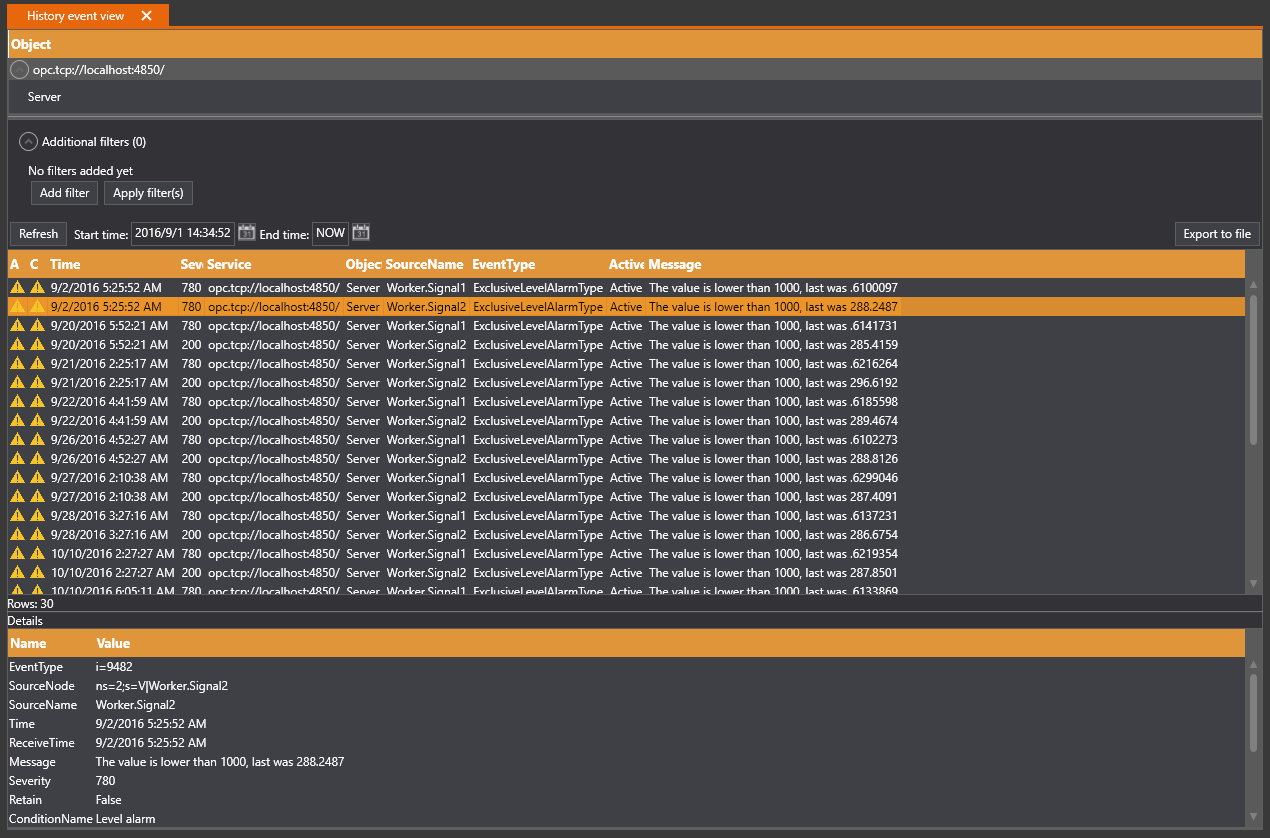
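The check a bit select item performs is a shift-and-mask on the source value. The sketch below assumes bits are numbered from 0 at the least significant bit; that numbering is an assumption, so verify it against the BitPosition documentation. The function name bit_is_set is hypothetical.

```python
def bit_is_set(value, bit_position):
    """True if bit number bit_position of value is 1.

    Assumption: bits are numbered from 0 (least significant bit).
    """
    return ((int(value) >> bit_position) & 1) == 1


# With BitPosition = 1, the item follows the second-lowest bit.
for v in (0, 1, 2, 3, 6):
    print(v, bit_is_set(v, 1))
```

Under this numbering, source values 2, 3 and 6 (binary 10, 11 and 110) make the item true, while 0 and 1 leave it false.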

Add Alarms

Setup Level Alarms

This example explains how to configure Apis Hive as an OPC AE service and add level alarms on items.

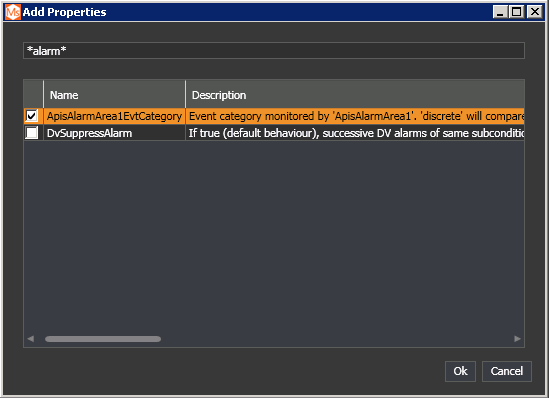

Follow the guide Add Module to Apis Hive, but this time select a module of type ApisAlarmArea from the "Module type" dropdown list.

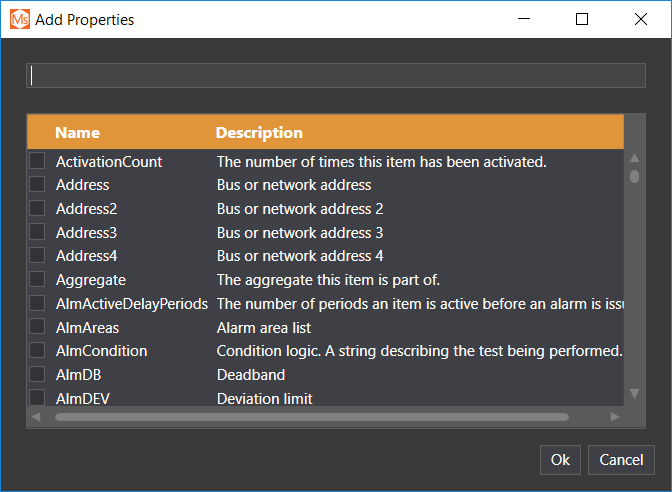

- In the Properties Editor, click on the button "Add Property"

- In the "Add property" dialog, type "*alarm*" in the filter field, and select the new global property "ApisAlarmArea1EvtCategory"

- Click "Ok"

- In the Properties Editor, click on the property "ApisAlarmArea1EvtCategory". From the dropdown menu, select the alarm category you want. In this example we'll use "level".

- Click "Apply".

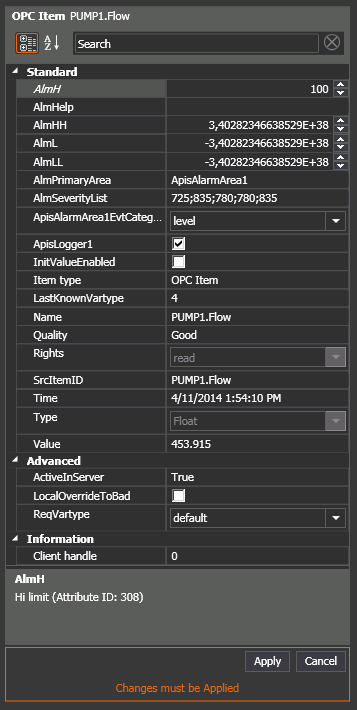

- The OPC DA alarm attributes AlmH, AlmHH, AlmL and AlmLL (Alarm High Limit, Alarm High-High Limit, Alarm Low Limit, Alarm Low-Low Limit) will be added to the item. Set the limits you want. In this example we set AlmH limit to 100.

- Click "Apply".

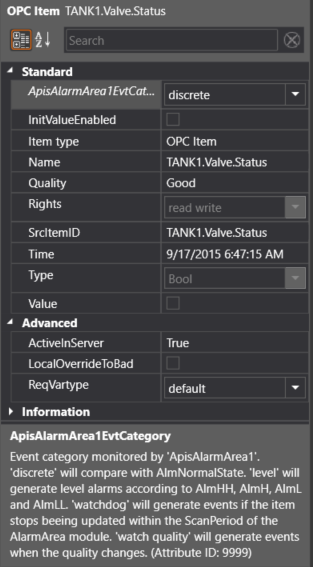
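How the four limits relate to an alarm state can be sketched as a simple check. The sub-state names match the UA_SUBSTATES attribute (Low, LowLow, High, HighHigh) of the level alarm event shown in the ApisEventBus section, but the rest is an assumption: strict comparisons, unset limits ignored, and no deadband. The function name is hypothetical.

```python
def level_alarm_substate(value, alm_h=None, alm_hh=None, alm_l=None, alm_ll=None):
    """Most severe level-alarm sub-state for value, or None when no limit is exceeded.

    Sub-state names follow UA_SUBSTATES (Low, LowLow, High, HighHigh).
    Assumptions: limits are strict (> or <), unset limits are ignored, no deadband.
    """
    if alm_hh is not None and value > alm_hh:
        return "HighHigh"
    if alm_h is not None and value > alm_h:
        return "High"
    if alm_ll is not None and value < alm_ll:
        return "LowLow"
    if alm_l is not None and value < alm_l:
        return "Low"
    return None


# Only AlmH = 100 is set, as in the example above.
for v in (90, 100, 120):
    print(v, level_alarm_substate(v, alm_h=100))
```

The more severe limits are checked first, so a value above AlmHH reports HighHigh rather than High.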

Setup Discrete Alarms

This example explains how to configure Apis Hive as an OPC AE service and add discrete alarms on items.

Follow the guide Add Module to Apis Hive, but this time select a module of type ApisAlarmArea from the "Module type" dropdown list.

- In the Properties Editor, click on the button "Add Property"

- In the "Add property" dialog, type "*alarm*" in the filter field, and select the new global property "ApisAlarmArea1EvtCategory"

- Click "Ok"

- In the Properties Editor, click on the property "ApisAlarmArea1EvtCategory". From the dropdown menu, select the alarm category you want. In this example we'll use "discrete".

- Click "Apply".

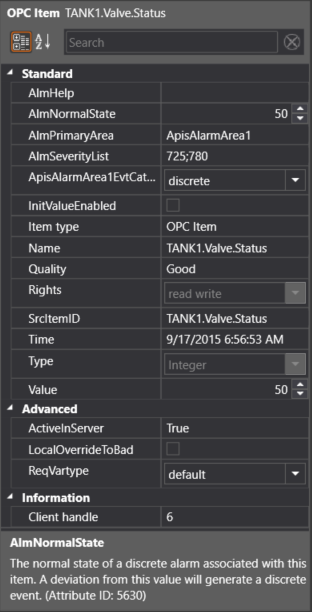

- The predefined Apis alarm attribute AlmNormalState will be added to the item. Set it to the value that represents the normal state. In this example we'll set AlmNormalState to 50.

- Click "Apply".

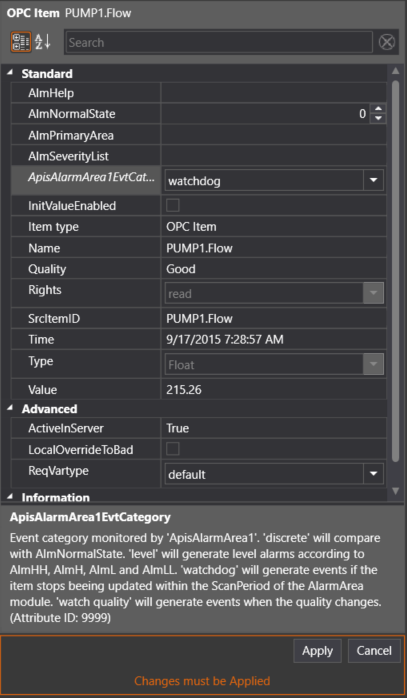

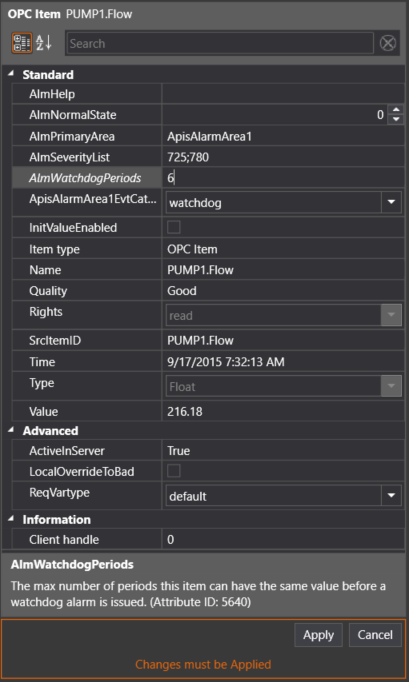

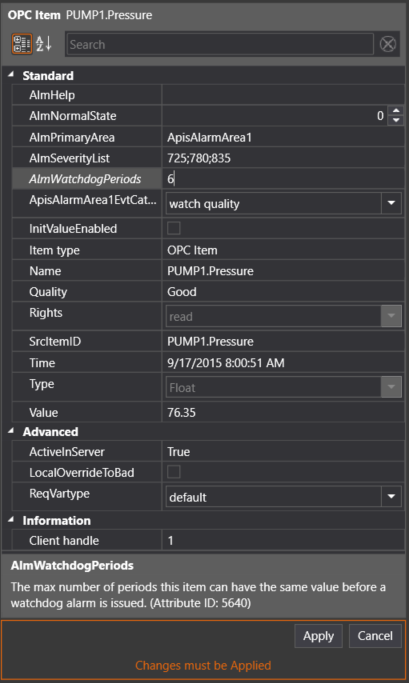

Setup Watchdog Alarms

This example explains how to configure Apis Hive as an OPC AE service and add watchdog alarms on items.

Follow the guide Add Module to Apis Hive, but this time select a module of type ApisAlarmArea from the "Module type" dropdown list.

- In the Properties Editor, click on the button "Add Property"

- In the Add property dialog, type "*alarm*" in the filter field, and select the new global property "ApisAlarmArea1EvtCategory"

- Click "Ok"

- In the Properties Editor, click on the property "ApisAlarmArea1EvtCategory". From the dropdown menu, select the alarm category you want. In this example we'll use "watchdog".

- Click "Apply".

- The predefined Apis alarm attribute AlmWatchdogPeriods will be added to the item. Set the watchdog period. In this example we set AlmWatchdogPeriods to 6.

Note: The watchdog period is counted in multiples of the module attribute ScanPeriod on the ApisAlarmAreaBee. With a scan period of 500 ms on the ApisAlarmAreaBee and AlmWatchdogPeriods set to 6, the watchdog alarm is triggered if the item's value has not changed within 3 seconds.

- Click "Apply".

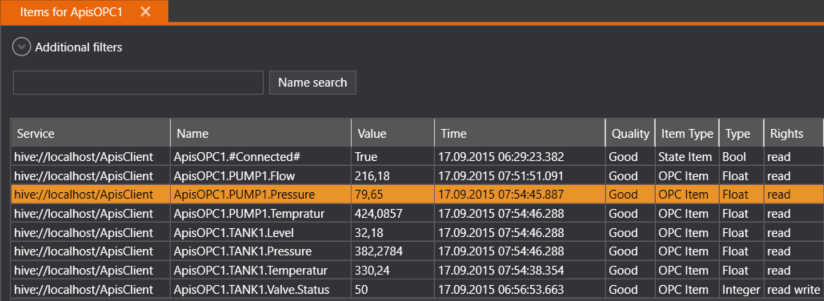

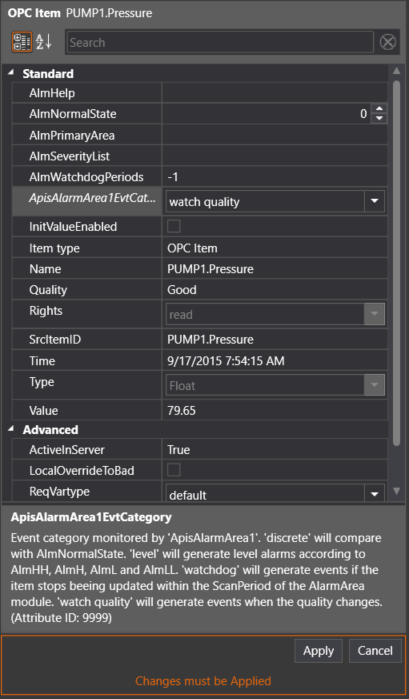
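The arithmetic in the note (6 periods of 500 ms = 3 seconds) and the "no change within the timeout" rule can be sketched as follows. The Watchdog class is a hypothetical model evaluated once per scan; whether Apis fires exactly at the timeout or only after it has passed is an assumption here (the sketch fires at the timeout).

```python
def watchdog_timeout_ms(scan_period_ms, alm_watchdog_periods):
    """Time without a value change before the watchdog alarm fires."""
    return scan_period_ms * alm_watchdog_periods


class Watchdog:
    """Hypothetical model of a watchdog alarm evaluated once per scan."""

    def __init__(self, scan_period_ms, alm_watchdog_periods):
        self.timeout_ms = watchdog_timeout_ms(scan_period_ms, alm_watchdog_periods)
        self._last_value = None
        self._last_change_ms = None

    def scan(self, value, now_ms):
        """Feed the item value at a scan; return True while the alarm is active."""
        if self._last_change_ms is None or value != self._last_value:
            self._last_value = value
            self._last_change_ms = now_ms
        return now_ms - self._last_change_ms >= self.timeout_ms


print(watchdog_timeout_ms(500, 6))  # 3000
wd = Watchdog(scan_period_ms=500, alm_watchdog_periods=6)
states = [wd.scan(7.0, t) for t in range(0, 3500, 500)]  # the value never changes
print(states)
```

The alarm stays inactive for the first six scans and becomes active at the seventh scan, 3000 ms after the last change; any new value resets the timer.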

Setup Watch Quality Alarms

This example explains how to configure Apis Hive as an OPC AE service and add watch quality alarms on items.

Follow the guide Add Module to Apis Hive, but this time select a module of type ApisAlarmArea from the "Module type" dropdown list.

- In the Properties Editor, click on the button "Add Property"

- In the "Add property" dialog, type "*alarm*" in the filter field, and select the new global property "ApisAlarmArea1EvtCategory"

- Click "Ok"

- In the Properties Editor, click on the property "ApisAlarmArea1EvtCategory". From the dropdown menu, select the alarm category you want. In this example we'll use "watch quality".

- Click "Apply".

- The predefined Apis alarm attribute AlmWatchdogPeriods will be added to the item. Set the watchdog period. In this example we set AlmWatchdogPeriods to 6.

Note: The watchdog period is counted in multiples of the module attribute ScanPeriod on the ApisAlarmAreaBee. In this example, with a scan period of 500 ms on the ApisAlarmAreaBee, an alarm is triggered if no change of the item's value has occurred within 3 seconds.

- Click "Apply".

Add Dynamic Calculations

The ApisCalculate module has been deprecated.

Instead, use Function items in any module supporting this item type, e.g. the ApisWorker module.

Process Events with ApisEventBus

Overview

The EventBus bee is used for event processing. It uses item types to define four basic processing components:

- Sources connect to something that can produce events, e.g. Chronical, Kafka.

- Sinks connect to something that can consume events, e.g. Chronical, RDBMS, Kafka.

- Channels are places where Sources can publish events, and Sinks can subscribe to events

- Routers move events between Channels with optional filtering and transformations

Channel item type

This item type defines a channel/queue with the same name as the item. By default, all events published to a channel are immediately forwarded to all subscribers. If the attribute BatchTime is set, events are grouped together for this number of seconds into a single event. If the attribute BatchSize is also specified, it overrides BatchTime when the specified number of events have been batched.
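The batching rules can be modelled as follows: a batch starts with the first event, is delivered when BatchTime seconds have passed, and is delivered early as soon as BatchSize events have been collected. The Channel class, the explicit time arguments and the periodic tick() call are assumptions made for illustration; the grouped event is represented as a plain list.

```python
class Channel:
    """Toy model of a Channel item with optional BatchTime/BatchSize (hypothetical class).

    Time is passed in explicitly (seconds). A batch is delivered as a list,
    standing in for the single grouped event.
    """

    def __init__(self, batch_time=None, batch_size=None):
        self.batch_time = batch_time
        self.batch_size = batch_size
        self._subscribers = []
        self._batch = []
        self._batch_started = None

    def subscribe(self, callback):
        self._subscribers.append(callback)

    def publish(self, event, now):
        if self.batch_time is None:
            self._deliver(event)  # default: forward immediately
            return
        if not self._batch:
            self._batch_started = now
        self._batch.append(event)
        # BatchSize takes over as soon as enough events have been collected.
        if self.batch_size is not None and len(self._batch) >= self.batch_size:
            self._flush()

    def tick(self, now):
        """Call periodically: deliver the batch once BatchTime seconds have passed."""
        if self._batch and now - self._batch_started >= self.batch_time:
            self._flush()

    def _flush(self):
        batch, self._batch = self._batch, []
        self._deliver(batch)

    def _deliver(self, event):
        for callback in self._subscribers:
            callback(event)


received = []
channel = Channel(batch_time=5, batch_size=3)
channel.subscribe(received.append)
channel.publish("e1", now=0)
channel.publish("e2", now=1)
channel.tick(now=5)  # 5 s after the first event: [e1, e2] is delivered
for e in ("e3", "e4", "e5"):
    channel.publish(e, now=6)  # the third event reaches BatchSize: delivered at once
print(received)  # [['e1', 'e2'], ['e3', 'e4', 'e5']]
```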

Router item type

This item type has attributes for Input channel, Output channel and Script. All events published to the input channel are filtered and/or transformed by the script, and the result is published on the output channel.
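The router's role can be sketched with a Python function standing in for the Script attribute. The script language the Router item actually uses is not described here, so this is only a model: SimpleChannel, Router and severe_only are hypothetical, and the convention that returning None drops an event is an assumption. The Severity value 780 is taken from the example event in the next subsection.

```python
class SimpleChannel:
    """Minimal publish/subscribe channel so the sketch is self-contained (hypothetical)."""

    def __init__(self):
        self._subscribers = []

    def subscribe(self, callback):
        self._subscribers.append(callback)

    def publish(self, event):
        for callback in self._subscribers:
            callback(event)


class Router:
    """Forward events from input to output, passing each through a script.

    Assumption: the script returns a (possibly transformed) event, or None to drop it.
    """

    def __init__(self, input_channel, output_channel, script):
        self._output = output_channel
        self._script = script
        input_channel.subscribe(self._on_event)

    def _on_event(self, event):
        result = self._script(event)
        if result is not None:
            self._output.publish(result)


def severe_only(event):
    # Filter: keep events with Severity >= 500; transform: tag them with a Priority field.
    if event["Severity"] < 500:
        return None
    return {**event, "Priority": "high"}


alarms, severe = SimpleChannel(), SimpleChannel()
received = []
severe.subscribe(received.append)
Router(alarms, severe, severe_only)
alarms.publish({"Source": "Worker.Variable1", "Severity": 780})
alarms.publish({"Source": "Worker.Variable2", "Severity": 100})
print(received)  # [{'Source': 'Worker.Variable1', 'Severity': 780, 'Priority': 'high'}]
```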

Source.Chronical item type

This item type creates a subscription for Chronical events in the local Hive instance. It has attributes used to specify an Event source, an Event type and an Output channel.
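Each event carries a Timestamp element with a raw value and a readable filetime attribute. In the example event that follows, the raw value 132313410105734253 is consistent with a Windows FILETIME (100-nanosecond ticks since 1601-01-01 UTC): converting it reproduces the filetime attribute, 2020-04-14T12:30:10.5734253Z. A minimal conversion sketch, useful when a sink or router needs a real timestamp (the function name is hypothetical):

```python
from datetime import datetime, timedelta, timezone

# Windows FILETIME counts 100-nanosecond ticks since 1601-01-01 00:00:00 UTC.
FILETIME_EPOCH = datetime(1601, 1, 1, tzinfo=timezone.utc)


def filetime_to_datetime(ticks):
    """Convert a FILETIME tick count to an aware UTC datetime.

    datetime has microsecond resolution, so the last 100-ns digit is truncated.
    """
    return FILETIME_EPOCH + timedelta(microseconds=ticks // 10)


print(filetime_to_datetime(132313410105734253).isoformat())
# 2020-04-14T12:30:10.573425+00:00
```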

The Source.Chronical item produces events like this:

<event>

<Timestamp value="132313410105734253" filetime="2020-04-14T12:30:10.5734253Z"/>

<Generation value="1"/>

<Sequence value="19708435"/>

<Source value="2166" name="Worker.Variable1">

<Area name="AlarmArea"/>

</Source>

<Type value="20" name="LevelAlarm">

<Attr>

<UA_NODEID value="0:0:9482"/>

<UA_SUBSTATES value="Low,LowLow,High,HighHigh"/>

<AE_CONDITION value="Level alarm"/>

</Attr>

</Type>

<State value="16777227" Enabled="1" Active="1" Acked="1" Low="1"/>

<Severity value="780"/>

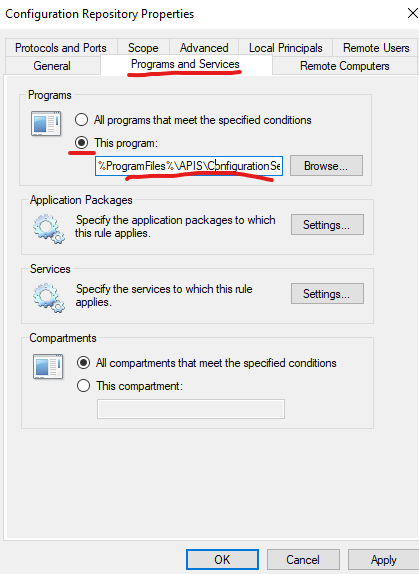

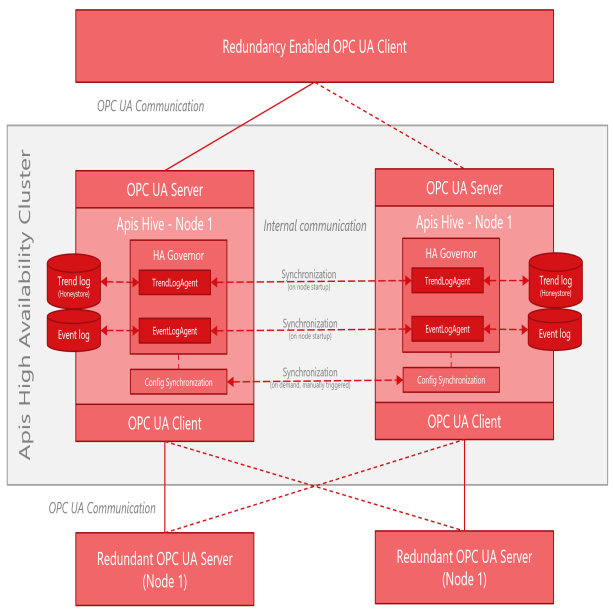

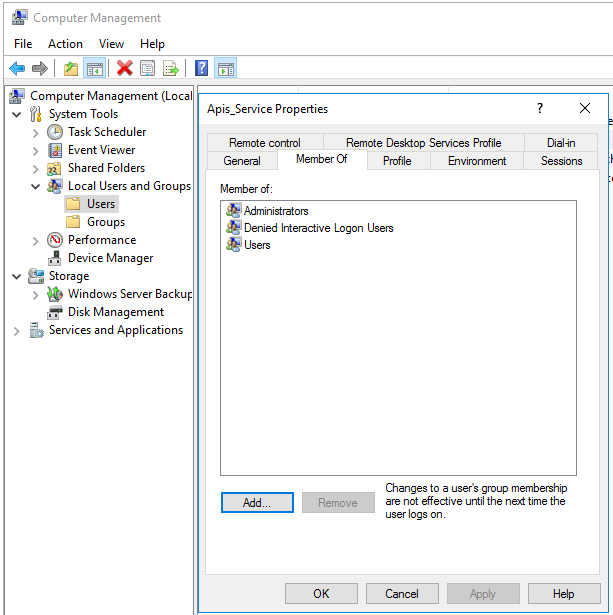

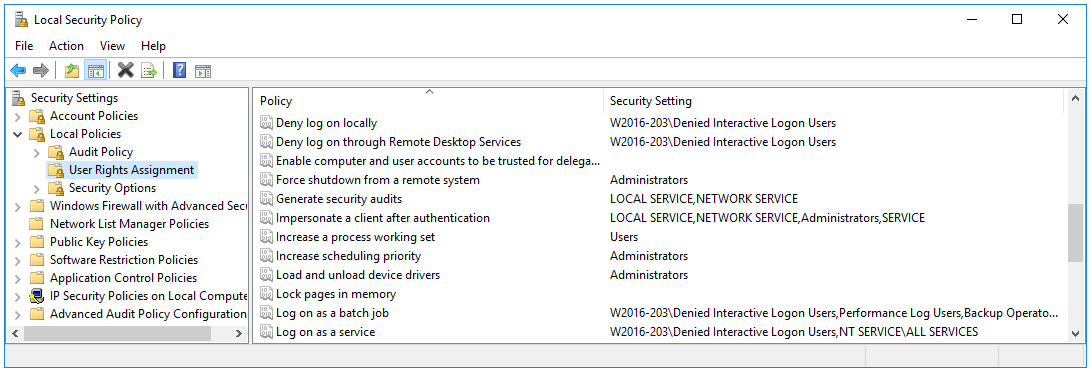

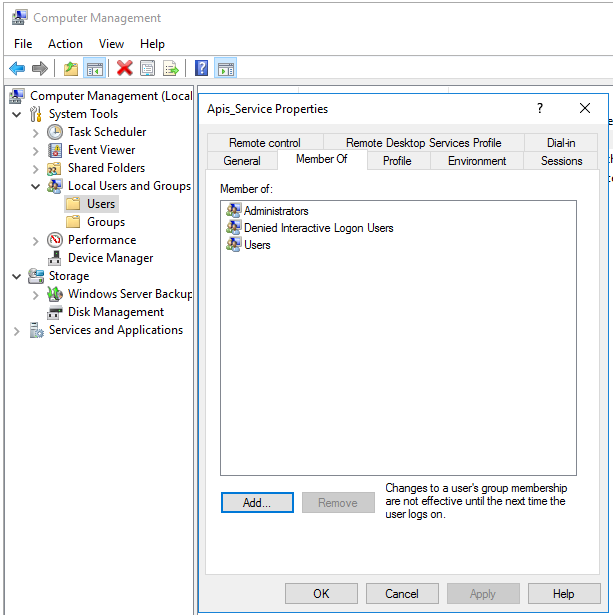

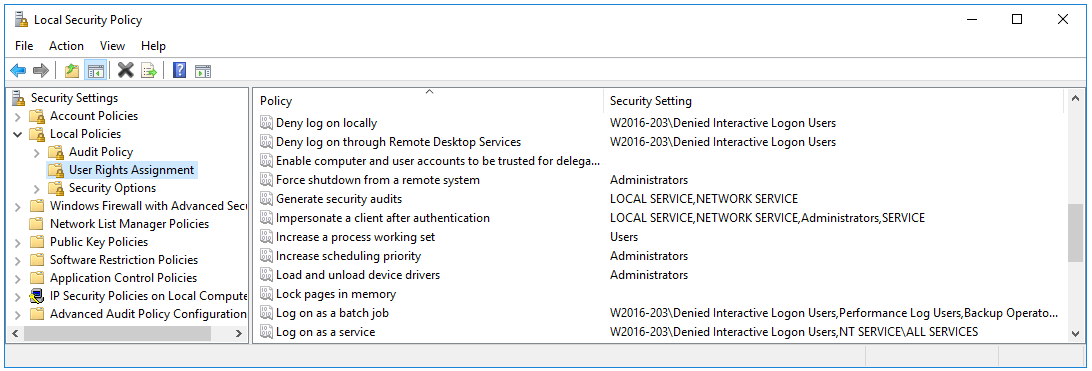

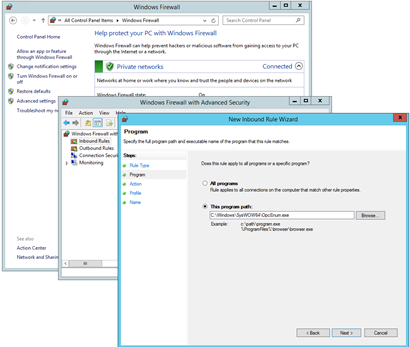

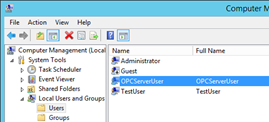

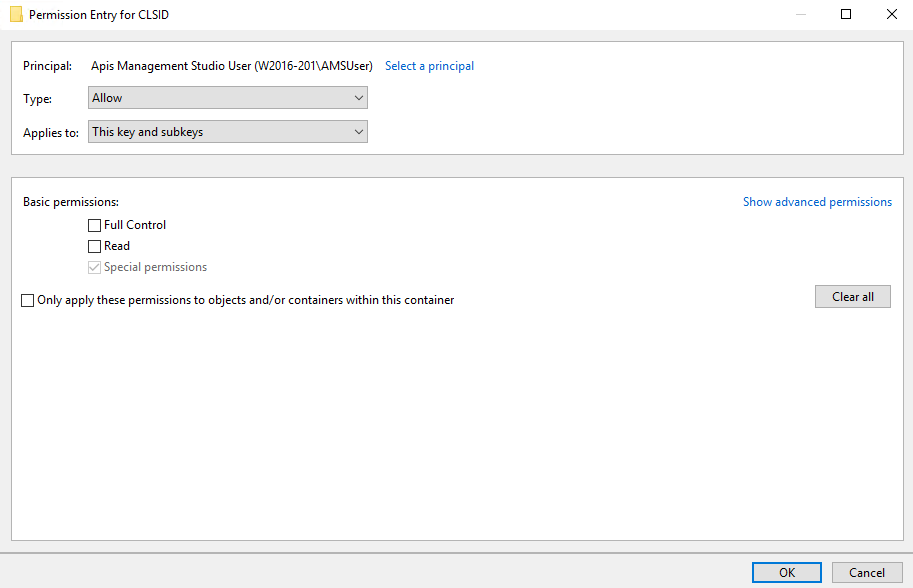

<Message value="The value is lower than 5, last was 0"/>